Last week I attended the IBM Big Data at the Speed of Business event at IBM’s Research facility in Almaden. At the event, IBM announced multiple capabilities around its big data initiative, including its new BLU Acceleration and IBM PureData System for Hadoop. Additionally, new versions of InfoSphere BigInsights and InfoSphere Streams (for data streams) were announced as enhancements to IBM’s Big Data Platform. A new version of Informix that includes time series acceleration was also announced.

The overall goal of these products is to make big data more consumable, i.e., to make it simple to manage and analyze big data. For example, IBM PureData System for Hadoop is basically Hadoop as an appliance, making it easier to stand up and deploy. Executives at the event said that a recent customer had gotten its PureData System “loading and interrogating data [in] 89 minutes.” The solution comes packaged with analytics and visualization technology too. BLU Acceleration combines a number of technologies, including dynamic in-memory processing and active compression, to make reporting and analytics 8-25x faster.

For me, some of the most interesting presentations focused on big data analytics. These included emerging patterns for big data analytics deployments, dealing with time series data, and the notion of the contextual enterprise.

Big data analytics use cases. IBM has identified five big data use cases from studying hundreds of engagements it has done across 15 different industries. These high value use cases include:

- 360 degree view of a customer: utilizing data from internal and external sources such as social chatter to understand behavior and “seminal psychometric markers” to gain insight into customer interactions.

- Security/Intelligence: utilizing data from sources like GPS devices and RFID tags and consuming it quickly enough to protect individuals from fraud or cyber attack.

- Optimizing infrastructure: utilizing machine generated data such as IT log data, web data, and asset tags to improve a service or monetize it.

- Data warehouse augmentation: extending the trusted data in a data warehouse by integrating other data with it, such as unstructured information.

- Exploration: visualizing and understanding more business data by unifying data across different silos to identify patterns or problems.

(For more information on these use cases, there is a good podcast by Eric Sall.)

Big data and time series. I was happy to see that Informix can handle time series data (it has been doing that for several years) and that the market is beginning to understand the value of time series data in big data analytics. According to IBM, this is being driven in part by the introduction of new technologies like RFID tags and smart meters. Think about a utility company collecting time series data from the smart meter on your house. This data can be analyzed not only to compute your bill, but to do more sophisticated analysis like predicting outages. Now, it will be faster to analyze this data because BLU Acceleration will be used with IBM Informix. This is a case of a new kind of data being analyzed using new technology.

The contextual enterprise. Michael Karasick, VP of IBM Research, talked about the notion of the Contextual Enterprise, which is a new holistic approach of dynamically building and accumulating context at scale from disparate data sources to deliver client value. This approach utilizes data from what IBM calls systems of engagement (sources such as email, social data, media) together with traditional data sources in a gather, connect, reason, and adapt loop.

There is definitely a lot to wrap your head around in these big data announcements. The bottom line though is that the goal of these new products is to provide ease of use and improvements in performance and capabilities which can help improve big data analytics. The products can help improve what companies have already been doing with analytics because it is now faster to do it or they can help companies to perform new kinds of analysis that they couldn’t do before. That is what big data analytics is about.

Posted by Fern Halper, Ph.D.

By Philip Russom, TDWI Research Director

[NOTE -- My new TDWI report “Integrating Hadoop into Business Intelligence (BI) and Data Warehousing (DW)” (Hadoop4BIDW) is finished and will be published in early April. I will broadcast the report’s Webinar on April 9, 2013 at noon ET. In the meantime, I’ll leak a few of the report’s findings in this blog series. Search Twitter for #Hadoop, #TDWI, and #Hadoop4BIDW to find other leaks. Enjoy!]

Hadoop is still rather young, so it needs a number of upgrades to make it more palatable to BI professionals and mainstream organizations in general. Luckily, a number of substantial improvements are coming.

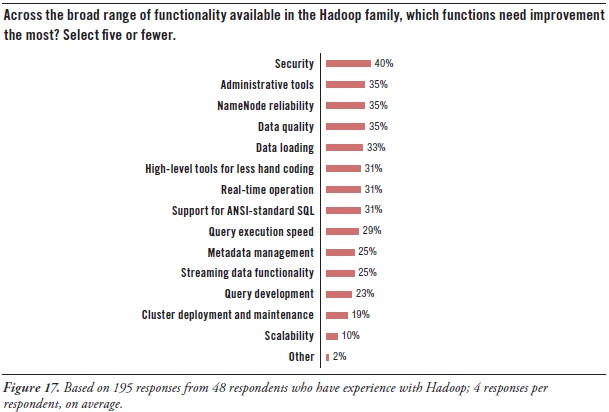

Hadoop users’ greatest needs for advancement concern security, tools, and high availability:

Security. Hadoop today includes a number of security features, such as file-permission checks and access control for job queues. But the preferred function seems to be Service Level Authorization, which is the initial authorization mechanism that ensures clients connecting to a particular Hadoop service have the necessary, pre-configured permissions. Furthermore, add-on products that provide encryption or other security measures are available for Hadoop from a few third-party vendors. Even so, there’s a need for more granular security at the table level in HBase, Hive, and HCatalog.

Administration. As noted earlier, much of Hadoop’s current evolution is at the tool level, not so much in the HDFS platform. After security, users’ most pressing need is for better administrative tools (35% in Figure 17 above), especially for cluster deployment and maintenance (19%). The good news is that a few vendors offer tools for Hadoop administration, and a major upgrade of open-source Ambari is coming soon.

High availability. HDFS has a good reputation for reliability, due to the redundancy and failover mechanisms of the cluster it sits atop. However, HDFS is currently not a high availability (HA) system, because its architecture centers around NameNode. NameNode holds the directory tree of all files in the file system, and it tracks where file data is kept across the cluster. The problem is that NameNode is a single point of failure. While the loss of any other node (intermittently or permanently) does not result in data loss, the loss of NameNode brings the cluster down. The permanent loss of NameNode data would render the cluster's HDFS inoperable, even after restarting NameNode.

A BackupNameNode is planned to provide HA for NameNode, but Apache needs more and better contributions from the open source community before it’s operational. There’s also Hadoop SecondaryNameNode (which provides a partial, latent backup of NameNode) and third-party patches, but these fall short of true HA. In the meantime, Hadoop users protect themselves by putting NameNode on especially robust hardware and by regularly backing up NameNode’s directory tree and other metadata.
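
To make the single-point-of-failure argument concrete, here is a minimal toy model in Python. It is not Hadoop code and calls no Hadoop API; the class names `ToyNameNode` and `ToyCluster`, the replication factor of 3, and the way blocks are placed on nodes are all assumptions invented for illustration. The sketch only shows that replicated blocks survive the loss of one data node, while losing the one copy of the file-to-block mapping leaves the surviving blocks unlocatable.

```python
# Toy model only: none of these names exist in Hadoop.

class ToyNameNode:
    """Holds the only copy of the file -> block -> node mapping."""
    def __init__(self):
        self.files = {}            # file path -> list of block ids
        self.block_locations = {}  # block id -> set of data node names


class ToyCluster:
    def __init__(self, node_names, replication=3):
        self.replication = replication
        self.data_nodes = {name: {} for name in node_names}  # node -> {block id: bytes}
        self.name_node = ToyNameNode()

    def write(self, path, blocks):
        """Store each block on `replication` nodes and record where it went."""
        names = sorted(self.data_nodes)
        block_ids = []
        for i, payload in enumerate(blocks):
            block_id = f"{path}#{i}"
            targets = [names[(i + k) % len(names)] for k in range(self.replication)]
            for node in targets:
                self.data_nodes[node][block_id] = payload
            self.name_node.block_locations[block_id] = set(targets)
            block_ids.append(block_id)
        self.name_node.files[path] = block_ids

    def read(self, path):
        if self.name_node is None:
            raise RuntimeError("NameNode metadata lost: blocks cannot be located")
        parts = []
        for block_id in self.name_node.files[path]:
            live = [n for n in self.name_node.block_locations[block_id]
                    if n in self.data_nodes]
            if not live:
                raise RuntimeError(f"all replicas of {block_id} are gone")
            parts.append(self.data_nodes[live[0]][block_id])
        return b"".join(parts)

    def lose_data_node(self, name):
        del self.data_nodes[name]

    def lose_name_node(self):
        self.name_node = None


cluster = ToyCluster(["dn1", "dn2", "dn3", "dn4"])
cluster.write("/logs/a", [b"hello ", b"world"])

# Losing one data node: every block still has a surviving replica.
cluster.lose_data_node("dn2")
after_node_loss = cluster.read("/logs/a")

# Losing the metadata: the blocks still sit on disk but cannot be found.
cluster.lose_name_node()
try:
    cluster.read("/logs/a")
    readable_after_namenode_loss = True
except RuntimeError:
    readable_after_namenode_loss = False
```

In this toy, backing up `name_node` before the failure is what would make recovery possible, which is the same reason users back up NameNode's metadata in practice.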

Latency issues. A number of respondents are hoping for improvements that overcome the data latency of batch-oriented Hadoop. They want Hadoop to support real-time operation (31%), fast query execution (29%), and streaming data (25%). These will be addressed soon by improvements to Hadoop products like MapReduce, Hive, and HBase, plus the new Impala query engine.

Development tools. Again, many users need better tools for Hadoop, including development tools for metadata management (25%), query design (23%), and ANSI-standard SQL (31%), plus a higher-level approach that results in less hand coding (31%).

Want to learn more about big data and its management? Take courses at the TDWI World Conference in Chicago, May 5-10, 2013.

Enroll online.

Posted by Philip Russom, Ph.D.

By Philip Russom, TDWI Research Director

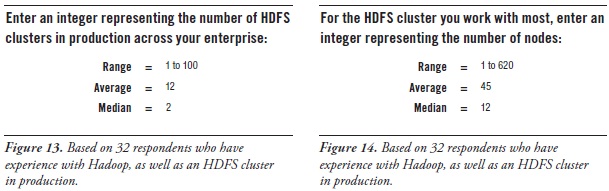

Number of HDFS clusters per enterprise. One way to measure the adoption of HDFS is to count the number of HDFS clusters per enterprise. Since far more people have downloaded HDFS and other Hadoop products than have actually put them to enterprise use, it’s best to only count those clusters that are in production use. The vast majority of survey respondents (and, by extension, most user organizations) do not have HDFS clusters in production. So, this report identified 32 respondents who do, and asked them about their clusters. (See Figure 13 above.)

When asked how many HDFS clusters are in production, 32 survey respondents replied in the range one to one hundred. Most responses were single digit integers, which drove the average number of HDFS clusters down to 12 and the median down to 2. Parsing users’ responses reveals that over half of respondents have only one or two clusters in production enterprise-wide at the moment, although one fifth have 50 or more.
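
The gap between the average and the median is what you would expect when a handful of large values sit alongside many small ones. As a rough illustration only, the sketch below uses a made-up list of ten cluster counts (hypothetical numbers, not the survey's responses) to show how a few big installations pull the mean well above the median.

```python
from statistics import mean, median

# Hypothetical cluster counts for ten imaginary respondents (NOT survey data).
clusters_per_respondent = [1, 1, 1, 2, 2, 2, 3, 5, 50, 100]

avg = mean(clusters_per_respondent)    # pulled upward by the two large values
mid = median(clusters_per_respondent)  # reflects the typical small deployment

print(f"mean = {avg:.1f}, median = {mid}")
```

For skewed counts like these, the median is usually the better description of a typical respondent.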

Note that ownership of Hadoop products can vary, as discussed earlier, thereby affecting the number of HDFS clusters. Sometimes central IT provides a single, very large HDFS cluster for shared use by departments across an enterprise. And sometimes departments and development teams have their own.

Number of nodes per HDFS cluster. We can also measure HDFS cluster maturity by counting the number of nodes in the average cluster. Again, the most meaningful count comes from clusters that are in production. (See Figure 14 above.)

When asked how many nodes are in the HDFS cluster most often used by the survey respondent, respondents replied in the range one to six hundred and twenty, where one third of responses were single digit. That comes to 45 nodes per production cluster on average, with the median at 12. Half of the HDFS clusters in production surveyed here have 12 or fewer nodes, although one quarter have 50 or more.

To add a few more data points to this discussion, people who work in large Internet firms have presented at TDWI conferences, talking about HDFS clusters with approximately one thousand nodes. However, speakers discussing fairly mature HDFS usage specifically in data warehousing usually have clusters in the fifty to one-hundred node range. Proof-of-concept clusters observed by TDWI typically have four to eight nodes, whereas development clusters may have but one or two.

Posted by Philip Russom, Ph.D.

By Philip Russom, TDWI Research Director

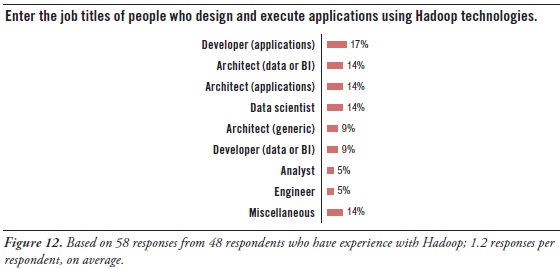

One way to get a sense of what kinds of technical specialists are working with HDFS and other Hadoop tools is to look at their job titles. So, this report’s survey asked a subset of respondents to enter the job titles of Hadoop workers. (See Figure 12 above.) Many users are concerned about acquiring the right people with the right skills for Hadoop, and this list of job titles can assist in that area.

Hadoop workers are typically architects, developers, data scientists, and analysts:

Architect. It’s interesting that the word architect appeared in more job titles than any other word, followed closely by the word developer. Among these, two titles stand out – data architect and application architect – plus miscellaneous titles like system architect and IT architect. Most architects (regardless of type) guide designs, set standards, and manage developers. So architects are most likely providing a management and/or governance function for Hadoop, since Hadoop has an impact on data, application, and system architectures.

Developer. Similar to the word architect, many job titles contained the word developer. Again, there’s a distinction between application developers and data (or BI) developers. Application developers may be there to satisfy Hadoop’s need for hand-coded solutions, regardless of the type of solution. And, as noted, some application groups have their own Hadoop cluster. The data and BI developers obviously bring their analytic expertise to Hadoop-based solutions.

Data Scientist. This job title has slowly gained popularity in recent years, and seems to be replacing the older position of business analyst. Another way to look at it is that some business analysts are proactively evolving into data scientists, because that’s what their organizations need from them. When done right, the data scientist’s job involves many skills, and most of those are quite challenging. For example, like a business analyst, the data scientist is also a hybrid worker who needs knowledge of both business and data (that is, data’s meaning, as well as its management). But the data scientist must be more technical than the average business analyst, doing far more hands-on work writing code, designing analytic models, creating ETL logic, modeling databases, writing very complex SQL, and so on. Note that these skills are typically required for high-quality big data analytics in a Hadoop environment, and the position of the data scientist originated for precisely that. Even so, TDWI sees the number of data scientists increasing across a wide range of organizations and industries, because they’re needed as analytic usage gets deeper and more sophisticated and as data sources and types diversify.

Analyst. Business analyst and data analyst job titles barely registered in the survey. Perhaps that’s because most business analysts rely heavily on SQL, relational databases, and other technologies for structured data, which are currently not well represented in Hadoop functionally. As noted, some analysts are becoming data scientists, as they evolve to satisfy new business requirements.

Miscellaneous. The remaining job titles are a mixed bag, ranging from engineers to marketers. This reminds us that big data analytics – and therefore Hadoop, too – is undergoing a democratization that makes it accessible to an ever-broadening range of end users who depend on data to do their jobs well.

Want more? Register for my Hadoop4BIDW Webinar, coming up April 9, 2013 at noon ET:

http://bit.ly/Hadoop13

Posted by Philip Russom, Ph.D.

By Philip Russom, TDWI Research Director

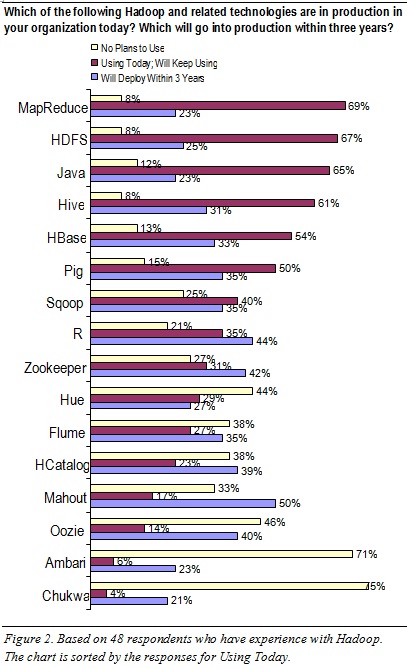

This report considers Hadoop an ecosystem of products and technologies. Note that some are more conducive to applications in BI, DW, DI, and analytics than others; and certain product combinations are more desirable than others for such applications.

To sort out which Hadoop products are in use today (and will be in the near future), this report’s survey asked: Which of the following Hadoop and related technologies are in production in your organization today? Which will go into production within three years? Which will you never use? (See Figure 2 above.) These questions were answered by a subset of 48 survey respondents who claim they’ve deployed or used HDFS. Hence, their responses are quite credible, being based on direct hands-on experience.

HDFS and a few add-ons are the most commonly used Hadoop products today. HDFS is near the top of the list (67% in Figure 2), because most Hadoop-based applications demand HDFS as the base platform. Certain add-on Hadoop tools are regularly layered atop HDFS today:

- MapReduce (69%). For the distributed processing of hand-coded logic, whether for analytics or for fast data loading and ingestion

- Hive (60%). For projecting structure onto Hadoop data, so it can be queried using a SQL-like language called HiveQL

- HBase (54%). For simple, record-store database functions against HDFS’ data
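
For readers who have not seen the kind of hand-coded logic MapReduce runs, here is a single-process sketch of the map, shuffle, and reduce steps in plain Python. It is an illustration of the programming model only: real Hadoop jobs are distributed across a cluster and are commonly written in Java, and the function names (`map_phase`, `shuffle`, `reduce_phase`, `run_job`) and the sample log lines are hypothetical.

```python
from collections import defaultdict


def map_phase(record):
    """Emit a (word, 1) pair for each word in one input record."""
    for word in record.lower().split():
        yield word, 1


def shuffle(pairs):
    """Group emitted values by key, as a framework would between the phases."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Collapse all values for one key into a single count."""
    return key, sum(values)


def run_job(records):
    pairs = (pair for record in records for pair in map_phase(record))
    return dict(reduce_phase(key, values) for key, values in shuffle(pairs).items())


# Hypothetical log lines standing in for data stored in HDFS.
counts = run_job(["error disk full", "warn disk slow", "error network"])
print(counts)
```

The developer writes only the map and reduce functions; grouping by key and spreading the work across nodes is what the framework contributes.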

MapReduce is used even more than HDFS. The survey results (which rank MapReduce slightly more common than HDFS) suggest that a few respondents in this survey population are using MapReduce today without HDFS, which is possible, as noted earlier. The high MapReduce usage also explains why Java and R ranked fairly high in the survey; these programming languages are not Hadoop technologies per se, but are regularly used for the hand-coded logic that MapReduce executes. Likewise, Pig ranked high in the survey, being a tool that enables developers to design logic (for MapReduce execution) without having to hand-code it.

Some Hadoop products are rarely used today. For example, few respondents in this survey population have touched Chukwa (4%) or Ambari (6%), and most have no plans for using them (75% and 71%, respectively). Oozie, Hue, and Flume are likewise of little interest at the moment.

Some Hadoop products are poised for aggressive adoption. For example, half of respondents (50%) say they’ll adopt Mahout within three years, with similar adoption projected for R (44%), Zookeeper (42%), HCatalog (40%), and Oozie (40%).

TDWI sees a few Hadoop products as especially up-and-coming. Usage of these will be driven up according to user demand. For example, users need analytics tailored to the Hadoop environment, as provided by Mahout (machine-learning based recommendations, classification, and clustering) and R (a programming language specifically for analytics). Furthermore, BI professionals are accustomed to DBMSs, and so they long for a Hadoop-wide metadata store and far better tools for HDFS administration and monitoring; these user needs are being addressed by HCatalog and Ambari, respectively, and therefore TDWI expects both to become more popular.

Posted by Philip Russom, Ph.D.

By Philip Russom, TDWI Research Director

The Hadoop Distributed File System (HDFS) and other Hadoop products show great promise for enabling and extending applications in BI, DW, DI, and analytics. But are user organizations actively adopting HDFS?

To quantify this situation, this report’s survey asked: When do you expect to have HDFS in production? (See Figure 1.) The question asks about HDFS, because in most situations (excluding some uses of MapReduce) an HDFS cluster must first be in place before other Hadoop products and hand-coded solutions are deployed atop it. Survey results reveal important facts about the status of HDFS implementations. A slight majority of survey respondents are BI/DW professionals, so the survey results represent the broad IT community, but with a BI/DW bias.

- HDFS is used by a small minority of organizations today. Only 10% of survey respondents report having reached production deployment.

- A whopping 73% of respondents expect to have HDFS in production. 10% are already in production, with another 63% upcoming. Only 27% of respondents say they will never put HDFS in production.

- HDFS usage will go from scarce to ensconced in three years. If survey respondents’ plans pan out, HDFS and other Hadoop products and technologies will be quite common in the near future, thereby having a large impact on BI, DW, DI, and analytics – plus IT and data management in general, and how businesses leverage these.

Figure 1. Based on 263 respondents: When do you expect to have HDFS in production?

10% = HDFS is already in production

28% = Within 12 months

13% = Within 24 months

10% = Within 36 months

12% = In 3+ years

27% = Never
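
The headline percentages quoted above follow directly from the Figure 1 breakdown. As a quick check, this short Python sketch adds them up; the dictionary simply restates the figure, and the variable names are mine.

```python
# Percentages as listed in Figure 1 (263 respondents).
figure_1 = {
    "HDFS is already in production": 10,
    "Within 12 months": 28,
    "Within 24 months": 13,
    "Within 36 months": 10,
    "In 3+ years": 12,
    "Never": 27,
}

in_production = figure_1["HDFS is already in production"]
never = figure_1["Never"]

# Everyone who plans a deployment but is not yet in production.
upcoming = sum(
    pct for answer, pct in figure_1.items()
    if answer not in ("HDFS is already in production", "Never")
)

expect_production = in_production + upcoming
within_three_years = in_production + sum(
    figure_1[answer]
    for answer in ("Within 12 months", "Within 24 months", "Within 36 months")
)

print(f"upcoming: {upcoming}%")
print(f"expect production: {expect_production}%")
print(f"in production within three years: {within_three_years}%")
print(f"total: {sum(figure_1.values())}%")
```

Note that the three-year figure is lower than the 73% total because the "In 3+ years" group falls outside that window.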

Hadoop: Problem or Opportunity for BI/DW?

Hadoop is still rather new, and it’s often deployed to enable other practices that are likewise new, such as big data management and advanced analytics. Hence, rationalizing an investment in Hadoop can be problematic. To test perceptions of whether Hadoop is worth the effort and risk, this report’s survey asked: Is Hadoop a problem or an opportunity? (See Figure 3.)

- The vast majority (88%) consider Hadoop an opportunity. The perception is that Hadoop products enable new application types, such as the sessionization of Web site visitors (based on Web logs), monitoring and surveillance (based on machine and sensor data), and sentiment analysis (based on unstructured data and social media data).

- A small minority (12%) consider Hadoop a problem. Fully embracing multiple Hadoop products requires a fair amount of training in hand-coding, analytic, and big data skills that most BI/DW and analytics teams lack at the moment. But (at a mere 12%) few users surveyed consider Hadoop a problem.

Figure 3. Based on 263 respondents: Is Hadoop a problem or an opportunity?

88% = Opportunity – because it enables new application types

12% = Problem – because Hadoop and our skills for it are immature

Posted by Philip Russom, Ph.D.