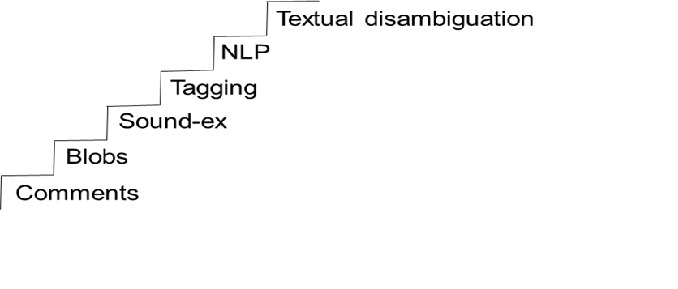

Textual Analytics: Climbing the Ladder

Textual analytics has come a long way from the early days of comment fields. Each step toward textual disambiguation has brought us a little closer to truly effective analytics for text.

By Bill Inmon, June 2, 2016

An evolution has occurred in textual analytics. The change has been so gradual that we have barely been aware of it. Each step of this evolution has solved a clear and present requirement, but each step has addressed only a limited aspect of textual analytics.

Let's look at each step briefly.

Comments

In the beginning were comments. Programmers found they needed to capture additional information, so they defined a field in their data structure called "comments" and stuffed text into it.

Comments served a purpose, but they had challenges. Defining the field length was always problematic; the comment was always longer or shorter than what was specified. It was universally an uncomfortable fit.

Of course, the real problem with the comments field was that once data was in the comments field, you couldn't do anything with it.

Blobs

Next came blobs (binary large objects): specially defined fields that could hold large amounts of undefined data. For the most part, blobs solved the problem of the comments field's irregular length, but they did nothing for the usability of the data inside them. You could have all sorts of data in your blob, but you still couldn't do anything with it.

Soundex and Stemming

Next came Soundex and stemming, the first methods that went into the blob and tried to make sense of the text. Soundex was patented by Robert C. Russell in 1918, was later used to index U.S. census records, and became a tool for computer programmers in the 1960s.

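Soundex grouped words, typically surnames, by how they sound, so that "Robert" and "Rupert" receive the same code. Stemming reduced related word forms to a common root by stripping suffixes, so that "connected," "connecting," and "connection" all become "connect." In many cases, grouping words on one of these common foundations let programmers match related words that a literal comparison would miss.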
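To make the two ideas concrete, here is a minimal Python sketch of American Soundex alongside a deliberately naive suffix-stripping stemmer. The function names and the short suffix list are illustrative assumptions; real stemmers such as the Porter algorithm apply many more rules than this toy does.

```python
# Letter-to-digit classes used by American Soundex.
# Vowels (and y) have no code; h and w are skipped entirely.
SOUNDEX_CODES = {
    letter: digit
    for letters, digit in (
        ("bfpv", "1"), ("cgjkqsxz", "2"), ("dt", "3"),
        ("l", "4"), ("mn", "5"), ("r", "6"),
    )
    for letter in letters
}


def soundex(name: str) -> str:
    """Encode a name as a letter followed by three digits."""
    letters = [c for c in name.lower() if c.isalpha()]
    if not letters:
        return ""
    result = letters[0].upper()
    # The first letter's code still counts when collapsing duplicates.
    prev = SOUNDEX_CODES.get(letters[0], "")
    for ch in letters[1:]:
        if ch in "hw":
            # h and w do not separate letters that share a code.
            continue
        code = SOUNDEX_CODES.get(ch, "")
        if code and code != prev:
            result += code
        # A vowel resets prev to "", so a repeated code after it counts again.
        prev = code
    return (result + "000")[:4]


# Hypothetical suffix list, longest first so "ions" wins over "s".
SUFFIXES = ("ions", "ion", "ing", "ed", "s")


def naive_stem(word: str) -> str:
    """Strip the first matching suffix, keeping a stem of at least 3 letters."""
    word = word.lower()
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


print(soundex("Robert"), soundex("Rupert"))  # R163 R163
print({naive_stem(w) for w in ["connected", "connecting", "connection"]})  # {'connect'}
```

Both names land in the same Soundex group, and the word forms collapse to one stem, which is the kind of grouping described above. Note that neither technique says anything about what the text means.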

Soundex and stemming were unquestionably pioneering steps in the quest to make sense of text. However, important as they were, text and language hold far more complexity than these techniques can capture.

Tagging

The next step in the evolution of textual analytics was word tagging. In word tagging, a document was read and important words were tagged. Words could be tagged for many purposes: keywords, action words, red flag words, etc.

Tagging words was a significant step toward textual analytics, but it had its own set of drawbacks.

The primary difficulty was that you often needed to identify the words to be tagged before you read the document. Many words that should have been tagged were not, making the results of tagging less than satisfactory.
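A minimal Python sketch of word tagging shows both the idea and its main weakness. The tag lists, tag names, and the tag_document function are hypothetical; the point is only that the vocabulary is fixed before any document is read.

```python
import re

# Hypothetical tag lists, defined before any document is read.
TAG_LISTS = {
    "keyword": {"invoice", "contract", "refund"},
    "action": {"cancel", "approve", "escalate"},
    "red_flag": {"lawsuit", "fraud"},
}


def tag_document(text: str) -> list[tuple[str, str]]:
    """Return (word, tag) pairs for every word found in a tag list."""
    tags = []
    for word in re.findall(r"[a-z']+", text.lower()):
        for tag, vocabulary in TAG_LISTS.items():
            if word in vocabulary:
                tags.append((word, tag))
    return tags


doc = ("The customer threatened a lawsuit and asked us to cancel the "
       "contract. She also mentioned litigation.")
print(tag_document(doc))
# [('lawsuit', 'red_flag'), ('cancel', 'action'), ('contract', 'keyword')]
```

The word "litigation" is a red flag to any human reader, but because it was not on a list before the document was read, it goes untagged.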

NLP and Taxonomies

Next came NLP (natural language processing), which uses taxonomies -- categories of related words -- to identify sentiment. Taxonomies were much more powerful and far more general than tagging (although it can be argued that the use of taxonomies and ontologies is an advanced form of tagging).

With NLP, it was possible to gather enough information to start to do real textual analytics, including sentiment analysis.
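The Python sketch below shows, in a deliberately simplified form, how a taxonomy can drive sentiment analysis. The TAXONOMY categories, the negation handling, and the scoring rule are all assumptions made for illustration; real NLP systems use much larger taxonomies and far richer linguistic processing.

```python
import re

# Hypothetical taxonomy: each category groups related words.
TAXONOMY = {
    "positive": {"like", "love", "enjoy", "great"},
    "negative": {"dislike", "hate", "awful", "terrible"},
    "negation": {"not", "don't", "never", "no"},
    "food": {"eggs", "toast", "bacon", "coffee"},
}


def categorize(word: str) -> list[str]:
    """Return every taxonomy category that contains the word."""
    return [cat for cat, words in TAXONOMY.items() if word in words]


def analyze(sentence: str) -> tuple[str, list[str]]:
    """Return an overall sentiment label and the food topics mentioned."""
    score, topics, negate = 0, [], False
    for word in re.findall(r"[a-z']+", sentence.lower()):
        cats = categorize(word)
        if "negation" in cats:
            # Simplification: negation flips the next sentiment word only.
            negate = True
        elif "positive" in cats or "negative" in cats:
            polarity = 1 if "positive" in cats else -1
            score += -polarity if negate else polarity
            negate = False
        if "food" in cats:
            topics.append(word)
    label = "positive" if score > 0 else "negative" if score < 0 else "neutral"
    return label, topics


print(analyze("I don't like scrambled eggs because they are too expensive."))
# ('negative', ['eggs'])
```

The taxonomy is what lets the program know that "like" carries sentiment and "eggs" is a topic, which plain tagging of predefined words does not organize.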

Textual Disambiguation

The most evolved form of textual analytics is textual disambiguation, which builds on the advances made by taxonomies and NLP. Textual disambiguation provides sentiment analysis plus two important advances over NLP: the focus on context and the ability to recognize word and sentence structures.

To understand the value of context, consider this simple example.

"I don't like scrambled eggs because they are too expensive."

Sentiment analysis tells us that the author does not like scrambled eggs, which is a useful piece of information.

Textual disambiguation also tells us that the author doesn't like scrambled eggs, but it tells us something more: why. The scrambled eggs are too expensive.

There could be many reasons why the author doesn't like scrambled eggs. The eggs may be runny. The eggs may be cold. The author may be a vegan. The eggs may have too much cholesterol. Textual disambiguation goes one step further than NLP and gives us a different level of useful information.
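As a rough illustration only, the Python sketch below separates an opinion from the reason given for it by splitting on a few causal markers. This is a hypothetical toy, not how Forest Rim Technology or any particular textual disambiguation product works; the CAUSAL_MARKERS list and the extract_opinion function are assumptions.

```python
# Hypothetical connectives that introduce a reason.
CAUSAL_MARKERS = ("because", "since", "due to")


def extract_opinion(sentence: str) -> dict:
    """Split a sentence into the claim and the reason given for it, if any."""
    text = sentence.strip().rstrip(".")
    lowered = text.lower()
    for marker in CAUSAL_MARKERS:
        idx = lowered.find(f" {marker} ")
        if idx != -1:
            # Skip the marker plus the spaces on either side of it.
            return {"claim": text[:idx], "reason": text[idx + len(marker) + 2:]}
    return {"claim": text, "reason": None}


print(extract_opinion("I don't like scrambled eggs because they are too expensive."))
# {'claim': "I don't like scrambled eggs", 'reason': 'they are too expensive'}
```

The output still contains the pronoun "they"; resolving it back to "scrambled eggs" is one of the word- and sentence-structure problems that real textual disambiguation has to handle.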

Textual disambiguation can also recognize word and sentence structures and use them to establish context, which increases its ability to provide meaningful analysis of text.

Like all evolutions, this one continues. Programmers began to create comments fields as early as 1965. Textual disambiguation is alive and well in 2016. The evolution has taken a paltry 50 years or so -- very quickly, as far as evolutions are concerned.

Even though textual disambiguation is an advanced technique, the evolution is not complete.

Stay tuned.

About the Author

Bill Inmon has written 54 books published in 9 languages. Bill’s company, Forest Rim Technology, reads textual narrative, disambiguates the text, and places the output in a standard data base. Once in the standard data base, the text can be analyzed using standard analytical tools such as Tableau, QlikView, Concurrent Technologies, SAS, and many more. His latest book is Data Lake Architecture.