Attendees of a recent TDWI Webinar asked excellent questions.

By Philip Russom, TDWI Research Director for Data Management

Recently, on Tuesday April 15, 2014, I broadcast a TDWI Webinar in which I presented some of the findings from my new TDWI report, Evolving Data Warehouse Architectures in the Age of Big Data. You can download a free copy of the report in a PDF file. And you can replay the Webinar.

Attendees of the Webinar posed several very good questions about various issues in data warehouse architecture. Please allow me to share a few of the attendees’ questions and the answers I sent them via e-mail:

Q. As we update our data warehouse from more reporting to more analytics functions, should we design a brand new data warehouse architecture, or improve from the existing one?

If the existing data warehouse and its architecture fulfill business requirements and technical performance requirements (for speed and scale), then you should try to build out the existing architecture. For that to work, your existing vendor platform under the warehouse must perform well with multiple mixed workloads, including analytic workloads; ask your vendor representative for customer references who’ve succeeded with mixed workloads. Also, building up data sets for advanced analytics typically means loading large data volumes into the warehouse, which may cost more money with some licenses; again, ask your vendor if there are such ramifications under your current license.

If your current core warehouse platform cannot support mixed workloads with high performance (or adding analytic data costs too much money), you may decide to manage and process large data sets for advanced analytics on a separate standalone platform that integrates with your warehouse. But in that case, you still keep your existing data warehouse and most of its data structures intact, just making slight changes for better integration with the new additional platform(s) for advanced analytics.

Q. Given the lack of integration across this multi-platform [data warehouse] environment, how do we avoid the need to replicate DW transactional sources into the big data platforms, as transactions are required in mining?

Good question, and there are a number of issues here. First, a well-designed multi-platform environment won’t suffer a “lack of integration.” TDWI’s definition of “logical data warehouse” is that the logical design specifies integration schemes (not just data models) across physically distinct platforms, whether that integration takes a data model approach (as in shared or conformed dimensions, etc.) or a data integration approach (as in jobs for ETL, replication, etc.) or both. Second, I take your point that replicating data more than needed can lead to a variety of problems, as data gets out of sync and loses integrity. A good architecture can minimize replication, and sometimes alleviate the need for it. Third, for decades, users have faced the same decision you’re looking at: do we store, manage, and analytically process our rich, valuable collection of transactional data in the warehouse proper or on a standalone but integrated platform, such as the usual operational data store (ODS)?

For years, a solution I’ve seen users successfully adopt is to deploy a homegrown ODS that they’ve designed and optimized for transactions. The ODS is on a standalone platform that’s integrated with the core warehouse (plus other ODSs, marts, etc.), running on a relational DBMS atop commodity priced hardware. Note that the upcoming trend is toward ODSs atop Hadoop (but only if the data volumes are massive). The idea is to manage transactional data on a platform that’s much cheaper than the DW, on a standalone platform where the relentless sorting, updating, and processing of that data won’t degrade warehouse performance. Yet, the ODS is easily reached from all tools, plus through data federation and virtualization as well, which minimizes the replication of transactional data.

If you give the ODS the capacity it needs to persist multiple sort orders and data subsets in the ODS, then copying data outside the ODS is further reduced. Also, if you use data mining tools that can work on data “in situ” (i.e., in the ODS’s relational database) without moving data to the tool, then that also reduces copying and moving transactional data.
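To make the “in situ” point concrete, here is a minimal sketch, assuming a toy in-memory SQLite database stands in for the ODS’s relational database. The table name, columns, and sample rows are hypothetical and chosen only for illustration. It contrasts pulling every transaction into the tool with letting the database do the aggregation, so that only summary rows leave the ODS.

```python
import sqlite3


def build_ods() -> sqlite3.Connection:
    """Create a toy in-memory 'ODS' with a hypothetical transactions table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE transactions (customer_id INTEGER, amount REAL)")
    rows = [(1, 20.0), (1, 35.0), (2, 10.0), (3, 50.0), (3, 5.0), (3, 45.0)]
    conn.executemany("INSERT INTO transactions VALUES (?, ?)", rows)
    return conn


def summarize_by_copying(conn: sqlite3.Connection):
    """Move every row to the tool, then aggregate there."""
    totals = {}
    rows_moved = 0
    query = "SELECT customer_id, amount FROM transactions"
    for customer_id, amount in conn.execute(query):
        rows_moved += 1
        totals[customer_id] = totals.get(customer_id, 0.0) + amount
    return totals, rows_moved


def summarize_in_situ(conn: sqlite3.Connection):
    """Aggregate inside the database; only summary rows are moved."""
    query = (
        "SELECT customer_id, SUM(amount) "
        "FROM transactions GROUP BY customer_id"
    )
    result = conn.execute(query).fetchall()
    return dict(result), len(result)
```

Both functions return the same totals per customer. The difference is how many rows cross the boundary between the database and the tool: every transaction in the first case, one row per customer in the second.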

Q. The need for data warehouses is never going to go away. But isn’t the separation between "operations" and "analytics" starting to blur? In other words, the future isn't DWE; it's a "data environment" that does both.

Operational BI is all about getting operational data into BI faster and more frequently, while also embedding BI functions in operational applications and their processes as well. Operational BI is a very popular practice. It has been for years, and will get even more popular, as organizations adjust their BI efforts to bring them closer to real time (to be more competitive, customer conscious, efficient, etc.). The widespread existence of operational BI corroborates that the line between operations and BI is already quite blurred and will become even more so.

In another trend, many organizations are purposefully evolving toward a more or less loosely unified data environment for most enterprise data. I say “more or less” and “loosely” because early adopters are quick to say that the architecture is not 100 percent of the enterprise and integration is spotty, on an “as needed” basis. As one architect joked, “it’s more archaeology than architecture, because the work usually consists of imposing a logical architecture over mature, preexisting systems.” For early adopters, it makes sense to architect data globally, when customer data and some other data domains are pervasively shared across multiple applications, departments, and processes. It also makes sense in firms where business processes ramble across multiple business units and IT systems. Obviously, there’s an infinitude of resulting enterprise data architectures.

The data warehouse environment (DWE) I’m describing is a local microcosm of such a broad and loosely unified multi-platform data architecture. However, in some organizations today, the data warehouse and similar data platforms are just a few among many other data platforms, integrated on an enterprise scale. But those organizations are as yet the minority, although we at TDWI expect it to be the norm for IT-intense organizations within five years. TDWI’s Vegas conference has been devoted to issues in enterprise-scale data architecture for years, and will continue to be. You might consider attending next February.

Q. Can you point us to white papers on the difference between reporting and analytics [and how that affects DW architecture]?

You can read my blog on the subject. Or you could read the new report on evolving data warehouse architectures, because I adapted material from the blog to become a section in the report, starting on page 24.

Q. What’s the role, or is there a role, for variants like an ODS in the new world [of data warehouse architectures]? Is it part of the real-time world?

Historically, some of the first standalone systems in a multi-platform data warehouse (going back to the mid-1990s) were ODSs deployed on their own hardware server with their own DBMS instances. These are still with us, and will continue to be with us, as data warehouse environments evolve into even more platforms used at once. An ODS can be designed and optimized by users for a wide range of data domains and uses (including real-time data), but I’m currently seeing a lot of users deploying ODSs for various types of big data and other data earmarked for advanced analytics.

Q. Saying Inmon vs. Kimball is no longer relevant is like saying Newton is no longer relevant in the world of physics today. It's still important, maybe not as fundamental as 1–2 decades ago.

For decades, Newton practiced alchemy in his copious spare time, because he was convinced that changing lead to gold was possible. Our heroes aren’t always 100 percent right.

Concerning Inmon and Kimball, see the top of page 7 in the report. Also please read the User Story on that same page. “No longer relevant” is your phrase, not mine. In my view, Inmon and Kimball’s innovations are as relevant as ever, and are still being applied daily. And they just keep giving: Inmon has recently extended our understanding of unstructured data and Kimball is currently working on new best practices for Hadoop.

It’s the users who’ve changed. Instead of arguing about which to choose, users choose to apply Inmon and Kimball techniques (and others, too) in the same extended warehouse environment. And that’s a wise choice on their part, since hybrids and diversity seem to be winning strategies for a growing number of user organizations and their diversified DW architectures nowadays.

Q. Some organizations consider Hadoop a replacement for their current DW appliance. How is this possible?

As I said in the Webinar, I’ve only found two organizations that took out a data warehouse and put Hadoop in its place. While that corroborates that a replacement is possible, it’s not likely, nor is it a compelling trend.

Instead of replacement, we at TDWI see far more users augmenting their data warehouse environment with the Hadoop Distributed File System (HDFS), plus related Hadoop tools, especially MapReduce, Hive, HBase, and Pig. In short, HDFS handles things that relational warehouses are not designed for, such as unstructured data, algorithmic analytics, millions of files, and petabyte-size data sets. But the relational warehouse is still best for the structured and multidimensional data that goes into standard reports, performance management, and set-based analytics (typically OLAP or SQL-based analytics).

Another possibility is that Hive atop MapReduce and HDFS makes a highly scalable “row store” type of database. Sometimes you don’t need a full-featured (and expensive) relational DBMS, and hence a row store will do just fine. For example, many of the ODSs found today in data warehouse environments are candidates for migration to Hadoop. That includes ODSs that manage large “archives” (I use the word loosely) of transactional data and other operational data that’s persisted and kept long-term for advanced analytics that just need simple tabular structures. Most standalone ODSs of that description today run on mature DBMSs, but could run almost as well (for less money) on Hadoop.

Finally, let’s remember that not all organizations need a data warehouse, as represented by 15 percent of survey respondents.

Q. Can you recommend any sample success stories on how to integrate Hadoop or similar big data into an existing data warehouse [environment]?

Yes, many real-world use cases and user stories are discussed in the 2013 TDWI report Integrating Hadoop into Business Intelligence and Data Warehousing.

Posted by Philip Russom, Ph.D.

By Philip Russom

Research Director for Data Management, TDWI

To help you better understand new practices for managing big data and why you should care, I’d like to share with you the series of 30 tweets I recently issued on the topic. I think you’ll find the tweets interesting, because they provide an overview of big data management and its best practices in a form that’s compact, yet amazingly comprehensive.

Every tweet I wrote was a short sound bite or stat bite drawn from my recent TDWI report “Managing Big Data.” Many of the tweets focus on a statistic cited in the report, while other tweets are definitions stated in the report.

I left in the arcane acronyms, abbreviations, and incomplete sentences typical of tweets, because I think that all of you already know them or can figure them out. Even so, I deleted a few tiny URLs, hashtags, and repetitive phrases. I issued the tweets in groups, on related topics; so I’ve added some headings to this blog to show that organization. Otherwise, these are raw tweets.

Types of Multi-Structured Data Managed as Big Data

1. #TDWI SURVEY SEZ: 26% of users manage #BigData that’s ONLY structured, usually relational.

2. #TDWI SURVEY SEZ: 31% manage #BigData that’s eclectic mix of struc, unstruc, semi, etc.

3. #TDWI SURVEY SEZ: 38% don’t have #BigData by any definition. Hear more in #TDWI Webinar Oct.8 noonET http://bit.ly/BDMweb

4. Structured (relational) data from traditional apps is most common form of #BigData.

5. #BigData can be industry specific, like unstruc’d text in insurance, healthcare & gov.

6. Machine data is special area of #BigData, with as yet untapped biz value & opportunity.

Reasons for Managing Big Data Well

7. Why manage #BigData? Keep pace w/growth, biz ROI, extend ent data arch, new apps.

8. Want to get biz value from #BigData? Manage #BigData for purposes of advanced #analytics.

9. #BigDataMgt yields larger samples for apps that need it: 360° views, risk, fraud, customer seg.

10. #TDWI SURVEY SEZ: 89% feel #BigDataMgt is opportunity. Mere 11% think it’s a problem.

11. Key benefits of #BigDataMgt are better #analytics, datasets, biz value, sales/marketing.

12. Barriers to #BigDataMgt: low maturity, weak biz support, new design paradigms.

13. #BigDataMgt non-issues: bulk load, query speed, scalability, network bandwidth.

Strategies for Users’ Big Data Management Solutions

14. #TDWI SURVEY SEZ: 10% have #BigDataMgt solution in production; 10% in dev; 20% prototype; 60% nada. #TDWI Webinar Oct.8 http://bit.ly/BDMweb

15. #TDWI SURVEY SEZ: Most common strategy for #BigDataMgt: extend existing DataMgt systems.

16. #TDWI SURVEY SEZ: 2nd most common strategy for #BigDataMgt: deploy new DataMgt systems for #BigData.

17. #TDWI SURVEY SEZ: 30% have no strategy for #BigDataMgt though they need one.

18. #TDWI SURVEY SEZ: 15% have no strategy for #BigDataMgt cuz they don’t need one.

Ownership and Use of Big Data Management Solutions

19. Some depts. & groups have own #BigDataMgt platforms, including #Hadoop. Beware teramart silos!

20. Trend: #BigDataMgt platforms supplied by IT as infrastructure. Imagine shared #Hadoop cluster.

21. Who does #BigDataMgt? analysts 22%; architects 21%; mgrs 21%; tech admin 13%; app dev 11%.

Tech Specs for Big Data Management Solutions

22. #TDWI SURVEY SEZ: 97% of orgs manage structured #BigData, followed by legacy, semi-struc, Web data etc.

23. Most #BigData stored on trad drives, but solid state drives & in-memory functions are gaining.

24. #TDWI SURVEY SEZ: 10-to-99 terabytes is the norm for #BigData today.

25. #TDWI SURVEY SEZ: 10% have broken the 1 petabyte #BigData barrier. Another 13% will within 3 years.

A Few Best Practices for Managing Big Data

26. For open-ended discovery-oriented #analytics, manage #BigData in original form wo/transformation.

27. Reporting and #analytics are different practices; managing #BigData for each is, too.

28. #BigData needs data standards, but different ones compared to other enterprise data.

29. Streaming #BigData is easy to capture & manage offline, but tough to process in #RealTime.

30. Non-SQL, non-relational platforms are coming on strong; BI/DW needs them for diverse #BigData.

Want to learn more about managing big data?

For a much more detailed discussion (in a traditional publication!), get the TDWI Best Practices Report, titled Managing Big Data, available in a PDF file via a free download.

You can also register for and replay my TDWI Webinar, where I present the findings of Managing Big Data.

Please consider taking courses at the TDWI World Conference in Boston, October 20–25, 2013. Enroll online.

============================

Philip Russom is the research director for data management at TDWI. You can reach him at [email protected] or follow him as @prussom on Twitter.

Posted by Philip Russom, Ph.D.

By Philip Russom, TDWI Research Director

[NOTE -- My new TDWI report “Integrating Hadoop into Business Intelligence (BI) and Data Warehousing (DW)” (Hadoop4BIDW) is finished and will be published in early April. I will broadcast the report’s Webinar on April 9, 2013 at noon ET. In the meantime, I’ll leak a few of the report’s findings in this blog series. Search Twitter for #Hadoop, #TDWI, and #Hadoop4BIDW to find other leaks. Enjoy!]

Hadoop is still rather young, so it needs a number of upgrades to make it more palatable to BI professionals and mainstream organizations in general. Luckily, a number of substantial improvements are coming.

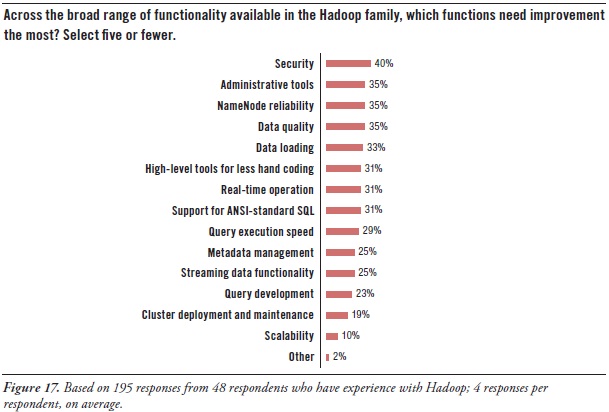

Hadoop users’ greatest needs for advancement concern security, tools, and high availability:

Security. Hadoop today includes a number of security features, such as file-permission checks and access control for job queues. But the preferred function seems to be Service Level Authorization, which is the initial authorization mechanism that ensures clients connecting to a particular Hadoop service have the necessary, pre-configured permissions. Furthermore, add-on products that provide encryption or other security measures are available for Hadoop from a few third-party vendors. Even so, there’s a need for more granular security at the table level in HBase, Hive, and HCatalog.

Administration. As noted earlier, much of Hadoop’s current evolution is at the tool level, not so much in the HDFS platform. After security, users’ most pressing need is for better administrative tools (35% in Figure 17 above), especially for cluster deployment and maintenance (19%). The good news is that a few vendors offer tools for Hadoop administration, and a major upgrade of open-source Ambari is coming soon.

High availability. HDFS has a good reputation for reliability, due to the redundancy and failover mechanisms of the cluster it sits atop. However, HDFS is currently not a high availability (HA) system, because its architecture centers around NameNode. It’s the directory tree of all files in the file system, and it tracks where file data is kept across the cluster. The problem is that NameNode is a single point of failure. While the loss of any other node (intermittently or permanently) does not result in data loss, the loss of NameNode brings the cluster down. The permanent loss of NameNode data would render the cluster's HDFS inoperable, even after restarting NameNode.

A BackupNameNode is planned to provide HA for NameNode, but Apache needs more and better contributions from the open source community before it’s operational. There’s also Hadoop SecondaryNameNode (which provides a partial, latent backup of NameNode) and third-party patches, but these fall short of true HA. In the meantime, Hadoop users protect themselves by putting NameNode on especially robust hardware and by regularly backing up NameNode’s directory tree and other metadata.
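The single-point-of-failure argument above can be illustrated with a toy model. This is only a sketch under stated assumptions, not Hadoop code: the class, its fields, and the round-robin placement are hypothetical simplifications. Block replicas are spread over several data nodes, while one name node holds the only map from file names to blocks.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set


@dataclass
class ToyCluster:
    """Toy stand-in for a cluster; not the real HDFS design or API."""

    replication: int = 3
    # data node name -> block ids stored on that node
    data_nodes: Dict[str, Set[str]] = field(default_factory=dict)
    # file name -> ordered block ids; None models lost name node metadata
    name_node: Optional[Dict[str, List[str]]] = field(default_factory=dict)

    def write(self, file_name: str, blocks: List[str]) -> None:
        """Place each block on `replication` nodes, round-robin."""
        if self.name_node is None:
            raise RuntimeError("name node metadata is gone")
        nodes = sorted(self.data_nodes)
        for i, block in enumerate(blocks):
            for r in range(self.replication):
                self.data_nodes[nodes[(i + r) % len(nodes)]].add(block)
        self.name_node[file_name] = list(blocks)

    def can_read(self, file_name: str) -> bool:
        """A file is readable if its block list is known and every
        block survives on at least one remaining data node."""
        if self.name_node is None:
            return False
        blocks = self.name_node.get(file_name, [])
        return bool(blocks) and all(
            any(block in held for held in self.data_nodes.values())
            for block in blocks
        )
```

In this model, deleting one entry from `data_nodes` leaves a file readable because other replicas remain, whereas setting `name_node` to `None` makes every file unreadable even though all the blocks are still on disk. That is the asymmetry the paragraphs above describe.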

Latency issues. A number of respondents are hoping for improvements that overcome the data latency of batch-oriented Hadoop. They want Hadoop to support real-time operation (31%), fast query execution (29%), and streaming data (25%). These will be addressed soon by improvements to Hadoop products like MapReduce, Hive, and HBase, plus the new Impala query engine.

Development tools. Again, many users need better tools for Hadoop, including development tools for metadata management (25%), query design (23%), and ANSI-standard SQL (31%), plus a higher-level approach that results in less hand coding (31%).

Want to learn more about big data and its management? Take courses at the TDWI World Conference in Chicago, May 5-10, 2013.

Enroll online.

Posted by Philip Russom, Ph.D.

Happy New Year to the TDWI Community! As we head into 2013, it’s clear that organizations will continue to face unpredictable economic currents and regulatory pressures, and will require better intelligence and faster decision processes. TDWI has just published a new Best Practices Report that I wrote, “Achieving Greater Agility with Business Intelligence.” This report focuses on how organizations can develop and deploy BI, analytics, and data warehousing to improve flexibility and decision-making speed. I hope you can attend our upcoming Webinar presentation of the report, to be held on January 15, which will look in-depth at the research findings and offer best practices recommendations for increasing agility.

Three key areas of innovation in technologies and practices that I covered in the report will clearly be important as organizations aim for higher agility in 2013. These include the following:

Managed, self-service BI and analytic data discovery of structured and unstructured data: Decision makers are demanding tools that will allow them to access, analyze, profile, cleanse, transform, and share information without having to wait for IT. They will need access to more than just historical, structured data found in traditional systems. Unified access to both structured and unstructured data is growing in importance as decision makers seek to perform complete, context-rich analysis against big data.

New data warehousing and integration options, including virtualization: Data integration can be the source of challenging and expensive problems. Organizations are evaluating the range of options, including data federation and virtualization, that can give users managed self-service. These could allow users to work more iteratively with IT to create comprehensive views of data in place without having to physically extract and move it into an application, data mart, or specialized data store.

Agile development methods: The use of agile methods, now a mainstream trend in software development, is having an increasing impact on BI and data warehousing. Organizations are proving that by implementing Scrum and other techniques, they can remove a good deal of the wait and waste of traditional development processes.

In the report, we found that most organizations regard their agility – that is, their ability to adjust to change and take advantage of emerging opportunities – as merely “average.” No doubt, organizations seeking new competitive advantages in 2013 will demand better than that. They will be looking to their BI, analytics, and data warehousing systems to help them reach a higher level of agility.

Posted by David Stodder

Business decision cycles are turning faster, and to keep up, executives and managers are in constant need of new data and new types of reporting and analysis. Dynamic organizations are demanding greater agility from their business intelligence (BI) systems. TDWI Research is currently examining how well organizations are able to adjust their BI and data warehouse (DW) development, deployment, and management to enable greater agility.

How is your organization doing in addressing user demands for more agile BI/DW? What are your toughest challenges? We would very much like to include your opinions and insights in the TDWI Research survey, which is live right now. Thank you to everyone who has already participated in the survey. As part of my research for what will ultimately be a TDWI Best Practices Report, I am also conducting interviews with professionals to understand their experiences with agile development methods for BI/DW and with deploying self-service BI, data virtualization, and other technologies that are helping organizations become more agile. If you are interested, please drop me a line at [email protected].

With survey data coming in, it’s hard not to take a peek at what we have so far. Respondents say that the business factors having the most disruptive impact, requiring greater business and IT agility, are increased competition (74%, with 20% calling it “very disruptive”), economic or global instability (68%), shorter decision cycles (65%), and technology modernization (62%). Changes in customer behavior form the fifth highest factor, with 60%. The largest percentage of respondents (45%) say that their organizations are “average” at adjusting to change and taking advantage of emerging opportunities, with 10% saying that their organization is “excellent,” 31% saying “good,” and 14% saying “poor.”

Other questions in the survey will provide data for deeper insight into where challenges are most acute in terms of BI/DW development processes and technologies. One of the biggest issues regarding agility is, of course, the adoption of agile software development methods. Ralph Hughes, chief systems architect for Ceregenics, and I will be speaking on this topic on September 20 at the upcoming TDWI World Conference in Boston. If you would like to hear a preview of what we will be talking about, including the ongoing research effort into use of agile methods, listen to our recent Webinar.

Achieving greater agility through better methods and technology is a hot area of interest in the TDWI community. Let us know your views on this important topic, both by taking the research survey and by getting in touch.

Posted by David Stodder

By Philip Russom

Before January runs out, I thought I should tender a few prognostications for 2012. Sorry to be so late with this, but I have a demanding day job. Without further ado, here are a few trends, practices, and changes I feel we can expect in 2012.

Big data will get bigger. But, then, you knew that. Enough said.

The connection between big data and advanced analytics will get even stronger. My base assumption is that advanced analytics has become such an important priority for user organizations that it’s influencing most of what we do in business intelligence (BI), data warehousing (DW), and data management (DM). It even influences our attitudes toward big data. After all, the current frenzy – which will become more operationalized than ad hoc in 2012 – is to apply advanced analytic techniques to big data. In other words, don’t do one without the other, if you’re a BI professional.

From problem to opportunity. The survey for my recent TDWI report on Big Data Analytics shows that 70% of organizations already think of big data as an asset to be leveraged, largely through advanced analytics. In 2012, the other 30% will come around.

From hoarding to collecting. As a devotee of irony, I’m amused to see reality TV shows about collectibles and hoarding run back-to-back. Practices lauded in the former are abhorred in the latter, yet the line between collecting and hoarding is a thin one. Big data is a case in point. Many organizations have hoarded Web logs, RFID streams, and other big data sets for years. The same organizations are now turning the corner into collecting these with a dedicated purpose, namely analytics.

Advanced analytics will become as commonplace as OLAP. Okay, I admit that I’m exaggerating for dramatic effect. But, I have to say that big data alone has driven many organizations beyond OLAP into advanced forms of analytics, namely those based on mining, statistics, complex SQL, and natural language processing. This trend has been running for almost five years; there may be another five in it.

God is in the details. Or is the devil in the details? I guess it depends on what we’re talking about. With big data analytics, expect to see far more granular detail than ever before. For example, most 360-degree customer views today include hundreds of customer attributes. Big data can bump that up to thousands of attributes, which in turn provides greater detail and precision for customer-base segmentation and other customer analytics, both old and new.

Multi-structured data. Are you as sick of the “structured data versus unstructured data” comparison as I am? This tired construct doesn’t really work with big data, because it’s often a mix of structured, semi-structured, and unstructured data, plus gradations among these. I like the term “multi-structured data” (which I admit that I picked up from Teradata folks) because the term covers the whole range and it reminds us that big data is often a kind of mashup. To get full business value out of big data through analytics, more user organizations will invest in people skills and tools that span the full range of multi-structured data.

You will change your data warehouse architecture. At least, you will if you’re truly satisfying the requirements of big data analytics. Let’s be honest. Most EDWs are designed and optimized by their technical users for reporting, performance management, OLAP, and not much else. This is both a user design issue and a vendor platform issue. In recent years, I’ve seen tons of organizations rearchitect their EDWs (and sometimes swap platforms) to accommodate massive big data, multi-structured data, real-time big streams, and the demanding workloads of advanced analytics. This painful-but-necessary trend is far from over.

I’m stopping here because I’ve reached my target word count. And my growling stomach says it’s lunch time. But you get the idea. The business value of advanced analytics and the nuggets to be mined from big data have driven a lot of change recently, and will continue to do so throughout 2012.

SUGGESTED READING:

For a detailed discussion, see the TDWI Best Practices Report, titled Big Data Analytics, which is available in a PDF file via a free download.

You can also replay my TDWI Webinar, where I present the findings of the Big Data Analytics report.

For a discussion of similar issues, download the TDWI Checklist Report, titled Hadoop: Revealing Its True Value for Business Intelligence.

And you can replay last month’s TDWI Webinar, in which I led a panel of vendor representatives in a discussion of Hadoop and related technologies.

Philip Russom is the research director for data management at TDWI. You can reach him at [email protected] or follow him as @prussom on Twitter.

Posted by Philip Russom, Ph.D.

Blog by Philip Russom

Research Director for Data Management, TDWI

The current hype and hubbub around big data analytics has shifted our focus to what’s usually called “advanced analytics.” That’s an umbrella term for analytic techniques and tool types based on data mining, statistical analysis, or complex SQL – sometimes natural language processing and artificial intelligence, as well.

The term has been around since the late 1990s, so you’d think I’d get used to it. But I have to admit that the term “advanced analytics” rubs me the wrong way for two reasons:

First, it’s not a good description of what users are doing or what the technology does. Instead of “advanced analytics,” a better term would be “discovery analytics,” because that’s what users are doing. Or we could call it “exploratory analytics.” In other words, the user is typically a business analyst who is exploring data broadly to discover new business facts that no one in the enterprise knew before. These facts can then be turned into an analytic model or some equivalent for tracking over time.

Second, the thing that chafes me most is that the way the term “advanced analytics” has been applied for fifteen years excludes online analytic processing (OLAP). Huh!? Does that mean that OLAP is “primitive analytics”? Is OLAP somehow incapable of being advanced?

I personally don’t think so. In fact, depending on how you design and implement it, OLAP can be quite advanced. For example, OLAP is very much about dimensions. In the 90s, eight dimensions was considered an advanced implementation. Nowadays I regularly talk with people who have twenty or more. I realize there’s a difference between advanced and mature. But I have to say that I’ve seen lots of mature OLAP implementations that support hundreds of cubes, hundreds of OLAP reports, and thousands of users. Over the years, different approaches to OLAP (multidimensional, relational, desktop, etc.) have consolidated into a hybrid OLAP, such that most vendor products today are quite mature, feature rich, and flexible.

Here’s another, related issue. While researching a new TDWI report on big data analytics, I ran across a few people (users, consultants, and vendors) who think that “advanced analytics” (or whatever you want to call it) will render OLAP obsolete. Therefore, user organizations should expunge OLAP from their BI portfolios. Uh, no. I don’t see that happening.

In defense of OLAP, it’s by far the most common form of analytics in BI today, and for good reasons. Once you get used to multidimensional thinking, OLAP is very natural, because most business questions are themselves multidimensional. For example, “What are western region sales revenues in Q4 2010?” intersects dimensions for geography, function, money, and time. Discoveries made in OLAP are easily “institutionalized” or “operationalized” (much more so than advanced analytics), so OLAP analyses are repeated over time with consistency. Since dimensions are easily expressed as parameters, an OLAP-based report can be as easy to use as a parameterized report, thereby putting OLAP-based analytics within the comprehension of a vast range of possible end-users.
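To make the “dimensions as parameters” point concrete, here is a minimal sketch in plain Python. It is not how any OLAP product works internally; the fact rows, the dimension names, and the helpers `slice_total` and `rollup` are all made up for illustration. The sample question about western region sales revenues in Q4 2010 becomes a call with one parameter per dimension.

```python
from collections import defaultdict

# Hypothetical fact rows: dimension values plus one revenue measure.
FACTS = [
    {"region": "West", "quarter": "2010-Q4", "function": "Sales", "revenue": 120.0},
    {"region": "West", "quarter": "2010-Q4", "function": "Sales", "revenue": 80.0},
    {"region": "West", "quarter": "2010-Q3", "function": "Sales", "revenue": 95.0},
    {"region": "East", "quarter": "2010-Q4", "function": "Sales", "revenue": 150.0},
    {"region": "West", "quarter": "2010-Q4", "function": "Service", "revenue": 30.0},
]


def slice_total(facts, measure, **filters):
    """Sum a measure over the rows whose dimension values match every filter."""
    return sum(
        row[measure]
        for row in facts
        if all(row[dim] == value for dim, value in filters.items())
    )


def rollup(facts, measure, by):
    """Total a measure for each member of a single dimension."""
    totals = defaultdict(float)
    for row in facts:
        totals[row[by]] += row[measure]
    return dict(totals)


# "What are western region sales revenues in Q4 2010?"
western_q4_sales = slice_total(
    FACTS, "revenue", region="West", quarter="2010-Q4", function="Sales"
)
print(western_q4_sales)
print(rollup(FACTS, "revenue", by="region"))
```

Changing a parameter (say, `region="East"`) repeats the same analysis on a different slice, which is roughly why an OLAP-based report can feel like a parameterized report to the end user.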

The scope of discovery of an analytic method seems to be an important concern right now, as seen in the current fascination with big data analytics. In that context, a possible limitation of OLAP is that most implementations are tightly coupled to datasets called cubes. If the information someone hopes to discover is not in a cube, then that can be a problem. Even so, so-called relational OLAP can be a solution, and OLAP tools are so friendly nowadays that just about anyone can create a cube. Depending on how an OLAP implementation is designed and which vendor tools are used, a cube can limit the scope of discovery, just as any analytic dataset can – even if it’s multi-terabyte big data.

In my mind, advanced analytics is very much about open-ended exploration and discovery in large volumes of fairly raw source data. But OLAP is about a more controlled discovery of combinations of carefully prepared dimensional datasets. The way I see it: a cube is a closed system that enables combinatorial analytics. Given the richness of cubes users are designing nowadays, there’s a gargantuan number of combinations for a wide range of users to explore.

So, OLAP’s not going away. Users would be nuts to abandon their large investments in such a handy technology. And it’s like most situations in IT. Few things go away. Organizations just keep adding more tool types and best practices to their portfolios. Therefore, user organizations should expect to maintain their useful investments in OLAP, while also digging deeper into other forms of exploratory and discovery analytics.

So, what do you think, folks? Let me know. Thanks!

Posted by Philip Russom, Ph.D.

To succeed with business intelligence (BI), sometimes you have to buck tradition, especially if you work at a fast-paced company in a volatile industry.

And that’s what Eric Colson did when he took the helm of Netflix’s BI team last year. He quickly discovered that his team of BI specialists moved too slowly to successfully meet business needs. “Coordination costs [among our BI specialists] were killing us,” says Colson.

Subsequently, Colson introduced the notion of a “spanner”: a BI developer who builds an entire BI solution singlehandedly. The person “spans” all BI domains, from gathering requirements to sourcing, profiling, and modeling data to ETL and report development to metadata management and QA testing.

Colson claims that one spanner works much faster and more effectively than a team of specialists. They work faster because they don’t have to wait for other people or teams to complete tasks or spend time in meetings coordinating development. They work more effectively because they are not biased to any one layer of the BI stack and thus embed rules where most appropriate. “A traditional BI team often makes changes in the wrong layer because no one sees the big picture,” Colson says.

Also, since spanners aren’t bound by a written contract (i.e., requirements document) created by someone else, they are free to make course corrections as they go along and “discover” the optimal solution as it unfolds. This degree of autonomy also means that spanners have higher job satisfaction and are more dedicated and accountable. One final benefit: there’s no finger-pointing if something fails.

Not For Everyone

Of course, there are downsides to spanning. First, not every developer is capable of spanning. Some don’t have the skills, and others don’t have the interest. “We have lost some people,” admits Colson. Finding the right people isn’t easy, and you must pay a premium in salary to attract and retain them. Plus, software license costs increase because each spanner needs a full license to each BI tool in your stack.

Second, not every company is well suited to spanners. Many companies won’t allocate enough money to attract and retain spanners. And mature companies in regulated or risk-averse industries may work better with a traditional BI organization and development approach.

Simplicity

Nonetheless, experience shows that the simplest solution is often the best one. In that regard, spanners could be the wave of the future.

Colson says that using spanners eliminates much of the complexity of running BI programs and development projects. The only thing you need is a unifying data model and BI platform and a set of common principles, such as “avoid putting logic in code” or “account ID is a fundamental unifier.” The rest falls into the hands of the spanners, who rely on their skills, experience, and judgment to create robust local applications within an enterprise architecture. Thus, with spanners, you no longer need business requirement analysts or requirements documents, a BI methodology, project managers, or a QA team, says Colson.

This is certainly pretty radical stuff, but Colson has proven that thinking and acting outside the box works, at least at Netflix. Perhaps it’s time you consider following suit!


It’s easy to get mesmerized by analytics. The science behind it can be intimidating, causing some people to abandon common sense when making decisions. Just ask financial executives of major investment houses. Blinded by complex risk models, many took on too much debt and then faltered as the economy tightened in 2008.

Relying too much on analytics is just as disastrous as ignoring it and running the business on gut instinct alone. The key is to blend analytics and instinct—or art and science, if you will—to optimize corporate decision making. Interestingly, several business intelligence (BI) leaders use the phrase “art and science” when discussing best practices for implementing analytics.

Stocking Auto Parts

For example, Advance Auto Parts, a $5 billion retailer of auto parts, recently began using analytical models to help it move from a “one size fits all” strategy for stocking inventory at its 3,500 stores to customized inventory for each store based on the characteristics of its local market. This store-specific assortment strategy has reduced non-working inventory from 20% to 4%, generating millions in cost savings.

“By blending art and science, we gain the best of each and minimize the downsides,” said Bill Robinette, director of business intelligence at Advance Auto Parts in a presentation he delivered this week at TDWI’s “Deep Analytics for Big Data” Summit in San Diego.

Advance Auto Parts sells about 600,000 unique items—everything from windshield wipers and car wax to transmissions and engines—but each store can only stock about 18,000 parts. To determine the best items that each store should carry, the company combines business rules based on the accumulated experience of store managers and executives with analytical rules derived from logistic regressions, decision trees, and neural network algorithms.

This blend of business and analytical rules has proven more accurate than using either type alone, says Robinette. Plus, it’s easier to sell analytics to long-time managers and executives if they know the models are based on commonsense rules that they created. And of course, the results speak for themselves and managers now wholeheartedly back the system.
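As a rough illustration of what blending the two kinds of rules might look like, here is a minimal Python sketch. It is not Advance Auto Parts’ method: the function `choose_assortment`, the item names, and the scores are hypothetical, and the scores merely stand in for whatever a regression, decision tree, or neural network would produce. Business rules force items in or out; model scores fill the remaining shelf slots.

```python
def choose_assortment(model_scores, capacity, must_stock=(), never_stock=()):
    """Pick up to `capacity` items for one store.

    model_scores: dict of item -> score from an analytical model (assumed input).
    must_stock / never_stock: business rules supplied by managers (assumed input).
    """
    must, never = set(must_stock), set(never_stock)

    # Business rules first: forced-in items claim slots before any scored item.
    chosen = sorted(must - never)[:capacity]

    # Analytical rules next: fill the remaining slots by descending model score.
    candidates = [
        item for item in model_scores if item not in must and item not in never
    ]
    candidates.sort(key=lambda item: model_scores[item], reverse=True)
    chosen.extend(candidates[: capacity - len(chosen)])
    return chosen


# Hypothetical scores for one store with room for three items.
scores = {"wiper": 0.9, "wax": 0.4, "engine": 0.1, "snow_chain": 0.7, "battery": 0.8}
print(choose_assortment(scores, capacity=3, must_stock={"wax"}, never_stock={"snow_chain"}))
```

In this toy run the manager’s rule keeps car wax on the shelf despite its modest score and keeps snow chains off it, while the model decides everything else.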

HIPPOs and Groundhogs

Echoing the theme of blending art and science, Ken Rudin, general manager of analytics and social networking at Zynga, an online gaming company, recently discussed the dangers of making decisions solely using intuition or analytics at TDWI’s BI Executive Summit in August.

Rudin uses the term HiPPO to explain the dangers of making decisions without facts. A HiPPO is the highest paid person’s opinion in the room. “In the absence of data, HiPPOs always win the discussion,” says Rudin. Zynga now examines every idea proposed by game designers using A/B testing on its Web site to assess whether the idea will help the company increase player retention, which is a key corporate objective, among other things.
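For readers who haven’t run an A/B test, here is a minimal sketch of one common way to compare retention between two variants: a two-proportion z-test, written with only the Python standard library. The source doesn’t say how Zynga evaluates its tests, so the function name and the player counts below are purely hypothetical.

```python
import math


def two_proportion_z_test(retained_a, n_a, retained_b, n_b):
    """Return (z, two-sided p-value) for the difference in retention rates B - A."""
    p_a = retained_a / n_a
    p_b = retained_b / n_b
    # Pooled rate under the null hypothesis that both variants retain equally well.
    pooled = (retained_a + retained_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: 1,000 players per variant, 400 vs. 450 retained.
z, p = two_proportion_z_test(400, 1000, 450, 1000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value suggests the difference in retention is unlikely to be chance alone. It doesn’t say why players behaved that way, which is exactly the gap Rudin warns about next.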

Conversely, Rudin says companies should avoid the “Groundhog” effect. This is when people focus too much on scientific analysis and data when making decisions. Groundhogs get caught up in the details and fail to see the big picture. As a result, they make suboptimal decisions.

For example, A/B testing revealed that players of Zynga’s Treasure Island game preferred smaller islands to minimize the amount of time they had to dig for buried treasure. Consequently, Zynga’s game designers made islands smaller to reduce churn, but each time they did, player behavior didn’t change much. What the testing missed, says Rudin, was that the players didn’t mind digging as long as they were entertained along the way, with clues for solving puzzles or notes left by friends, for example.

Happiness and fulfillment in life come from achieving balance, often by blending opposites into a unified whole. The same is true in BI. Successful BI managers combine art and science (or intuition and analytics) to achieve optimal value from their analytical investments.


As a parent, by the time you have your second or third child, you know which battles to fight and which to avoid. It’s time we did the same in business intelligence (BI). For almost two decades we’ve tried to shoehorn both casual users and power users into the same BI architecture. But the two don’t play nicely together. Given advances in technology and the explosion in data volumes and types, it’s time we separate them and create dual BI architectures.

Mapping Architectures

Casual users are executives, managers, and front-line workers who periodically consume information created by others. They monitor daily or weekly reports and occasionally dig deeper to analyze an issue or get details. Generally, a well-designed interactive dashboard or parameterized report backed by a data warehouse with a well-designed dimensional schema is sufficient to meet these information needs. Business users who want to go a step further and build ad hoc views or reports for themselves and peers—whom I call Super Users—are best served with a semantic layer running against the same data warehouse.

Power users, on the other hand, explore data to answer unanticipated questions and issues. No predefined dashboard, report, or semantic layer is sufficient to meet their needs. They need to access data both in the data warehouse and outside of it, beyond the easy reach of most BI tools and predefined metrics and entities. They then need to dump the data into an analytical tool (e.g. Excel, SAS) so they can merge and model the data in novel and unique ways.

For years, we’ve tried to reconcile casual users and power users within the same BI architecture, but it’s a losing cause. Power users generate “runaway” queries that bog down performance in the data warehouse, and they generate hundreds or thousands of reports that overwhelm casual users. As a result, casual users reject self-service BI and revert to old habits of requesting custom reports from IT or relying on gut feel. Meanwhile, power users exploit BI tools to proliferate spreadmarts and renegade data marts that undermine enterprise information consistency while racking up millions in hidden costs.

Time for a New Analytic Sandbox

Some forward-looking BI teams are now creating a separate analytic architecture to meet the needs of their most extreme power users. And they are relegating their data warehouses and BI tools to handle standard reporting, monitoring, and lightweight analysis.

Compared to a traditional data warehousing environment, an analytic architecture is much more free-form with fewer rules of engagement. Data does not need rigorous cleaning, mapping, or modeling, and hardcore business analysts don’t need semantic guardrails to access the data. In an analytic architecture, the onus is on the business analyst to understand source data, apply appropriate filters, and make sense of the output. Certainly, it is a “buyer beware” environment. As such, there may only be a handful of analysts in your company who are capable of using this architecture. But the insights they generate may make the endeavor well worth the effort and expense.

Types of Analytic Architectures

There are many ways to build an analytic architecture. Below are three approaches. Some BI teams implement one approach; others mix all three.

Physical Sandbox. One type of analytic architecture uses a new analytic platform (a data warehousing appliance, columnar database, or massively parallel processing (MPP) database) to create a separate physical sandbox for hardcore business analysts and analytical modelers. BI teams offload complex queries from the data warehouse to these turbocharged analytical environments, and they enable analysts to upload personal or external data to those systems. This safeguards the data warehouse from runaway queries and liberates business analysts to explore large volumes of heterogeneous data without limit in a centrally managed information environment.

Virtual Sandbox. Another approach is to implement virtual sandboxes inside the data warehouse using workload management utilities. Business analysts can upload their own data to these virtual partitions, mix it with corporate data, and run complex SQL queries with impunity. These virtual sandboxes require delicate handling to keep the two populations (casual and power users) from encroaching on each other’s processing territories. But compared to a physical sandbox, it avoids having to replicate and distribute corporate data to a secondary environment that runs on a non-standard platform.

Desktop Sandboxes. Other BI teams are more courageous (or desperate) and have decided to give their hardcore analysts powerful, in-memory, desktop databases (e.g., Microsoft PowerPivot, Lyzasoft, QlikTech, Tableau, or Spotfire) into which they can download data sets from the data warehouse and other sources to explore the data at the speed of thought. Analysts get a high degree of local control and fast performance but give up data scalability compared to the other two approaches. The challenge here is preventing analysts from publishing the results of their analyses in an ad hoc manner that undermines information consistency for the enterprise.

Dual, Not Dueling Architectures

As an industry, it’s time we acknowledge the obvious: our traditional data warehousing architectures are excellent for managing reports and dashboards against standard corporate data, but they are suboptimal for managing ad hoc requests against heterogeneous data. We need dual BI architectures: one geared to casual users that supports standard, interactive reports and dashboards and lightweight analyses; and another tailored to hardcore business analysts that supports complex queries against large volumes of data.

Dual architectures do not mean dueling architectures. The two environments are complementary, not conflicting. Although companies will need to invest additional time, money, and people to manage both environments, the payoff is worth the investment: companies will get higher rates of BI usage among casual users and more game-changing insights from hardcore power users.
