The Role of Hardware in High-Performance Data Warehousing

By Philip Russom, TDWI Research Director

[NOTE -- My new TDWI report about High-Performance Data Warehousing (HiPer DW) is finished and will be published in October. The report’s Webinar will broadcast on October 9, 2012. In the meantime, I’ll leak a few of the report’s findings in this blog series. Search Twitter for #HiPerDW to find other leaks. Enjoy!]

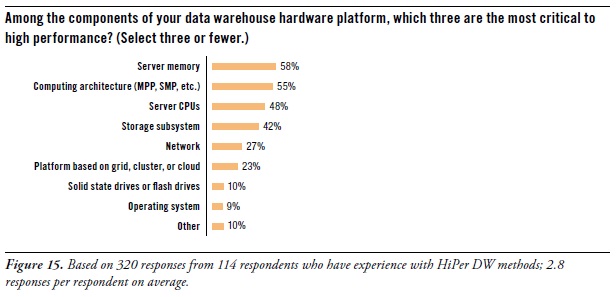

Let’s focus for a moment on the hardware components of a data warehouse platform. After all, many of the new capabilities and high performance of data warehouses come from recent advances in computer hardware of different types. To determine which hardware components contribute most to HiPer DW, the survey asked: “Among the components of your data warehouse hardware platform, which three are the most critical to high performance?” (See Figure 15. [shown above])

You may notice that the database management system (DBMS) is omitted from the list of multiple answers for this question. That’s because a DBMS is enterprise software, and this question is about hardware. However, let’s note that – in other TDWI surveys – respondents made it clear that they find the DBMS to be the most critical component of a DW platform, whether for high performance, data modeling possibilities, BI/DI tool compatibility, in-database processing logic, storage strategies, or administration.

Performance priorities for hardware are server memory, computing architecture, CPUs, and storage.

Server memory topped respondents’ lists as most critical to high performance (58% of survey respondents). Since 64-bit computing arrived ten years ago, data warehouses (like other platforms in IT) have migrated away from 32-bit platform components, mostly to capitalize on the massive addressable memory spaces of 64-bit systems. As the price of server memory continues to drop, more organizations upgrade their DW servers with additional memory; 256 gigabytes seems common, although some systems are treated to a terabyte or more. To a lesser degree, users are also upgrading ETL and enterprise BI servers. “Big memory” speeds up complex SQL, joins, and analytic model rescores, because more of the work stays in memory and less I/O is needed to land data to disk.
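To put the 32-bit versus 64-bit point in numbers, here is a minimal sketch of the arithmetic. It assumes a flat, byte-addressed memory space, which is an idealization: real CPUs and operating systems typically expose fewer address bits than the pointer width suggests. The function and constant names are illustrative only and do not come from the survey or the report.

```python
# Theoretical upper bound on byte-addressable memory for a given address width.
# Assumption: flat, byte-addressed space; actual hardware limits are lower.

GIB = 2 ** 30  # bytes in one gibibyte
TIB = 2 ** 40  # bytes in one tebibyte


def addressable_bytes(address_bits: int) -> int:
    """Return the number of distinct byte addresses for the given width."""
    return 2 ** address_bits


limit_32 = addressable_bytes(32)
limit_64 = addressable_bytes(64)

print(f"32-bit limit: {limit_32 // GIB} GiB")
print(f"64-bit limit: {limit_64 // TIB:,} TiB")

# The 256 GB server mentioned above cannot be addressed in a flat 32-bit space.
fits_in_32_bit = 256 * GIB <= limit_32
print(f"256 GiB fits in a 32-bit address space: {fits_in_32_bit}")
```

Under these assumptions, a 32-bit address space tops out at 4 GiB, far below the memory sizes respondents describe, which is consistent with the migration to 64-bit platforms noted above.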

Computing architecture (55%) also determines the level of performance. In other TDWI surveys, respondents have voiced their frustration at using symmetric multiprocessing (SMP) systems, which were originally designed for operational applications and transactional servers. The DW community definitely prefers massively parallel processing (MPP) systems, which are more conducive to the large dataset processing of data warehousing.

Server CPUs (48%) are obvious contributors to HiPer DW. Moore’s Law once again takes us to a higher level of performance, this time with multi-core CPUs at reasonable prices.

We sometimes forget about storage (42%) as a platform component. Perhaps that’s because so many organizations now have central IT departments that provide storage as an ample enterprise resource, similar to how they’ve provided networks for decades. The importance of storage grows as big data grows. Luckily, storage has kept up with most of the criteria of Moore’s Law, with greater capacity, bandwidth, reliability, and capabilities, while also dropping in price. However, disk performance languished for decades (in terms of seek speeds), until the recent invention of solid-state drives, which are slowly finding their way into storage systems.

USER STORY -- Caching OLAP cubes in server memory provides high-performance drill down. “Within our enterprise BI program, we have business users who depend on OLAP-based dashboards for making daily strategic and tactical decisions,” said the senior director of BI architecture at a media firm. “To enable drill down from management dashboards into cube details, we maintain cubes in server memory, and we refresh them daily. We’ve only been doing this a few months, as part of a pilot program. The performance is good, and we received very positive feedback from the users. So it looks like we’ll do this for other dashboards in the future. To prepare for that eventuality, we just upgraded the memory in our enterprise BI servers.”

On a related topic, one of the experts interviewed for this report had this to add: “As memory chip density increases, the price comes down. Price alone keeps most server memory down to one terabyte or less today. But multi-terabyte server memory will be common in a few years.”

Want more? Register for my HiPer DW Webinar, coming up Oct. 9 at noon ET.

Read other blogs in this series:

Reasons for Developing HiPer DW

Opportunities for HiPer DW

The Four Dimensions of HiPer DW

Defining HiPer DW

High Performance: The Secret of Success and Survival

Posted by Philip Russom, Ph.D.