Generative AI and Its Implications for Data and Analytics

Generative artificial intelligence has captured the imaginations of people around the world. Though not entirely new, generative AI is evolving so rapidly that technology professionals need to track its maturation closely to effectively evaluate the associated opportunities and risks. Here are the basics you need to know.

What Is Generative AI?

Put simply, generative AI applications automate the generation of data-driven outputs. The technology uses machine learning models to find statistical patterns within training data and then generate new outputs -- such as documents, images, and videos -- that are consistent with these patterns.

Popular generative AI use cases include intelligent chatbots, personalized marketing messages, and drug discovery. One of the most noteworthy implementations of generative AI is ChatGPT, an intelligent chatbot released in November 2022 by OpenAI. The widely adopted ChatGPT, powered by a generative AI technology known as a large language model (LLM), can summarize documents, write marketing copy, roll up search results, and support other complex content creation tasks.

How Do Large Language Models Power Generative AI?

An LLM is a vast neural network that operates a bit like “autocorrect on steroids.” It has been trained to predict the next word in a sentence, responding to a query-like “prompt” by drawing on the patterns in the text corpus on which it was trained. Through an iterative training process, the LLM learns the probabilities of word sequences appearing together in natural language and uses them to select words that are appropriate in a generated response to that prompt.
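To make next-word prediction concrete, here is a minimal sketch in Python. It is not how an LLM is implemented: it swaps the neural network for a simple bigram count table, and the corpus, function names, and parameters are all hypothetical. It only illustrates the idea of learning word-sequence probabilities from training text and sampling from them to extend a prompt.

```python
import random
from collections import Counter, defaultdict


def train_bigram_model(corpus):
    """Count how often each word follows each other word in the corpus."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current_word, next_word in zip(words, words[1:]):
            counts[current_word][next_word] += 1
    return counts


def next_word_probabilities(counts, word):
    """Turn the raw follow-counts for `word` into a probability distribution."""
    followers = counts.get(word, Counter())
    total = sum(followers.values())
    return {w: c / total for w, c in followers.items()} if total else {}


def generate(counts, prompt, max_words=5, seed=0):
    """Extend the prompt by repeatedly sampling a next word by probability."""
    rng = random.Random(seed)
    words = prompt.lower().split()
    for _ in range(max_words):
        probs = next_word_probabilities(counts, words[-1])
        if not probs:
            break  # the last word never appeared with a follower in training
        choices, weights = zip(*probs.items())
        words.append(rng.choices(choices, weights=weights)[0])
    return " ".join(words)


# Hypothetical toy training corpus (an assumption for illustration only).
corpus = [
    "the model predicts the next word",
    "the model learns word patterns",
    "the next word depends on the prompt",
]
model = train_bigram_model(corpus)
print(next_word_probabilities(model, "the"))
print(generate(model, "the model"))
```

In this toy corpus, "the" is followed by "model" twice, "next" twice, and "prompt" once, so the learned probabilities are 0.4, 0.4, and 0.2. A real LLM conditions on far more context than the single preceding word, but the generate-by-sampling loop is the same in spirit.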

Another popular generative AI use case is automated generation of program code. For data and analytics professionals, some LLM-driven generative AI applications support generation of Python code. Some also generate synthetic data for machine learning use cases where authentic, from-the-source data is unavailable, sparse, biased, privacy protected, or too costly to acquire. Generative AI tools, such as LLMs, can also analyze and visualize data, making them a worthy addition to many data professionals’ self-service, no-code business analytics toolkits.
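As a rough illustration of synthetic data generation, the sketch below fits simple per-column statistics to a handful of records and samples new rows from them. This is a deliberately simple statistical stand-in, not an LLM-based generator, and the column names, sample values, and function names are assumptions made up for the example.

```python
import random
import statistics


def fit_column_stats(rows):
    """Estimate the mean and standard deviation of each numeric column."""
    stats = {}
    for col in rows[0].keys():
        values = [row[col] for row in rows]
        stats[col] = (statistics.mean(values), statistics.stdev(values))
    return stats


def generate_synthetic_rows(stats, n_rows, seed=42):
    """Sample new rows from an independent Gaussian fitted to each column."""
    rng = random.Random(seed)
    return [
        {col: rng.gauss(mean, std) for col, (mean, std) in stats.items()}
        for _ in range(n_rows)
    ]


# Hypothetical "real" records (assumed values, for illustration only).
real_rows = [
    {"age": 34, "monthly_spend": 120.0},
    {"age": 45, "monthly_spend": 310.5},
    {"age": 29, "monthly_spend": 89.9},
    {"age": 52, "monthly_spend": 240.0},
    {"age": 38, "monthly_spend": 175.25},
]

stats = fit_column_stats(real_rows)
synthetic_rows = generate_synthetic_rows(stats, n_rows=200)
print(synthetic_rows[0])
```

Because each column is sampled independently, this sketch ignores correlations between columns and can produce implausible values such as negative spend. Production-grade synthetic data tools model the joint distribution and add privacy safeguards.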

Yet another sophisticated generative AI application is text-to-image generation. Applications such as OpenAI’s DALL-E 2 leverage LLMs to generate realistic images and art in response to text-based prompts. In this use case, the pre-training database generally includes images as well as the text-based captions with which they’re associated.

How Do Foundation Models Enhance the Agility of Generative AI?

Many observers consider LLMs and other generative AI technologies a key stepping stone to what’s often called “artificial general intelligence.” This is because many generative AI applications are built on foundation models. These are machine learning models that have been pre-trained on huge unlabeled data sets and, though they have been trained to address specific core tasks, are easily adaptable to adjacent tasks through additional steps known as “fine-tuning.” For example, the core LLM behind ChatGPT -- the GPT-4 foundation model -- is built on a highly adaptable machine learning approach known as the transformer architecture.

Foundation models are proliferating for a wide range of use cases, with both open-source and closed-source offerings available to anyone who wants to develop new generative applications. For instance, Hugging Face, Meta, and IBM all provide libraries of pre-trained, open-source foundation models for different tasks. A wide range of proprietary foundation models come from OpenAI, Cohere, AI21Labs, Anthropic, DeepMind, Google AI, and others.

Training a foundation model begins with an established data science technique called unsupervised learning, where a large corpus of data is fed into the model, which then algorithmically discovers “parameters” associated with facets of the task to be learned. Regardless of their source or the specific use cases for which they’re suited, foundation models can be adapted for specific enterprise requirements by fine-tuning them through a variety of techniques, such as tweaking a pre-trained model with new weights, training it with labeled task-specific data, and refining its output through reinforcement learning from human feedback. Through these techniques, a foundation model can be adapted to new tasks similar to its original function, such as when an LLM is adapted to translate English text into many other natural languages.
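The fine-tuning idea can be sketched on a deliberately tiny scale. The Python example below assumes a two-parameter linear model standing in for a foundation model; the "pre-trained" weights, the labeled task data, and all function names are hypothetical. It shows only the core mechanic: start from existing weights and adjust them with a small amount of labeled, task-specific data instead of training from scratch.

```python
def predict(weights, x):
    """Linear model: y = w * x + b."""
    w, b = weights
    return w * x + b


def mean_squared_error(weights, data):
    """Average squared gap between predictions and labels."""
    return sum((predict(weights, x) - y) ** 2 for x, y in data) / len(data)


def fine_tune(pretrained_weights, labeled_data, learning_rate=0.05, epochs=500):
    """Start from pre-trained weights and adjust them with gradient descent."""
    w, b = pretrained_weights
    n = len(labeled_data)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in labeled_data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in labeled_data) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return (w, b)


# Hypothetical weights "pre-trained" on an original task where y is about 2x.
pretrained = (2.0, 0.0)

# Hypothetical labeled data for an adjacent task where y = 2.5x + 1.
task_data = [(0.0, 1.0), (1.0, 3.5), (2.0, 6.0), (3.0, 8.5)]

tuned = fine_tune(pretrained, task_data)
print("error before fine-tuning:", mean_squared_error(pretrained, task_data))
print("error after fine-tuning: ", mean_squared_error(tuned, task_data))
```

Real fine-tuning works on models with billions of parameters and usually updates only some of them, but the pattern is the same: the pre-trained weights are the starting point, and the labeled task data supplies the correction.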

Vendors Jumping on the Bandwagon

Vendors, of course, are embracing generative AI in numerous ways:

- New start-ups. Hundreds, if not thousands, of start-ups have sprung up in the last few years to provide point solutions that utilize generative AI. These include content generators such as Jasper.ai and Compose, music generators such as Loudly and Boomy, image generators such as Picsart, speech generators such as PolyAI, and chat/virtual character generators such as Gemsouls.

- Infusing generative AI in their products. Many data and analytics vendors are infusing generative AI into their products. For example, Databricks recently announced Lakehouse IQ, which enables users in an organization to search, understand, and query their data using natural language. Snowflake recently announced Document AI, which has an LLM-based interface to understand PDF documents. Informatica announced Claire GPT, a generative AI data management platform. IBM announced WatsonX.ai to train, validate, tune, and deploy generative AI. Tableau announced Tableau GPT to provide an easier way to surface insights and interact with data. Alteryx recently announced AiDIN, which infuses generative AI into its analytics platform. The list goes on.

- Supporting and providing foundation models. Because many organizations want to run generative AI on their own data, on their own data management platforms, most of the cloud data management vendors have announced how they will support foundation models and generative AI on their platforms. Some have created their own foundation models, as well.

Where We Are Now

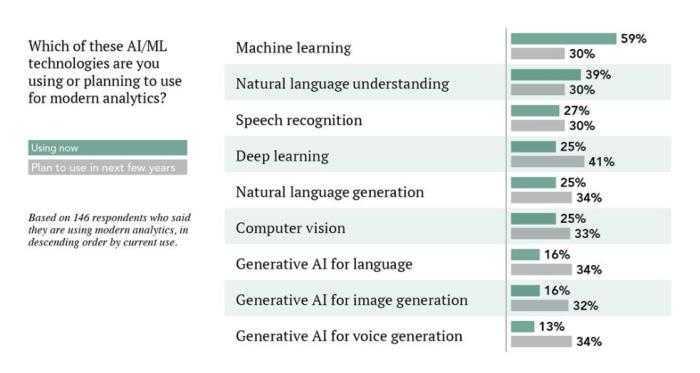

We see strong interest in generative AI at TDWI. Figure 1 comes from a 2023 TDWI Best Practices Report. In it, we asked organizations that said they were using modern analytics which technologies they were using. Fewer than 20% of respondents are using generative AI for language, image, or voice today; more than 30% are planning to use it in the next few years.

Figure 1: AI/ML technologies in use. Source: 2023 TDWI Best Practices Report: Achieving Success with Modern Analytics, available at tdwi.org/bpreports.

That isn’t to say that there aren’t concerns. For instance, in a recent TDWI webinar on LLMs, attendees expressed concerns about data privacy and security when using generative AI (77%). They were also concerned about the complexity of integrating generative AI models into their environments (51%) and about generative AI skills shortages (58%). Half were concerned about the lack of trust in generative AI models.

All of these concerns are valid. This is new territory for everyone, and approaches are evolving. TDWI sees issues in the following areas:

Security and privacy. Many organizations don’t want to use open AI platforms with their company’s data. They prefer to bring pre-trained foundation models to their data, on their platforms, so they can keep their data secure. This will require new processes as well as new skills.

Skills. There will be a new learning curve associated with generative AI, especially for use cases that go beyond asking ChatGPT or some other LLM to produce content for you. As organizations strive to build applications that use pre-trained models against their own data, they will need to learn how this works. Additionally, they will need to determine how to put these models into production, which will impact the already burgeoning field of MLOps.

Trust and transparency. If you’re using a pre-trained foundation model, you have to make sure that what it generates is not false or misleading. These systems can generate low-quality data, and there is generally little transparency into how LLMs and other generative AI models work. You’re relying on that foundation model, which is typically a black box. Although there is some work going on to bring explainability to foundation models, it is still early days. These trust and transparency issues will affect use cases from content generation to data analysis. Enterprises will need controls to ensure that results are usable.

Responsibility and ethics. Aside from producing flat-out incorrect results, generative AI may also generate biased, exclusionary, or otherwise unfair representations. This is no different from what happened with some machine learning models that incorrectly predicted who would be a good engineer (males) or who should not get parole (people of color). A layer of responsibility and ethics needs to be considered, as well as how that layer will work in your environment.

Cost. Foundation models can be computationally intensive to train and run, so you’ll need to plan for the required compute resources if you are going to execute the models in your environment.

Beyond these concerns, there are the usual challenges associated with the immaturity of today’s market for generative AI solutions. Innovations in LLMs and other generative AI technologies are accelerating, threatening to make early investments obsolete. Many commercial solutions and vendors are still new to this market, and there is no guarantee that they’ll survive the fierce competitive fray. Furthermore, increased regulatory and legal scrutiny of these technologies in coming years may slow their adoption and increase the overhead burden for enterprises that adopt generative AI into their core business models.

Nevertheless, generative AI is on a fast track to widespread adoption. It has demonstrated wide-ranging potential in publishing, media and entertainment, customer engagement, and countless other real-world applications. In the coming year, we are sure to see amazing new patterns of living unlocked by the disruptive possibilities of generative AI.

About the Authors

Fern Halper, Ph.D., is well known in the analytics community, having published hundreds of articles, research reports, speeches, webinars, and more on data mining and information technology over the past 20 years. Halper is also co-author of several “Dummies” books on cloud computing, hybrid cloud, and big data. She is VP and senior research director, advanced analytics at TDWI Research, focusing on predictive analytics, social media analysis, text analytics, cloud computing, and “big data” analytics approaches. She has been a partner at industry analyst firm Hurwitz & Associates and a lead analyst for Bell Labs. Her Ph.D. is from Texas A&M University. You can reach her at [email protected] or LinkedIn at linkedin.com/in/fbhalper.

James Kobielus is a veteran industry analyst, consultant, author, speaker, and blogger in analytics and data management. He was recently the senior director of research for data management at TDWI, where he focused on data management, artificial intelligence, and cloud computing. Previously, Kobielus held positions at Futurum Research, SiliconANGLE Wikibon, Forrester Research, Current Analysis, and the Burton Group. He has also served as senior program director, product marketing for big data analytics for IBM, where he was both a subject matter expert and a strategist on thought leadership and content marketing programs targeted at the data science community. You can reach him by email ([email protected]), on X (@jameskobielus), and on LinkedIn (https://www.linkedin.com/in/jameskobielus/).