Why Understanding Data Gravity is the Key to Data Migration Success

Data platform migration succeeds when you invest enough time, money, and leadership to build a critical mass, shift the gravitational pull, and block old routes.

- By Stan Pugsley

- July 16, 2021

Large-scale data migrations to the cloud are, or should be, part of most organizations' technology road maps. However, their cost and risk are often underestimated, with disastrous results. Projects frequently fail to deliver value and often extend far beyond the original timeline. Legacy servers live on in the data center years past their expected shut-down date and continue to support hard-to-migrate production processes. These projects stall because they fail to reach escape velocity from data gravity: the gravitational pull of the legacy platform keeps data and users in the old location while the under-powered project is stuck orbiting the old system.

The concept of data gravity explains the challenge in shifting platforms. In 2010, Dave McCrory wrote, "Services and applications can have their own gravity, but data is the most massive and dense, therefore it has the most gravity. Data if large enough can be virtually impossible to move." The difficulty is not just in the technical tasks involved with moving data, but also in the human components of change management and leadership. Large data warehouses may have hundreds of interfaces, reports, and hidden ODBC connections that are difficult to identify and move, and they may also have hundreds of users who have grown to trust and rely on the data to do their jobs.

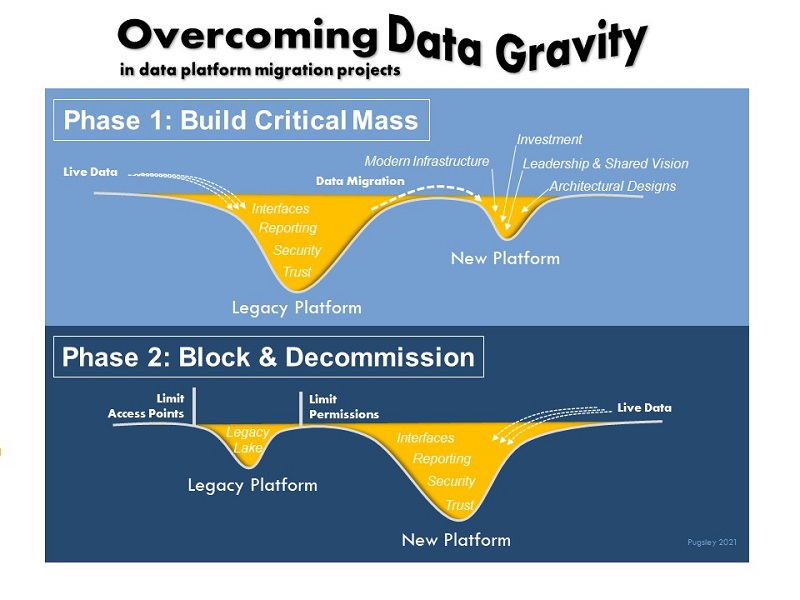

This figure shows the two phases in overcoming data gravity.

Phase 1: Build Critical Mass

The goal in this phase is to identify the data sets that deliver the greatest value to the business and move those to the new platform. Initially, you can disregard the minor data sets and the most difficult niche processes. Your objective is to build a critical mass in the new location and enough value to draw even more interest and resources to the new platform. Data pipelines may feed both systems for an interim period, but a road map spells out target dates for full transition. As attitudes and data pipelines shift, the momentum drives the business to build a strong case for investing the resources to migrate the most difficult processes and interfaces.
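One way to make this first-wave selection concrete is to score each data set and pick the ones with the best value for the effort. The sketch below is only an illustration: the data set names, the 1-10 `business_value` and `migration_effort` scores, and the `plan_first_wave` helper are all hypothetical, and a real project would weigh many more factors.

```python
from dataclasses import dataclass


@dataclass
class DataSet:
    name: str
    business_value: int    # hypothetical 1-10 score agreed with stakeholders
    migration_effort: int  # hypothetical 1-10 score estimated by engineers


def plan_first_wave(data_sets, effort_budget):
    """Greedily pick the best value-per-effort data sets that fit the budget."""
    ranked = sorted(
        data_sets,
        key=lambda d: d.business_value / d.migration_effort,
        reverse=True,
    )
    wave, spent = [], 0
    for d in ranked:
        if spent + d.migration_effort <= effort_budget:
            wave.append(d.name)
            spent += d.migration_effort
    return wave


# Hypothetical inventory: names and scores are invented for illustration.
inventory = [
    DataSet("sales_orders", business_value=9, migration_effort=3),
    DataSet("customer_master", business_value=8, migration_effort=4),
    DataSet("mainframe_gl_feed", business_value=7, migration_effort=9),
    DataSet("legacy_fax_log", business_value=1, migration_effort=2),
]

first_wave = plan_first_wave(inventory, effort_budget=8)
print(first_wave)
```

With these invented scores, the high-value, low-effort data sets land in the first wave, while the difficult mainframe feed and the minor fax log are deferred to later in the road map.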

Part of shifting the balance of gravity from the old platform to the new one is a consistent effort to provide messaging and proofs of concept showing that the new architecture and infrastructure are clearly superior and worth the effort.

One idea is to take a high-profile report or dashboard used widely in the organization and migrate it early in the project. Then set up a series of meetings with key stakeholders to demonstrate the new report, showing how performance improved while the complexity of the code that created the report has been simplified. You can send out regular emails announcing the latest developments in the migration project and highlighting improvements. All of these efforts around change management will help shift the focus and energy to the new platform.

Leadership is key to creating a sense of inevitability and improvement. The teams must have a shared vision of the future state, enabling faster development, cleaner interfaces, and better performance. Teams must also know that there is no turning back. The data they rely on is on a one-way rocket ship heading to the new destination; ideas for improvement are welcome but resistance is futile. This unified commitment from leaders in the organization will create the resolve to overcome obstacles and complications along the way.

Phase 2: Block and Decommission

The goal in this second phase is to maintain project momentum until the legacy platform is fully decommissioned. You want to drain any remaining gravity by technically limiting the old system and organizationally committing to having a single source of truth. The decommissioning process must appear to be steady and unstoppable.

This phase involves a continued push to migrate data and code while methodically blocking ingress and egress to the legacy platform. Some niche processes, such as Excel spreadsheets connected to data via ODBC, will never show up on an architecture diagram or list of systems, so they may only rise to the surface if their connection route is blocked. As you identify the last remaining reports and interfaces, you can determine a date for the end of life for the legacy platform and communicate that to the business stakeholders -- while continuing to celebrate gains on the new platform.
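Before blocking a route outright, it can help to see who is still using it. The sketch below assumes you can export recent connection records from the legacy platform into simple dictionaries; the record fields (`client`, `application`, `day`), the sample host names, and the `remaining_consumers` helper are all hypothetical, and real audit logs will look different on every platform.

```python
from collections import Counter
from datetime import date


def remaining_consumers(connection_log, known_migrated, since):
    """Count recent legacy connections from clients not yet marked as migrated."""
    counts = Counter(
        (rec["client"], rec["application"])
        for rec in connection_log
        if rec["day"] >= since and rec["client"] not in known_migrated
    )
    # Busiest consumers first, so the largest remaining users surface at the top.
    return counts.most_common()


# Hypothetical connection records exported from the legacy platform.
log = [
    {"client": "fin-ws-014", "application": "Excel (ODBC)", "day": date(2021, 6, 1)},
    {"client": "fin-ws-014", "application": "Excel (ODBC)", "day": date(2021, 6, 8)},
    {"client": "etl-prod-01", "application": "nightly_load", "day": date(2021, 6, 8)},
    {"client": "ops-ws-203", "application": "Excel (ODBC)", "day": date(2021, 3, 2)},
    {"client": "mkt-ws-077", "application": "report_tool", "day": date(2021, 6, 9)},
]

stragglers = remaining_consumers(
    log, known_migrated={"etl-prod-01"}, since=date(2021, 5, 1)
)
for (client, application), hits in stragglers:
    print(client, application, hits)
```

A list like this gives you a short set of owners to contact before the cutoff. Consumers that never appear in any log are the ones that will surface only when the route is actually blocked.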

A Final Word

Data gravity will only shift with significant leadership effort, investment, and actual, demonstrated value delivered by a new platform. It is not enough to simply announce a project, buy a license, and let the data engineers work behind the scenes on a migration. Organizational and technical resistance to change will sink your project before it can reach escape velocity from the gravity of the current platform.

The final goal is to have a single source of truth on a new platform that will enable better performance and more flexibility into the not-too-distant future. Cloud platforms are constantly improving and expanding without requiring your data to move, so if you pick the right technology, your next migration could be your last!

About the Author

Stan Pugsley is an independent data warehouse and analytics consultant based in Salt Lake City, UT. He is also an Assistant Professor of Information Systems at the University of Utah Eccles School of Business. You can reach the author via email.