ETL Test Automation Planning for DW/BI Projects

Applying DevOps-style test automation to your projects can guarantee a high level of data quality.

- By Wayne Yaddow

- March 22, 2019

According to a CIO.com article, Gartner Group recently stated that between 70 and 80 percent of business intelligence initiatives are initially considered failures but many resume due to their importance to the organization.

As businesses create (and need) more data than ever before, the sheer number of BI failures threatens to grow exponentially. This could have a far-reaching impact on the underlying digital transformation initiatives that these projects are designed to enable.

Given that companies are releasing new applications faster than ever -- some releasing updates on demand and multiple times a day -- too many organizations are using manual ETL test processes and the wrong tools to manage critical parts of releases for highly visible, often customer-facing, applications. That translates into risk to customer loyalty, the brand, confidential data -- and worse.

This article explores how applying DevOps-style test automation to DW/BI and other data integration projects can guarantee a high level of data quality -- instilling the trust that is essential for the success of BI projects and the digital transformation initiatives that are ultimately driving them.

Taking a DevOps Approach to DW/BI Testing

DevOps, with its focus on tool automation across the entire development life cycle, addresses an enormous challenge for big data and DW/BI developers. Many of today's big data and DW/BI projects are already leveraging (or actively planning to adopt) agile and DevOps processes -- but not for testing. DW/BI projects in general are not currently using automated testing tools to the extent that is needed for project successes. Perhaps this is because they believe the required testing functions are not commercially available or are too complex and expensive to develop in-house.

When thinking about what you need to test to ensure data integrity, consider that BI is more than just data warehouses (DW) and extract, transform, and load (ETL). Services between the ETL processes, as well as the middleware and dashboard visualizations, also come under the purview of BI. Messages and negotiating pacts between these layers are complex and require considerable coordination and testing.

DevOps helps facilitate this with constant deployments and testing. Implementing a DevOps testing approach to DW/BI means automating the testing of different source and target data sets to keep data current. This can be tremendously beneficial when handling many (possibly hundreds of) diverse data sources and volumes. Your team will be able to detect errors before they threaten BI applications in production. Moreover, you will have more time to fix issues before applications reach production.

Why Test Automation?

Continuous quality is a systematic approach to process improvement in order to achieve the quality goals of development and the business it supports. In the 2018 Magic Quadrant for Software Test Automation, Gartner states: "Test automation tools are essential elements of a DevOps toolchain and enablers for achieving the continuous quality approach required for successful DevOps."

However, as for any IT project, repeated ("regression") testing is important to guarantee a high level of (data) quality. The more we test, the more bugs we will resolve before going live. This is especially crucial for business intelligence projects. When the users can't trust the data, it's likely that the BI solution itself will not be trusted -- and thus fail.

As mentioned earlier, ETL testing is primarily conducted manually, which makes it a labor-intensive and error-prone process. Automation can not only help execute tests; it can also assist with designing and managing them.

Which Tests Should be Automated?

Automating ETL tests allows frequent smoke and regression testing without much user intervention and supports automatic testing of older code after each new DB build.

The decision to implement automated tools for ETL testing depends on a budget that supports additional spending to meet advanced testing requirements. It is important to remember test tools built and maintained in-house are better than no test automation at all. In the end, test automation will save time. Additionally, business users will appreciate the quality of BI deliverables and accept the data from the data platform solution as the "single version of the truth."

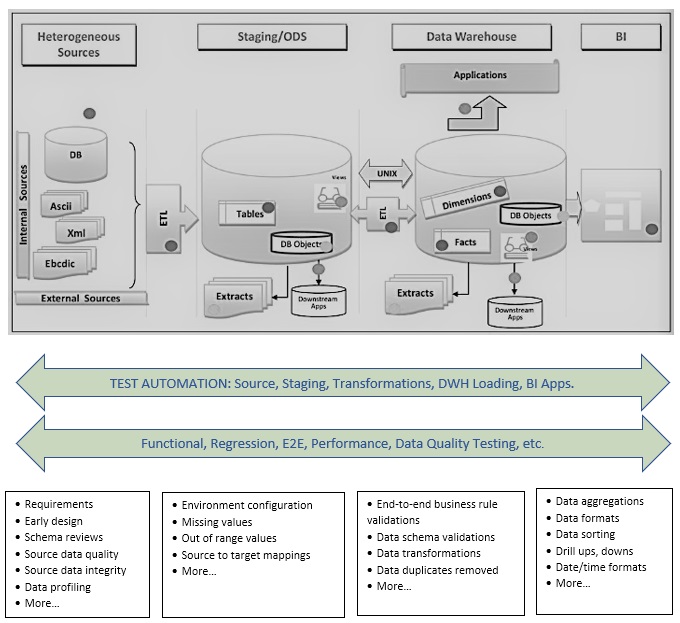

Figure 1: A sampling of tests and validations that should be considered for most DW/BI projects.

Planning for the ETL Test Automation Process

Below are several highly-recommended test automation planning steps for DW/BI projects. As with all projects, the decisions made during the planning stages of a test automation project set the stage for success or failure. For this reason, we suggest that you set goals, analyze current processes, and build the right implementation team prior to launching the test automation project.

1. Analyze your current testing process -- from unit testing and component testing to data quality testing

2. Define the stakeholders and IT team

3. Identify and prepare several test scenarios for test automation

4. Research and select two or three top commercial or open source ETL and data quality automation tools for an in-depth evaluation

5. Conduct proof-of-concept exercises, preferably with the collaboration of tool vendors who can help ensure you fully understand the tool's potential and conduct the most accurate assessment in the shortest time possible

6. Implement the selected automation tools

7. Make time for training and the learning curve

8. Begin automating already-documented test cases

9. Review your process and results

Planning Which ETL and DW/BI Verifications are Best for Automation

When you build scenarios for test automation, evaluate your scenarios and determine which are the best candidates for automation based on risk and value. Which types of defects would cause you to stop an integration or deployment? Which types of tests exercise critical core functionality? Which tests cover areas of the application that have historically been known to fail? Which tests are providing information that is not already covered by other tests in the pipeline?

Common manual DW tests

- Exploratory testing requires the tester's knowledge, experience, analytical/logical skills, creativity, and intuition. Human skills are needed to execute the testing process in this scenario.

- Ad hoc testing employs no specific approach. It is often an unplanned method of testing where the understanding and insight of the tester is the important factor.

Common automated DW tests

- Source-to-target data reconciliation testing (including transformation testing, regression testing, and smoke testing) can benefit from automated testing because of frequent code changes and the ability to run the regression assessments in a timely manner.

- Repeated execution requires the repeated execution of a task, so it is best automated.

- Load testing is another type of testing where automation is essential for efficiency.

- Performance testing, which requires the simulation of thousands of concurrent users, requires automation.

- End-to-end testing can be time-consuming because of the variety of stages, technologies, and vast volume of data involved. Each phase of ETL testing requires different strategies and types of testing -- for example, one-to-one comparisons, validations of migrated data, validations of transformation rules, reconciliations (e.g., sources to targets), data quality check, and front-end testing of BI reports.

Table 1 below lists most types of testing often considered for test automation and test automation tool implementations (commercial, open-source, and in-house tools). Utilizing a list of test scenarios such as this can be a good start on your road to DH/BI test automation.

|

Test Scenarios

|

Purpose and Test Cases

|

|

Validate metadata

|

Validate the source and target table structure as per the mapping and metadata documents

- Data types are validated in the source and the target systems

- The length of data types in the source and the target system should be the same

- Data field types and their format are verified to be the same in the source and the target system

- Validate the column names in the target system

|

|

Validate mapping documents

|

Validate mapping and metadata documents to ensure all the information has been implemented; the mapping document should have a change log, maintain data types, length, transformation rules, etc.

|

|

Validate constraints

|

Validate all column and transformation constraints and ensuring that they are applied on the expected tables

|

|

Data consistency and integrity checks

|

Check the misuse of integrity constraints such as foreign keys -- no orphan foreign keys

The length and data type of an attribute may vary in different tables, although their definition remains the same at the semantic layer

|

|

Data completeness validations

|

Verify that all data is loaded to the target system from the source system

- Record counts in the source and the target data

- Boundary value analysis (tests of min/max, no truncations)

- Validate the unique values of primary keys

|

|

Data correctness validations

|

Verify values of data in the target system

- Misspelled or inaccurate numeric data in target table

- Distinct values in columns (not unique data) is stored when you disable integrity constraints at the time of import

|

|

Data transformations applied according to business rules

|

Create a matrix of scenarios for input values and expected results and then validate with end users

- Validate parent-child relationship in the data by creating scenarios

- Use data profiling to verify the range of values in each field

- Validate if the data types in the warehouse are same as mentioned in the data model

- Default values, data trimming, etc.

- Verify source table joins for aggregations, etc.

|

|

Data quality validations

|

Perform number check, date check, precision check, data check, null checks, etc. on both source and target data

Example: Date format should be the same for all the values per the column definitions

|

|

Duplicate data validations

|

- Validate duplicate values in target system columns and rows when data is loaded from multiple columns in sources

- Validate primary keys and other columns if there are any duplicate values as per the business requirement

- Verify that multiple columns specified as a unique key can be grouped without resulting in duplicate records.

|

|

Date validation checks

|

Validate the date field for all defined actions performed in ETL processes

- From_Dates are not greater than To_Dates

- Min and max values within bounds ( 01/01/1970, 2099-12-31)

- Date and time values as specified

- Date values contain no junk values or null values

|

|

CDC, SCD, fact table updates

|

Verify that all changed data (CDC) is captured from sources and applied according to changing dimensions (SCD) and fact table specifications in requirements

|

|

Compare staging and DW tables

|

Verify that target DW tables are precisely the same as staging where specified and DW tables are correctly loaded where differences are specified between them

|

|

Dropped records

|

Validate that no records are dropped where they should not be between all sources and targets; verify that records with error_status = “E” are dropped and that any records in the same or related tables that with foreign keys to these dropped records are processed according to specifications

|

|

Extra records, additional columns in target

|

Verify that extraneous data not meant to be loaded was not actually loaded

|

|

Unique key exclusivity

|

Verify that all columns specified as unique key are unique among all records

|

|

Record counts

|

Verify record counts as correct when compared with source records and when compared from one database load to another

|

|

Exploratory testing

|

Providing distinct values from all columns to support input exploratory testing.

|

|

Maintain source table IDs through to DW

|

Providing functions that allow tracking/verification of surrogate. native, or ID keys from source to final target

|

|

ETL Lookup processing

|

Verify that ETL “lookups” were processed correctly

|

|

Aggregated values

|

Verify aggregation of values from sources to targets

|

Table 1: Test scenarios and test cases frequently considered for automated testing.

Summary

Many projects' DW/BI teams have found that it's possible to succeed with automated testing. Automated coverage will generally not replace all manual unit, component, or end-to-end testing. However, it will assure that the more costly manual work is focused on high-risk, high-value activities and, in the process, complement the QA process.

Creating automated DW/BI tests is well worth the up-front effort, especially in the data warehouse testing phases. Automated tests can be run hundreds of times at modest cost with almost no physical time constraints.

We know that testing takes time. We know that testing costs money. If planning and other up-front automation efforts reduce time and costs, that has to benefit your organization's bottom line.

About the Author

Wayne Yaddow has over 20 years of experience leading data migration/integration/ETL testing projects at organizations including J.P. Morgan Chase, Credit Suisse, Standard and Poor’s, AIG, Oppenheimer Funds, IBM, and Achieve3000. Additionally, Wayne has taught International Institute of Software Testing (IIST) courses on data warehouse, ETL, and data integration testing. He continues to lead numerous ETL testing and coaching projects on a consulting basis. You can contact Wayne via email.