Data Warehousing: Thirty Years Old and Still Delivering Value

The data warehouse architecture has changed very little in 30 years, a rare longevity in an industry where technology changes so rapidly. What fundamental and unchanging truths does it reveal?

- By Barry Devlin

- February 1, 2016

This year marks the 30th birthday of the data warehouse. It was way back in 1986 that my colleagues and I in IBM Europe defined the first architecture, for internal use in managing the sales and delivery of such exotic machines as System/370 mainframes and System/38 minicomputers. The architecture was subsequently described in the IBM Systems Journal in 1988.

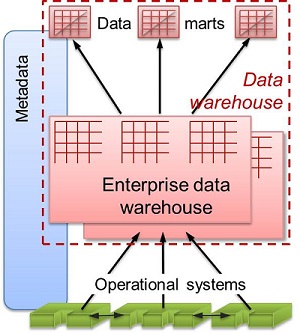

These machines, along with state-of-the-art PCs running DOS 3.3 on Intel 80286 processors with disks as large as 20 MB, show just how much the world has changed in the intervening period. Today’s smartphones are more powerful than the mainframes of that era, yet the data warehouse architecture has changed very little. The architecture shown in the figure below, dating from the mid-1990s, is functionally equivalent to the one defined 10 years earlier and remains the basis for many data warehouse designs today.

Figure 1: Typical data warehouse architecture.

In an industry where technology is changing so rapidly, such longevity is rare and must point to some fundamental and unchanging truths underlying the design. These are the principles of data management, and they remain valid regardless of changes in the underlying technologies. Current implementations, whether data warehouses or data lakes, must honor these principles and embed them in their designs. At the same time, recent developments in big data and the Internet of Things (IoT) have clearly reset the relative priorities of different aspects of these same principles.

The Timeliness/Consistency Trade-off

One of the most important principles that emerged in data warehousing was the timeliness/consistency trade-off. The lessons learned here through 30 years of data warehousing are as relevant today as they ever were and must also be applied to current developments.

The timeliness/consistency trade-off is this: when interrelated data is created or managed in more than one place (application or geographical location), the degree of consistency you can achieve is inversely related to the level of timeliness required. Despite marketing claims to the contrary, this is a reality dictated by the known laws of physics! When data about one object exists in two or more places, the systems managing it must communicate with one another and exchange data to keep the copies consistent. That communication cannot be instantaneous: its speed is limited by the speed of light, and the closer to that limit you want to get, the more it will cost. Until the exchange completes, the copies may disagree, so a user must either wait for them to be reconciled, sacrificing timeliness, or accept data that may be inconsistent.
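The trade-off can be made concrete with a toy model. The sketch below is a minimal illustration under stated assumptions, not a description of any real system: a single update is written at one site, the only delay is propagation at the speed of light in a vacuum (real networks are slower, so this is a best case), and a reader at a second site either answers immediately or waits for the update to arrive. The names `Update`, `min_propagation_delay`, and `read_at_remote` are hypothetical.

```python
from dataclasses import dataclass

# Speed of light in a vacuum, in km/s. Signals in fibre and through network
# equipment are slower, so delays computed from this constant are a lower bound.
SPEED_OF_LIGHT_KM_PER_S = 299_792.458


@dataclass(frozen=True)
class Update:
    """A hypothetical change to one data object, made at the source site."""
    value: str
    written_at: float  # seconds since an arbitrary reference time


def min_propagation_delay(distance_km: float) -> float:
    """Best-case one-way delay, in seconds, between two sites."""
    return distance_km / SPEED_OF_LIGHT_KM_PER_S


def read_at_remote(update: Update, previous: str, read_at: float,
                   distance_km: float, wait_for_consistency: bool) -> tuple[str, float]:
    """Return (value seen, time the answer is delivered) at the remote site."""
    arrival = update.written_at + min_propagation_delay(distance_km)
    if wait_for_consistency:
        # Consistent choice: do not answer until the update has arrived.
        return update.value, max(read_at, arrival)
    # Timely choice: answer now with whatever copy the remote site holds.
    seen = update.value if read_at >= arrival else previous
    return seen, read_at


if __name__ == "__main__":
    change = Update(value="shipped", written_at=0.0)
    # A reader 5,000 km away asks 1 ms after the write.
    print(read_at_remote(change, "pending", 0.001, 5_000, wait_for_consistency=False))
    print(read_at_remote(change, "pending", 0.001, 5_000, wait_for_consistency=True))
```

Neither option escapes the delay: the timely read returns the stale value `"pending"` at 1 ms, while the consistent read returns `"shipped"` only after about 16.7 ms, the best-case travel time over 5,000 km.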

The original data warehouse architecture emphasized consistency simply because that was the primary business concern of management decision makers at the time. This consistency was achieved by reconciling data in the enterprise data warehouse and, as a consequence, delaying its availability to users. If you wanted timeliness, you had to go directly to the operational systems or build an “independent” data mart fed directly from a limited set of sources. This trade-off was well understood and widely accepted.
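The consistency-first approach can be sketched as a periodic batch load. The minimal sketch below assumes two hypothetical operational sources, a sales system and a delivery system, that each hold customer names keyed by customer ID; the load reconciles them into a single view and flags disagreements for review. The reconciliation rule (treat the sales system as the system of record), the function and field names, and the batch timings are all illustrative assumptions, not the rules of the original IBM architecture.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LoadResult:
    customers: dict[str, str]  # reconciled customer ID -> name
    conflicts: list[str]       # IDs that disagree or appear in only one source
    available_at_hour: float   # when users can first query the reconciled data


def nightly_load(sales: dict[str, str], delivery: dict[str, str],
                 cutoff_hour: float, batch_hours: float) -> LoadResult:
    """Reconcile two source extracts taken at cutoff_hour into one view.

    Hypothetical rule: the sales system is the system of record; a record
    found only in the delivery system is kept but still flagged.
    """
    customers: dict[str, str] = {}
    conflicts: list[str] = []
    for cust_id in sorted(sales.keys() | delivery.keys()):
        from_sales = sales.get(cust_id)
        from_delivery = delivery.get(cust_id)
        customers[cust_id] = from_sales if from_sales is not None else from_delivery
        if from_sales != from_delivery:
            conflicts.append(cust_id)
    # Consistency costs timeliness: nothing is visible until the batch ends.
    return LoadResult(customers, conflicts, cutoff_hour + batch_hours)


if __name__ == "__main__":
    sales = {"c1": "ACME Ltd", "c2": "Bolt plc"}
    delivery = {"c1": "Acme Limited", "c2": "Bolt plc", "c3": "Crane & Co"}
    print(nightly_load(sales, delivery, cutoff_hour=22.0, batch_hours=8.0))
```

With an extract taken at 22:00 and an eight-hour batch, users see one reconciled customer list, with `c1` and `c3` flagged, but not until 06:00 the next morning (hour 30.0). An independent data mart fed straight from the sales system could answer sooner, but it would never notice `c3` or the spelling conflict on `c1`.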

With today’s culture of instant gratification, supported and driven by big data and IoT technologies, the emphasis has shifted to timeliness. Data lake “architectures” (many are architectures only in name) are designed with high-speed data availability as the key driver. In many cases, however, little or no attention is paid to the need for consistency. Yet that requirement is real, and it ultimately lands on the desks of the data scientists and decision makers using the systems.

The 30-year-old data warehouse architecture still has much of value to teach us. Balancing the business needs for consistency and timeliness remains essential even though the relative priorities change. The role of the enterprise data warehouse in driving consistency remains vital in every enterprise. It needs to be supplemented, of course, by other techniques offered by newer technologies, both to support consistency and to enable higher levels of timeliness. Even after 30 years on the go, the data warehouse architecture continues to have legs.

About the Author

Dr. Barry Devlin is among the foremost authorities on business insight and one of the founders of data warehousing in 1988. With over 40 years of IT experience, including 20 years with IBM as a Distinguished Engineer, he is a widely respected analyst, consultant, lecturer, and author of “Data Warehouse: From Architecture to Implementation” and “Business unIntelligence: Insight and Innovation beyond Analytics and Big Data” as well as numerous white papers. As founder and principal of 9sight Consulting, Devlin develops new architectural models and provides international, strategic thought leadership from Cornwall. His latest book, “Cloud Data Warehousing, Volume I: Architecting Data Warehouse, Lakehouse, Mesh, and Fabric,” is now available.