How Big Data Accelerators Enable Faster, Cost-Effective Analytics

CPU speed is no longer growing fast enough to keep up with demanding workloads, highly complex computing, and astronomical data growth, but specialized processors can fill the gap.

- By Steve Tuohy

- May 14, 2021

The Need for Speed

The world moves fast. Today's leading companies need to be data-driven and, more important, able to act on data insights with speed. This takes many forms, from personalizing customer experiences at the point of interaction to processing billions of data points to detect fraudulent activity to pivoting strategies as new data indicates changing environments.

There's been no shortage of innovation among data applications over the past decade, helping organizations yield value from growing data volumes. Modern data platforms -- data lakes, data warehouses, data lake houses -- have largely risen to the challenge.

This is all intertwined with the exponential improvement in hardware, computing, storage, and memory. In this article, we highlight how the innovation in data platforms and the separate innovation in computing has opened up the need for data accelerators for businesses and the world at large to meet continued demand for speed and efficiency.

Moore's Law (think: smaller, cheaper microchips every couple of years) has been essential to that data and analytics innovation for decades, pairing faster central processing units (CPUs) with the growing volumes of data and use-case demands. The reliable growth in processor speed is slowing, and business leaders and data teams should take notice. Although these teams rarely interact with the underlying servers, there's a quiet revolution happening that impacts the business and its expectations of speed.

The Solution: Accelerators

To understand this revolution, it is worth taking a quick look at the history of the CPU. Whether for your personal computer, a small server, or a large data center, one of the primary strengths of the CPU is that it is general purpose and can handle almost all processing. Whether you are writing an email on your computer, serving websites, or performing complex artificial intelligence (AI) algorithms, the CPU can handle the job. The challenge is that CPU speed is no longer growing at a pace to match the demands across a variety of workloads, highly complex computing, and astronomical data growth.

In a way, much of the big data innovation of the past decade also reflects the limitations of CPUs. Most modern big data platforms add performance by distributing computing across many CPU nodes, or computers, in the network. They offer the promise that as your needs grow, you can scale out or scale up with more nodes or faster processors. At best, this is an expensive proposition, but more realistically performance falls off because the communication overhead between nodes leads to diminishing returns.

CPU growth is slowing, but other types of specialized processors can fill the gap. It turns out that while a general-purpose processor can handle most computation tasks adequately, matching workloads with an optimal computing framework can significantly increase performance. Translation: the processor features needed for typing an email are different from those required to render a website with advanced graphics.

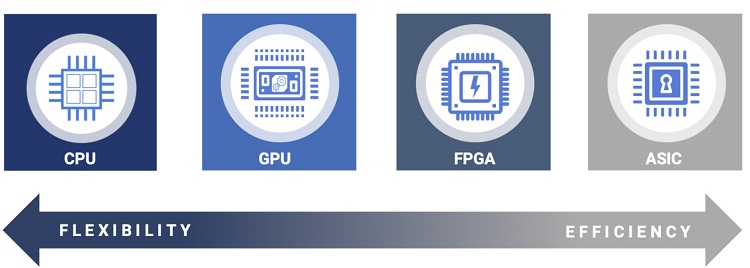

The general-purpose CPU is not going away. The future is not swinging the pendulum to where there are hundreds of different processors for different tasks. There are large classes of alternatives, each optimized for different classes of use cases, including graphical processing units (GPUs), field programmable gate arrays (FPGAs), and application-specific integrated circuits (ASICs) in high-value use cases.

How Data Acceleration Happens in the Real World

Here are a few examples of how these special processors improve performance and enable data acceleration.

Google TPUs, ML, and ASICs

Machine learning (ML) is a good use case to start with to illustrate the benefits of acceleration. The rapid advances in ML have depended on modern data platforms and the increasing ability to manage enormous data sets. Processing this data alone has vast computing requirements. Additionally, ML model training and inference are highly parallel workloads, making them good candidates for alternative processors.

In 2018, Google introduced tensor processing units (TPUs) which are ASICs narrowly designed for neural networks, specifically Google TensorFlow. Developing ASICs requires significant time and money. Google uses these TPUs internally for tools such as Photos and Maps, and they provide a robust ML solution for customers, who do not have to manage the hardware programming or integration.

Facebook has also developed ASIC accelerators for internal use such as user prediction; Amazon has done similar work with Alexa.

Amazon AQUA: The Data Warehouse and FPGAs

Where the CPU is general-purpose, the ASIC is the other end of the spectrum -- a chip designed and built for a specific, typically narrow, usage. Between those ends of the spectrum sits the field programmable gate array (FPGA), another acceleration option. FPGAs offer many of the performance advantages of an ASIC, but true to the "P" in the acronym, can be programmed and reprogrammed for distinct applications.

Amazon recently announced a preview of its Advanced Query Accelerator (AQUA), which uses FPGAs to accelerate its Redshift data warehouse. The data warehouse market is fiercely competitive and evolving fast, with legacy providers trying to keep up with the rapid cloud adoption led by Redshift, Snowflake, and others. Data warehouse providers largely innovate at the software level, yet AWS has identified a path to acceleration beyond the application itself, moving beyond the traditional CPU that underlies the data warehouse.

By building acceleration directly into their application, Amazon will presumably offer a seamless experience to the user, providing a competitive advantage similar to what TPUs give Google.

Nvidia RAPIDS: GPUs, Machine Learning, and More

Graphics processing units (GPUs) are another accelerator. Like FPGAs, they offer more flexibility than an ASIC, and they are relatively ubiquitous with the popularity of gaming, video processing, and even cryptocurrency mining. The GPU was created (and named) for its specialized ability to process graphics better than a CPU; the "general purpose" GPU (GPGPU) is well-suited for highly parallelized workloads and has aided deep learning's rapid adoption. NVIDIA, the leading GPU provider, has developed RAPIDS software libraries to connect data science applications with GPUs.

RAPIDS represents an interesting contrast to TPUs and Aqua Redshift because it is not specifically designed for a single application. BlazingSQL, Plotly, Databricks, and other vendors have announced RAPIDS support, giving users access to GPU acceleration for SQL, gradient-boosted decision trees (GBDT), and Delta Lake. These vendors offer an integrated solution with their applications. Organizations can also utilize GPU acceleration by programming RAPIDS for open source data tools and other applications.

Bigstream and Apache Spark

The data acceleration market is largely filling a gap to bridge data applications and the specialized processors that can potentially improve performance. The economics of ASICs -- high investment for a narrow but valuable operation -- will only make sense for a few applications like TPUs. Although some will integrate accelerators into their own applications such as Aqua, most application providers won't venture into complex programming. An increasing number of independent software vendors (ISVs) are endeavoring on these programming paths to provide seamless acceleration for users.

Bigstream, for instance, accelerates Apache Spark with a software framework that takes advantage of FPGAs and other available accelerators. Spark users seamlessly add Bigstream software, which evaluates each task and determines the optimal processing engine -- FPGA or CPU. Such acceleration software ensures that Spark users do not need to modify their code or understand FPGA programming to gain the benefits of acceleration.

Data Acceleration in the Cloud

The growing share of big data workloads on cloud platforms is driving the adoption of acceleration technology. On a public cloud such as AWS or Azure, users increasingly can add GPU or FPGA instances with a few clicks and include them as much or as little as needed. The ROI math obstacle of "how much utilization is needed to justify the proof of concept (POC) and implementation" basically disappears with pay-as-you-go pricing. Heavy data users now have the opportunity to match data workloads with the optimal infrastructure.

That's not to say acceleration is limited to cloud deployments. Because ISVs are addressing the programming challenges, it is far easier to justify and implement specialized processors on-premises. Cloud availability also can help on-premises customers run cloud proofs of concept to gain more confidence before making the hardware investments and accepting IT time requirements to add acceleration to their data centers.

The Acceleration Market

It's easy to think of this shift as just a hardware evolution: specialized computing filling a gap of diminishing CPU performance gains. ARK, an investment firm, forecasts that "accelerators, such as GPUs, TPUs, and FPGAs" will become a $41 billion industry in the next ten years, even surpassing CPUs." This is heavily driven by big data analytics and AI and is one reason AMD announced it will spend $35B to acquire leading FPGA provider Xilinx.

This market and the value for customers is equally dependent on the software needed to connect the applications with the hardware. Some have tinkered with accelerators in the past, but widespread adoption depends on turnkey solutions that don't require developers to integrate hardware or change existing application code. Fully integrated products such as Aqua Redshift and software such as Bigstream and RAPIDS are different, compelling approaches.

Although we have focused on hardware-based acceleration, many of the same software approaches also deliver acceleration with existing CPUs. By evaluating applications such as Spark for FPGA or GPU acceleration, users can also accelerate specific operations with native C++ code.

Looking Forward: Acceleration's Impact

It may be true that we can't depend on Moore's Law anymore, but as is often the case, when one door closes, another opens. Innovations are providing more power and efficiency, but it only matters if these innovative solutions are seamless to the business user and benefit their organization.

Highlighting a big data acceleration market inherently focuses on the speed side of performance gains. Broadening beyond the general-purpose CPU means also optimizing such things as energy efficiency and cost. Most organizations have learned that if you're not paying attention, the shift to the cloud can get very expensive. The meter runs while the general-purpose CPU slowly tackles every workload. Acceleration gets you to the finish line faster and helps reduce cloud spending.

About the Author

Steve Tuohy is the head of marketing at Bigstream. Steve has spent his career at the intersection of data and markets, from data science-driven economic analysis to marketing operations to telling the stories of big data innovators. Prior to Bigstream, he led marketing teams at Alation, Cloudera, and Cisco. You can reach the author via email, Twitter, or LinkedIn.