A New Approach to Streaming Analytics That Can Track a Million Data Sources

Combining streaming analytics and in-memory computing can provide a potent tool for tackling new problems.

- By William L. Bain

- July 13, 2020

Devices from thermometers to drones have become increasingly intelligent. They ceaselessly emit telemetry for collection and analysis, but the software platforms needed for streaming analytics have struggled to keep up. The goal of these platforms is to make sense of all this data and respond in a timely manner. The potential benefits are huge: lives can be saved, operational costs can be lowered, and new opportunities can be captured.

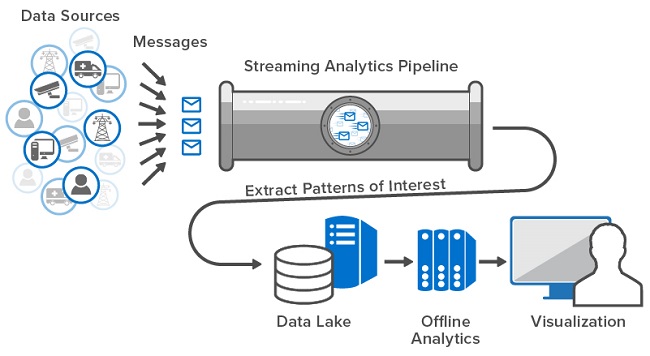

The trouble is that it is difficult to get the “big picture” in real time. There is just too much data, and it’s flowing in too rapidly. This is especially true when there are thousands or even millions of data sources creating streams of messages. To sidestep this challenge, most streaming analytics software is organized as a pipeline that ingests messages and examines them as they flow by to extract patterns of interest or issues that require action. Only a limited amount of inspection and reaction can be accomplished in real time. For the most part, messages are passed along to data lakes for offline analysis and visualization, using popular big data tools such as Spark.

For example, when you check out at a supermarket, the coupons you receive have been selected using big data tools that ran the previous night (or earlier). The system does not attempt to examine your shopping cart, match it with your shopping history, and make thoughtful suggestions on the spot. It’s just not feasible with today’s techniques for streaming analytics.

Let’s look at a streaming analytics approach that might make it possible.

Reimagining the Possibilities with Real-Time Digital Twins

Here’s another example. Suppose you are using a streaming analytics platform to track one million smart watches that deliver medical telemetry such as heart rate, blood pressure, and oxygen saturation. To be useful, this type of streaming analytics can’t wait for offline processing. If a medical issue is spotted, action needs to be taken immediately. Knowledge of each person’s age, medical history, medications, and current activity is also crucial to evaluate this telemetry meaningfully, but where would the data be kept?

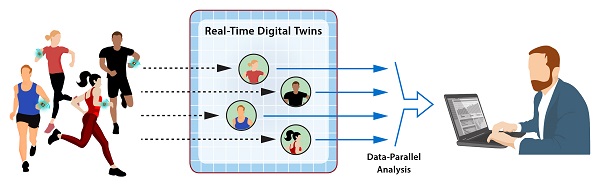

Instead of watching a pipeline full of messages flow by, what if we could direct all the messages from each data source (here, a smart watch) to a separate snippet of analytics code? This code would have immediate access to the latest incoming messages as well as to contextual information that is tracked and updated for that specific data source. It could focus on just that one data source instead of trying to extract patterns from an aggregated data stream, and it could complete its analysis in milliseconds.

That’s what we call a “real-time digital twin.” This novel approach to streaming analytics refactors the problem and dramatically simplifies the developer’s task. It also empowers the analytics platform to inspect and respond individually for each data source.
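To make the idea concrete, here is a minimal Python sketch of one way a real-time digital twin could be modeled. It is an illustration under stated assumptions, not the API of any particular platform: the names `WatchTwin`, `TwinRouter`, `process_message`, and `dispatch` are hypothetical, and the heart-rate limit of 220 minus age is only a common rule of thumb used as a placeholder, not medical guidance.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class WatchTwin:
    """Hypothetical twin: per-device context plus a short telemetry history."""
    device_id: str
    age: int
    activity: str = "resting"
    recent_heart_rates: List[int] = field(default_factory=list)
    in_distress: bool = False

    def process_message(self, message: dict) -> List[str]:
        """Analyze one message using only this device's own context."""
        self.activity = message.get("activity", self.activity)
        heart_rate = message["heart_rate"]
        # Keep only the five most recent readings as context.
        self.recent_heart_rates = (self.recent_heart_rates + [heart_rate])[-5:]
        # Placeholder rule (assumption): flag readings above 220 minus age.
        limit = 220 - self.age
        self.in_distress = heart_rate > limit
        if self.in_distress:
            return [f"{self.device_id}: heart rate {heart_rate} exceeds {limit}"]
        return []


class TwinRouter:
    """Hypothetical router: sends each message to the twin for its source."""

    def __init__(self) -> None:
        self.twins: Dict[str, WatchTwin] = {}

    def register(self, twin: WatchTwin) -> None:
        self.twins[twin.device_id] = twin

    def dispatch(self, message: dict) -> List[str]:
        twin = self.twins[message["device_id"]]
        return twin.process_message(message)


router = TwinRouter()
router.register(WatchTwin(device_id="watch-1", age=70))
router.register(WatchTwin(device_id="watch-2", age=25))

# The same reading is judged differently because each twin knows its wearer.
reading = {"heart_rate": 165, "activity": "running"}
alerts_1 = router.dispatch({"device_id": "watch-1", **reading})
alerts_2 = router.dispatch({"device_id": "watch-2", **reading})
```

In this sketch the identical reading raises an alert for the 70-year-old wearer but not for the 25-year-old, which is the point of keeping context alongside the analytics code for each data source.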

Real-time digital twins also surface key contextual data that can be analyzed in aggregate. This would otherwise have to be done offline with traditional approaches. For example, they could track which watch wearers are potentially in distress, and this information could be aggregated for all users by activity and age to quickly spot emerging issues that need attention (such as overworked participants in an exercise class).
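The aggregation described above might look something like the following Python sketch. It assumes each twin exposes a few state fields; the `TwinState` record, the `age_band` helper, and the sample data are all hypothetical and exist only to illustrate grouping by activity and age.

```python
from collections import Counter
from typing import Iterable, NamedTuple, Tuple


class TwinState(NamedTuple):
    """Hypothetical snapshot of the state one twin tracks for its wearer."""
    device_id: str
    age: int
    activity: str
    in_distress: bool


def age_band(age: int) -> str:
    """Bucket an age into a decade label such as '30-39'."""
    decade = (age // 10) * 10
    return f"{decade}-{decade + 9}"


def distress_by_group(twins: Iterable[TwinState]) -> Counter:
    """Count wearers flagged as in distress, grouped by (activity, age band)."""
    counts: Counter = Counter()
    for twin in twins:
        if twin.in_distress:
            key: Tuple[str, str] = (twin.activity, age_band(twin.age))
            counts[key] += 1
    return counts


# Made-up sample data for illustration only.
twins = [
    TwinState("watch-1", 34, "exercise class", True),
    TwinState("watch-2", 37, "exercise class", True),
    TwinState("watch-3", 36, "exercise class", False),
    TwinState("watch-4", 62, "walking", True),
]
summary = distress_by_group(twins)
```

With this sample data, `summary` holds a count of 2 for wearers aged 30-39 in an exercise class and 1 for wearers aged 60-69 who are walking. A cluster of flags in one group is the kind of emerging issue the aggregate view is meant to surface.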

The Technology That Makes It Possible

What makes this breakthrough approach to streaming analytics possible is a technology called in-memory computing (IMC). It can store huge volumes of data objects in memory that is distributed across a cluster of servers (instead of in a much slower database) and can efficiently run application code on those servers. Because IMC scales on demand, it can host millions of real-time digital twins and perform both the per-twin streaming analytics and the data aggregation extremely fast.
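As a rough illustration of how twins could be spread across a cluster, the Python sketch below assigns each device to a server by hashing its identifier. This is an assumption made for illustration, not a description of how any specific IMC product places data. The `owner_of` function and the server names are hypothetical, and simple modulo hashing like this would move many twins whenever the cluster size changes, so a real system would likely use a more refined scheme.

```python
import hashlib
from collections import Counter
from typing import List


def owner_of(device_id: str, servers: List[str]) -> str:
    """Pick the server that would hold this device's twin (illustrative only)."""
    # A stable hash keeps the mapping identical across processes and restarts.
    digest = hashlib.sha256(device_id.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


servers = ["server-a", "server-b", "server-c"]

# Place 1,000 hypothetical twins and see how evenly they spread.
placement = {f"watch-{i}": owner_of(f"watch-{i}", servers) for i in range(1000)}
load = Counter(placement.values())
```

Because every message from a given watch hashes to the same server, that watch's twin and its contextual data can stay together in one server's memory, and each server only has to run the analytics code for the twins it holds.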

The combination of this innovative streaming analytics approach and IMC technology enables developers to tackle new problems, and it gives live systems an unprecedented level of situational awareness. That may be just what we need to keep an eye on all those intelligent devices that surround us.

About the Author

Dr. William L. Bain is the founder and CEO of ScaleOut Software. In his 40-year career, Bain has focused on parallel computing and has contributed to advancements at Bell Labs Research, Intel, and Microsoft. He has founded four software companies and holds several patents in computer architecture and distributed computing. You can reach the author via email, Twitter, or LinkedIn.