5 Reasons Why the Presidential Election Defies Social Media Analysis

As the U.S. Presidential campaign heats up, examining Twitter posts shows the limitations of sentiment analysis.

- By Fern Halper

- September 7, 2016

If you’re like me, you’ve been following the 2016 presidential election from debates to convention to nomination to full-bore campaigning. Trump has been garnering attention for his statements on social media; he built a huge Twitter following as a reality TV host, and many believe he is using his social media know-how to help him in the election. Clinton also has a huge Twitter following, although she doesn’t necessarily do her own tweeting. Her campaign notes that tweets written by Hillary herself are signed “–H.”

Over the last month, Trump has received negative press about his trip to Mexico and his proposed wall, his flip-flopping on immigration reform, his “what have you got to lose” speech to African-American voters, and his possible hiring of Roger Ailes as an advisor. Clinton, on the other hand, is still getting push-back on her email-server issues and alleged improprieties involving the Clinton Foundation. Trump has been insinuating that Clinton is somehow ill and not physically fit for the presidency, while Clinton continues to question Trump’s judgment and mental fitness for the office.

How is this playing out in social media? In particular, which candidate is garnering more negative sentiment than the other from the public? What are these citizens concerned about?

To find out more, I downloaded a week’s worth of tweets about Trump and Clinton using twdocs in order to perform sentiment analysis. My hypothesis was that the tweets would be more negative about Clinton because Trump has given others license to say whatever they please, just as he does. My plan was to track sentiment from now until the election to see how people were reacting to the two campaigns and how that reaction played out in the general election.

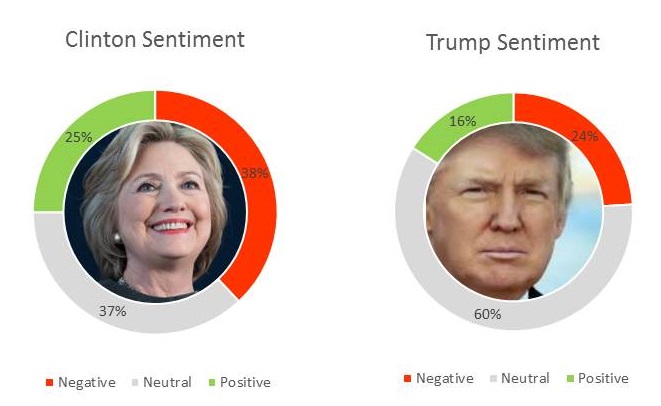

After downloading the tweets and completing the analysis with a few sentiment engines, I uncovered the following results:

Week ending 9/6/16

On the surface, my hypothesis seemed partly correct. The share of negative tweets about Clinton (38 percent) is substantially higher than the share about Trump (24 percent). However, the share of positive tweets about Clinton (25 percent) is also higher than the share about Trump (16 percent).

Not one to take any analysis at face value, I started to look at the tweets and how they had been classified. Sentiment analysis engines are only directionally correct at best. In other words, the best you can hope for is to classify text properly in about 70 percent of the cases. As I looked through these tweets, however, it was obvious that much more than sentiment analysis would be needed in this election to understand the voters. Here are five reasons why.

1. It is hard to analyze lies. We know that social media spreads lies. It is easy to write a lie, and lies that are repeated somehow take on the patina of truth. Yet a sentiment analysis tool is not designed to detect lies; it looks only for positive, negative, and neutral sentiment.

For example, although the claims about Clinton’s health have not been substantiated, that doesn’t stop people from tweeting about them, as in: “#HillaryClinton supporters your candidate just admitted she has memory loss due to brain damage #CNNSOTU”. This tweet was classified as neutral.

What do you do with these statements? How do you classify “It’s a lie!”? It is neither positive nor negative.

2. It is hard to analyze sarcasm. A known weakness of sentiment analysis is that it doesn’t handle sarcasm or irony well. For example, the tweet “Giving #DonaldTrump access to classified intelligence is like giving an arsonist a case of lighters...Putin will be clued in by tonight!” was rated neutral by the sentiment analysis engine that, in my view, did better than most at categorizing statements.

Part of this boils down to the fact that it is hard to analyze hatred. Reading through these tweets, I was struck by the raw hatred in many of them. Hashtags such as #FinalSolution, #BlackProblem, #ClintonCrimeFamily, #BuildtheWall, and #CrookedHillary don’t help.

3. It is hard to analyze sexism. Although the media rarely talks openly about it (aside from the discussion of Trump calling Rosie O’Donnell a pig, his comments about Megyn Kelly, and his association with Ailes), there is an undercurrent of sexism running through this election. For instance, “Hillary Clinton is untouchable she can do anything she wants the police wouldn't dare throw that bitch in jail” or “What do you call a woman who accepts gifts of large sums of money in order to ‘spend time’ with her? Madam Secretary! #HillaryClinton”.

It would be helpful if the analysis classified these tweets as “sexist,” but it doesn’t.

4. It is hard to analyze racism and fascism. Many Trump supporters want Muslims out of the United States and want to build a wall to keep non-whites out of the country, and it now seems okay to tweet about it, and more. For instance, one tweet read, “RT Are you hopeful that #DonaldTrump will provide a #FinalSolution? You're allowed to speak honestly and openly.”

It would be helpful if the analysis classified this tweet as “racist,” but it doesn’t.

5. It is hard to analyze homophobia. Along with the sexism and racism comes homophobia. When is it okay to tweet: “@HillaryClinton @realDonaldTrump And Hillary calls women liars. Hillary pays her female employees less than males. Hillary is a dyke. #FACTS?” or even “Donald Trump is the reason ‘the gays’ can finally get married.”

It would be helpful if the analysis classified such tweets as “homophobic,” but, you guessed it, it doesn’t.
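To see why lies and sarcasm (reasons 1 and 2) slip through, consider a toy lexicon-based scorer. This is a minimal sketch, assuming a small hypothetical word list; it is not any of the engines used in this analysis, which are more sophisticated. Because it only counts sentiment-bearing words, the health rumor and the sarcastic arsonist tweet quoted above contain none of them and come out neutral, while “It’s a lie!” is scored negative no matter what it refers to or whether it is true.

```python
import re

# Hypothetical toy lexicons for illustration only; real engines use far
# larger vocabularies, weights, and context rules.
POSITIVE = {"good", "great", "love", "win", "best", "honest", "strong"}
NEGATIVE = {"bad", "hate", "terrible", "lie", "liar", "crooked", "weak", "worst"}


def lexicon_sentiment(text: str) -> str:
    """Label text positive/negative/neutral by counting lexicon hits."""
    # Lowercase and split into word tokens; hashtags lose their '#'.
    words = re.findall(r"[a-z']+", text.lower())
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


if __name__ == "__main__":
    sarcasm = ("Giving #DonaldTrump access to classified intelligence is like giving "
               "an arsonist a case of lighters...Putin will be clued in by tonight!")
    print(lexicon_sentiment(sarcasm))        # neutral: no lexicon words present
    print(lexicon_sentiment("It's a lie!"))  # negative: "lie" is a lexicon word
```

The scorer has no notion of truth, target, or irony, which is the gap the five reasons describe.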

Defying Simple Categorization

Even if the analysis couldn’t distinguish sexism, racism, fascism, or homophobia, it would help simply to classify all such tweets as “intolerance.”

The reality is that much of what people are tweeting about in this election may defy simple positive, negative, and neutral classification. People are often not tweeting about the issues; rather, a good part of the “debate” on social media is about long-held beliefs and opinions that this election is helping to bring into the open. Given that studies have found that positive sentiments are more contagious than negative sentiments and emotions on Twitter, this is even more concerning.

What this election needs is more talk about the issues and less mudslinging. What this analysis needs is classification by themes and concepts that capture not only the issues but also emotion, long-held beliefs, and opinions. It would be interesting to conduct a deeper analysis (not just a week’s worth) of what the Twitter universe says about us as people and voters.
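Classification by theme can sit alongside a polarity label rather than replace it. The sketch below is a minimal keyword-based tagger, assuming a hypothetical theme lexicon drawn from the issues mentioned earlier in this article; commercial text analytics tools use much richer concept extraction, so treat this only as an illustration of attaching several theme labels to one tweet.

```python
import re

# Hypothetical theme lexicon built from issues named in this article;
# a real system would learn or curate far more concepts and phrases.
THEMES = {
    "email server": {"email", "emails", "server"},
    "clinton foundation": {"foundation"},
    "immigration": {"wall", "immigration", "mexico", "buildthewall"},
    "health": {"health", "ill", "sick", "memory"},
}


def tag_themes(text: str) -> set[str]:
    """Return every theme whose keywords appear in the text (multi-label)."""
    # Lowercase word tokens; hashtags such as #BuildtheWall become 'buildthewall'.
    words = set(re.findall(r"[a-z']+", text.lower()))
    return {theme for theme, keys in THEMES.items() if words & keys}


if __name__ == "__main__":
    print(tag_themes("More questions about the email server and the Foundation"))
```

A tweet can carry several themes at once, which a single positive/negative/neutral label cannot express.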

Some text analytics vendors have the capability to get at this level of analysis, and some are trying to move in this direction. My offer to these vendors: if you’re willing to accept my challenge, loan me your software (or we can work together) and I’ll perform the weekly analysis! We’ll publish the results right here on Upside.

About the Author

Fern Halper, Ph.D., is well known in the analytics community, having published hundreds of articles, research reports, speeches, webinars, and more on data mining and information technology over the past 20 years. Halper is also co-author of several “Dummies” books on cloud computing, hybrid cloud, and big data. She is VP and senior research director, advanced analytics at TDWI Research, focusing on predictive analytics, social media analysis, text analytics, cloud computing, and “big data” analytics approaches. She has been a partner at industry analyst firm Hurwitz & Associates and a lead analyst for Bell Labs. Her Ph.D. is from Texas A&M University. You can reach her on LinkedIn at linkedin.com/in/fbhalper.