Q&A: Big Data Calls for New Architecture, Approaches

Depending on its size, scale, velocity, and complexity, big data calls for new approaches, including rethinking standard architecture frameworks.

- By Linda L. Briggs

- October 24, 2012

Earlier technologies made it cost-prohibitive to store data at its most granular level, explains HP's Lars Wriedt. In the era of big data, modern technology is changing that. However, as he discusses in this interview, big data brings challenges that call for fresh approaches, including new frameworks with cost-effective scaling capacity for increased data volumes.

Wriedt, who focuses on big data as part of HP's information management and analytics practice in Europe, has 17 years of experience delivering business intelligence solutions to large enterprises in the finance, retail, and media industries. His expertise ranges from established to emerging business intelligence technologies, including scale-out massively parallel processing (MPP) and in-memory and columnar databases.

BI This Week: At a recent TDWI Webinar with Colin White, you spoke on the topic of emerging architectures for big data analytics. First, how does HP define big data?

Lars Wriedt: "Big data" is data that cannot be managed in a practical way by existing business intelligence (BI) infrastructure. The nature of the challenge depends on the technology already in use, the new big data sources, how the data is to be used and integrated, and the use case.

Based on the use case and the big data sources in question, the challenges fall within four dimensions: volume, velocity, variety, and complexity. Depending on which dimension or dimensions are involved, new technology could be needed in areas such as data acquisition, analysis, or reporting. The situation can require one solution or a combination of them, leading to upgrades or redesign of existing infrastructure and processes, or to the integration of new tools with them.

How does big data change the standard architecture framework?

Most often, big data does not fit neatly into rows and columns the way traditional data does. It often takes the form of human language, rich media, machine logs, or events. Existing database systems must be extended with new infrastructure and tools that can support these types of data.

With previous technologies, it was cost-prohibitive to store data in its most detailed, granular, and complete fashion. Modern technology makes it feasible to extend the current infrastructure to manage more granular, detailed data and provide more accurate analysis or reporting. New frameworks must include cost-effective scaling capacity for increased data volumes.

Some use cases involve analyzing machine-generated events in real time. Moving from a traditional batch-oriented model to real-time data streams requires new technology and possibly a redesign of parts of the infrastructure.
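To make the batch-versus-stream contrast concrete, here is a minimal Python sketch. The `Event` record, the 60-second tumbling window, and all function and class names are hypothetical illustrations, not part of any product: the batch function needs the complete data set before it can aggregate, while the streaming class updates its per-window counts as each event arrives.

```python
from collections import Counter, defaultdict
from typing import Iterable, NamedTuple


class Event(NamedTuple):
    """Hypothetical machine-generated event."""
    timestamp: int  # seconds since epoch
    source: str     # e.g. a machine or sensor ID


def batch_counts(events: Iterable[Event]) -> Counter:
    """Batch model: aggregate the complete, already-collected data set in one pass."""
    return Counter(e.source for e in events)


class StreamingCounter:
    """Streaming model: keep running per-window counts, updated event by event."""

    def __init__(self, window_seconds: int = 60) -> None:
        self.window_seconds = window_seconds  # hypothetical tumbling-window size
        self.windows = defaultdict(Counter)   # window start -> counts per source

    def on_event(self, event: Event) -> None:
        # Assign the event to a fixed, non-overlapping (tumbling) window.
        window_start = event.timestamp - event.timestamp % self.window_seconds
        self.windows[window_start][event.source] += 1

    def counts_for_window(self, window_start: int) -> Counter:
        # Return a copy so callers cannot mutate the running state.
        return Counter(self.windows.get(window_start, {}))


events = [Event(0, "pump-1"), Event(10, "pump-2"), Event(65, "pump-1")]
stream = StreamingCounter(window_seconds=60)
for e in events:
    stream.on_event(e)  # each event is reflected in the counts immediately
```

In the streaming version, `stream.counts_for_window(0)` can be queried while events are still arriving, whereas `batch_counts` only produces an answer once the whole input has been collected.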

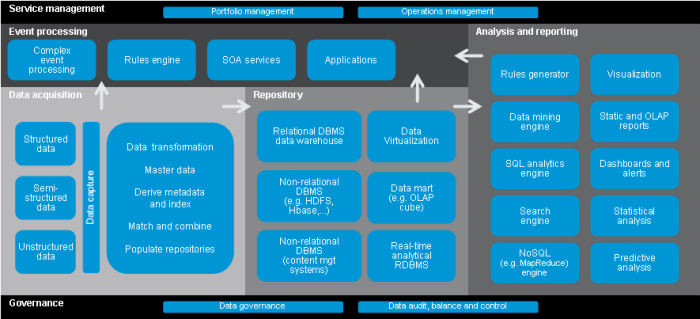

[Editor's note: The figure below shows an architecture framework, positioning the components that need to be considered for big data infrastructure architecture.]

Figure 1: Architecture framework

What new technologies are emerging around big data, and which do you see as particularly important?

For now, we see emerging technologies working with (rather than replacing) traditional database systems in data warehouses.

Today, Hadoop technology is used primarily as a repository for storing unaggregated data that spans long historical periods. This repository holds original content for reference, supports ETL processing, and is used to profile and explore new data sets. It allows teams to experiment with how to create value before deploying into production.

Newer Web-based companies, such as those in search and gaming, are using Hadoop technology for analysis and reporting in production. Beyond these companies, the momentum behind developing features and commercial models around Hadoop will lead to wider adoption, such as using Hadoop to report on weblogs or social media data.
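As an illustration of how weblog reporting maps onto the MapReduce model that Hadoop popularized, the following single-process Python sketch mimics the map, shuffle, and reduce phases to count hits per page. It is plain Python, not Hadoop's API; the simplified log-line format and the function names are assumptions for illustration. On a cluster, the framework would run many mappers and reducers in parallel across nodes and perform the shuffle itself.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(line: str) -> Iterator[Tuple[str, int]]:
    """Mapper: emit (requested_path, 1) for each well-formed log line."""
    # Assumes a simplified Common Log Format line, e.g.
    # 10.0.0.1 - - [24/Oct/2012:10:00:00 +0000] "GET /index.html HTTP/1.1" 200 512
    parts = line.split('"')
    if len(parts) < 3:
        return  # skip malformed lines
    request = parts[1].split()
    if len(request) >= 2:
        yield request[1], 1


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Group intermediate values by key (the framework does this between phases)."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Reducer: sum the counts for one page."""
    return key, sum(values)


def page_hits(lines: Iterable[str]) -> Dict[str, int]:
    """Run map -> shuffle -> reduce over the log lines."""
    pairs = (pair for line in lines for pair in map_phase(line))
    return dict(reduce_phase(k, v) for k, v in shuffle(pairs).items())
```

Because each mapper call looks at one line and each reducer call looks at one key, both phases can be split across machines without coordination, which is what lets this style of job scale with weblog volume.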

Meaning-based computing systems, which can interpret all sources of human language and rich media, are used for enterprise storage, search, and analysis across data types such as e-mail, SharePoint, document repositories, audio, and video. They can also be used for social media analysis, such as rating sentiment toward a given concept or clustering information by conceptual relevance.
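Meaning-based systems rely on far richer language models, but the basic idea of a sentiment rating for a concept can be sketched with a simple lexicon: score each post, then average the scores of the posts that mention the concept. The tiny `LEXICON`, its weights, and the brand name used below are hypothetical; this is a stand-in technique for illustration, not how any particular product works.

```python
import re
from statistics import mean
from typing import Dict, Iterable, Optional

# Hypothetical, tiny sentiment lexicon (word -> weight).
LEXICON: Dict[str, int] = {
    "love": 2, "great": 2, "good": 1,
    "slow": -1, "bad": -1, "broken": -2, "hate": -2,
}


def score_post(text: str) -> int:
    """Sum the lexicon weights of the words in one post."""
    words = re.findall(r"[a-z']+", text.lower())
    return sum(LEXICON.get(w, 0) for w in words)


def concept_sentiment(posts: Iterable[str], concept: str) -> Optional[float]:
    """Average sentiment of the posts that mention the concept (e.g. a brand name)."""
    scores = [score_post(p) for p in posts if concept.lower() in p.lower()]
    return mean(scores) if scores else None


posts = [
    "I love the new Acme phone",
    "Acme support is slow and the app is broken",
    "Nothing to do with it",
]
```

Here `concept_sentiment(posts, "Acme")` averages a score of 2 and a score of -3; tracked over time, the same kind of number can feed the brand trend measurements discussed later in the interview.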

Specialized real-time analytical relational databases process big data in real time using SQL enhanced with analytical functions. Such systems are often used to offload work from traditional data warehouse systems more cost-efficiently.
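Standard SQL window functions (`OVER (...)`) are one common form of such analytical extensions. The sketch below uses Python's built-in `sqlite3` module purely as a convenient stand-in; SQLite is not a specialized analytical database, and it needs SQLite 3.25 or later for window functions. The table name, columns, and window size are hypothetical.

```python
import sqlite3
from typing import List, Tuple


def moving_average(readings: List[Tuple[int, float]], window: int = 3) -> List[Tuple[int, float]]:
    """Trailing moving average over `window` rows, computed by a SQL window function."""
    if window < 1:
        raise ValueError("window must be at least 1")
    preceding = int(window) - 1  # validated integer, safe to embed in the SQL text
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE readings (ts INTEGER, value REAL)")
        conn.executemany("INSERT INTO readings (ts, value) VALUES (?, ?)", readings)
        query = f"""
            SELECT ts,
                   AVG(value) OVER (
                       ORDER BY ts
                       ROWS BETWEEN {preceding} PRECEDING AND CURRENT ROW
                   ) AS moving_avg
            FROM readings
            ORDER BY ts
        """
        return conn.execute(query).fetchall()
    finally:
        conn.close()
```

The point of the example is that the analysis is expressed declaratively in SQL, so the database engine, rather than application code, does the per-row windowed computation.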

To scale to big data volumes, most emerging technologies are designed around a massively parallel processing (MPP) model using cost-effective, industry-standard servers, networks, and storage. For availability, they replicate data across the servers in the MPP cluster.
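A minimal sketch of how data can be spread and replicated across an MPP cluster: each row key is hashed to a primary node, and a copy is placed on the next node in the ring, so losing any single node still leaves every row readable. This particular placement rule and the function names are assumptions for illustration; each product uses its own partitioning and replication scheme.

```python
import hashlib
from typing import Dict, List, Set


def owner_nodes(key: str, num_nodes: int, replicas: int = 2) -> List[int]:
    """Primary node chosen by hashing the key, plus (replicas - 1) neighbors for copies."""
    if not 1 <= replicas <= num_nodes:
        raise ValueError("replicas must be between 1 and num_nodes")
    # A stable hash; Python's built-in hash() of a str is salted per process.
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    primary = int.from_bytes(digest[:8], "big") % num_nodes
    return [(primary + i) % num_nodes for i in range(replicas)]


def distribute(keys: List[str], num_nodes: int, replicas: int = 2) -> Dict[int, List[str]]:
    """Place each row key on its primary node and on its replica nodes."""
    placement: Dict[int, List[str]] = {n: [] for n in range(num_nodes)}
    for key in keys:
        for node in owner_nodes(key, num_nodes, replicas):
            placement[node].append(key)
    return placement


def surviving_keys(placement: Dict[int, List[str]], failed: int) -> Set[str]:
    """Keys that are still readable when the given node fails."""
    return {k for node, ks in placement.items() if node != failed for k in ks}
```

With `replicas=2`, storage doubles, but each query can still be split across all nodes in parallel, and a single failed server does not make any data unavailable.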

Existing products are being retrofitted with functions for supporting big data projects. For example, some ETL and RDBMS products can now integrate with Hadoop, and with complex event processing engines to deal with real-time, machine-generated event data.

Cloud services are becoming available as a quick and convenient way to experiment with technology, develop applications, or explore and profile datasets for potential value.

Tools that make it easier to build statistical analysis applications (for example, with the open-source programming language R), along with new visualization methods, are completing the end-to-end architecture framework.

Can you give a use case that illustrates how a company is using analytics successfully on big data, and what they had to go through to get there?

Most use cases seem to fall into two categories:

- The analysis of a combination of unstructured human language data with structured row and column data already in data warehouse systems to improve customer interaction

- Real-time processing of machine-generated data, such as sensor readings, logs, or events, to optimize processes

Many companies are trying to understand how their brands are perceived in the marketplace using human language information from social media. This can help protect brand value by capturing issues or negative discussions early and by providing an understanding of a developing problem. That understanding aids mitigation: the company can shape early responses and monitor their effect as the situation develops. Brand measurements can also show historical trends and competitive position.

For example, a large online game provider's business model is to sell virtual artifacts to gamers. The challenge is to optimize top-line revenue. One of the techniques used is to capture game events in real time. By performing graph analysis to identify leaders, followers, and game use patterns, the company can develop targeted campaigns that can be delivered at the right time during game play. The staggering amounts of detailed machine-generated game data need to be captured and stored to support graph analysis and other advanced statistical reports.
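A minimal sketch of the leader/follower idea: treat each game event in which one player follows another (for instance, joins a quest that player started) as a directed edge, then rank players by how many distinct players follow them. The event schema and names are hypothetical, and counting distinct followers (graph in-degree) is only the simplest centrality measure; PageRank-style measures are a common, richer alternative.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple

# Hypothetical event: (follower_id, leader_id), e.g. "player A joined a quest
# that player B started". Real game telemetry would need its own mapping.
FollowEvent = Tuple[str, str]


def build_follow_graph(events: Iterable[FollowEvent]) -> Dict[str, Set[str]]:
    """Map each player to the set of distinct players who followed them."""
    followers: Dict[str, Set[str]] = defaultdict(set)
    for follower, leader in events:
        if follower != leader:  # ignore self-loops
            followers[leader].add(follower)
    return followers


def top_leaders(events: Iterable[FollowEvent], k: int = 3) -> List[Tuple[str, int]]:
    """Rank players by number of distinct followers (graph in-degree), ties by name."""
    graph = build_follow_graph(events)
    ranked = sorted(((p, len(f)) for p, f in graph.items()), key=lambda x: (-x[1], x[0]))
    return ranked[:k]
```

A campaign could then target the top-ranked leaders first, on the assumption that their followers tend to copy their in-game purchases.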

What kinds of skill sets are generally needed within a company to tackle a big data project? Does the expertise tend to come from the business side of the enterprise, or from the IT side, or both?

The most important skill is knowledge of the business model and processes of the domain in question combined with an understanding of the available data and how to analyze it.

You need people with a business background, combined with statisticians. You may also need people with experience in data governance and data modeling. If you are implementing new technologies or open source systems, you will need people who can evaluate the technology; install, configure, and operate these systems; and write applications for them. People skilled with Hadoop are in particularly short supply.

The new analytical relational database systems pose less of a challenge. They are operated with SQL plus some analytical extensions and are accessed with standard tools, so they should integrate well into what you already do.

In terms of strategic planning to accommodate big data, what recommendations can you share?

I have two key points. First, know your business case, secure sponsorship from the business unit whose processes will change, and know who is responsible for realizing the actual business results. Second, understand technology maturity (at a minimum, what will be required in skills and cost to deliver the road map to the end scenario) so you can avoid negative surprises.

When you've done your business value assessment and selected some use cases, review your existing BI solution and add the relevant emerging technology. It can be tempting to manage new unstructured data sources separately from the rest. There are differences in how you model this data, ensure its quality, and analyze and manage it, but deploying without any governance will lead to issues later. Reuse existing data, such as master data or fact data, where it fits the use case; that requires integrating your new capabilities properly with what is already in place.