Using Apache Hadoop for Operational Analytics

Hadoop has become the preferred choice for big data analytics; it can handle both structured and unstructured data at very high volumes with an unprecedented price/performance ratio. Advances in the Hadoop ecosystem now also make it suitable for real-time analytics. We explore how organizations can put Hadoop to work to improve operations and gain a competitive edge.

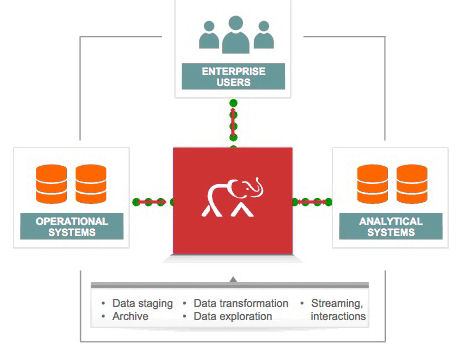

Most people associate Hadoop with batch-oriented analytical systems, but a growing number of organizations are now using Hadoop for operational analytics. As shown in Figure 1, this enables Hadoop to become a strategic platform in any organization's data architecture.

Figure 1. Advances in the Hadoop ecosystem now make it possible for this popular and traditionally batch-oriented data analytics platform to be used in real-time operational systems.

This expanded role for Hadoop has been enabled by enhancements specifically designed to accommodate the operational system requirements for high availability and high transactional performance, as well as for solid security and mission-critical data protection.

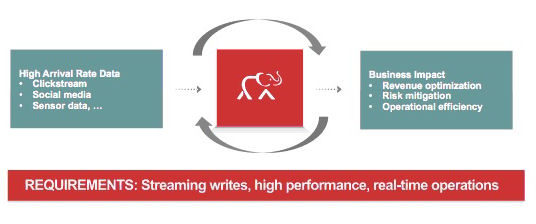

In contrast to Hadoop's role in big data analytics, operational analytics requires making many relatively small decisions in real time. The operational data also often arrives in continuous real-time streams, and the analytics often operate as closed-loop processes (with or without human intervention) to continuously improve results, as shown in Figure 2.

Figure 2. Hadoop can be extended to address operational analytics and impact business as it happens.

Hadoop at Work in Operational Systems

Highlighted here are a few operational applications that can benefit from Hadoop's analytical prowess. The "horizontal" applications can be found in virtually any organization; the "vertical" applications are normally found only in the specific industries identified. Neither list is meant to be exhaustive. Readers are encouraged to imagine other ways real-time analytics might improve operations in their own organization.

Horizontal Applications

Clickstream analysis: This operational application identifies and responds to customer preferences and behaviors, achieves a better understanding of how customers analyze information and make decisions, and optimizes websites and Web pages to personalize the user experience and improve customer conversion rates. By using Hadoop to ingest and analyze clickstream data in real time, one MapR user was able to increase its online customer conversion rate by 10 percent, resulting in millions of dollars in additional revenue.

Social media analysis: When a hot topic that could impact an organization's bottom line goes viral, time is of the essence. This application affords greater insight into customer behavior in social media, thereby providing the means to better influence sentiment with timely and targeted messages and offers.

Preventive maintenance: Enterprises can proactively maintain equipment and replace old parts before a costly failure occurs by continuously identifying and assessing all factors that contribute to equipment failure, including the mean time between failures (MTBF) for all component parts. Such applications can also be used to alert customers to the need for preventive maintenance in the products they own. In addition, monitoring equipment or product usage logs can help identify usage patterns or other factors that contribute to premature failures.
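As a rough illustration of the MTBF idea above, the following minimal Python sketch estimates MTBF as total operating hours divided by observed failures and flags parts that are approaching it. The `Component` fields, the sample figures, and the 80 percent threshold are assumptions made for this example; they do not come from any particular Hadoop deployment.

```python
from dataclasses import dataclass


@dataclass
class Component:
    name: str
    operating_hours: float      # total hours in service (hypothetical figure)
    failures: int               # failures observed over those hours
    hours_since_service: float  # hours since the part was last serviced


def mtbf(operating_hours: float, failures: int) -> float:
    """Mean time between failures: total operating time / number of failures."""
    if failures == 0:
        return float("inf")  # no failures observed yet, so no finite estimate
    return operating_hours / failures


def due_for_maintenance(components, threshold=0.8):
    """Names of components whose time since service has reached
    `threshold` (an assumed safety margin) times their MTBF."""
    return [
        c.name
        for c in components
        if c.hours_since_service >= threshold * mtbf(c.operating_hours, c.failures)
    ]


parts = [
    Component("pump", 12000.0, 4, 2500.0),   # MTBF 3,000 h; 2,500 h >= 2,400 h
    Component("valve", 20000.0, 2, 3000.0),  # MTBF 10,000 h; not yet due
    Component("fan", 5000.0, 0, 4000.0),     # no failures observed; not flagged
]

print(due_for_maintenance(parts))
```

In a production setting the same calculation would run continuously over equipment logs rather than over a hand-built list.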

Enhanced network security: Advanced persistent threat (APT) protection involves real-time analytics of traffic, particularly for massive volumes of event and user-activity data. Hadoop significantly increases the amount of contextual data that can be analyzed in real time, affording a more complete view of anomalous activities and other attack indicators and providing a more thorough and timely correlation to better detect intrusions as they occur.

Vertical Applications

Advertising: Enterprises can optimize advertising and promotions by measuring effectiveness and adjusting campaign tactics in real time while enabling better ad targeting, personalization, and placement. This application can also be used on the back end to get better results in ad auctions with better tailoring and targeting to the advertisers. With its Hadoop cluster, the Rubicon Project has been able to handle 100 billion ad auctions per day, three times more trades than NASDAQ and 15 times more transactions than Visa handles daily.

Retail: According to a 2012 McKinsey study, Amazon's sophisticated recommendation engines deliver over 35 percent of the company's online sales by suggesting potentially desirable products in real time. This powerful operational application is often used to improve the online shopping experience and increase customer loyalty. One large retail chain was able to generate a 1,000x return on its Hadoop investment by retargeting consumers leaving its website or abandoning a shopping cart.

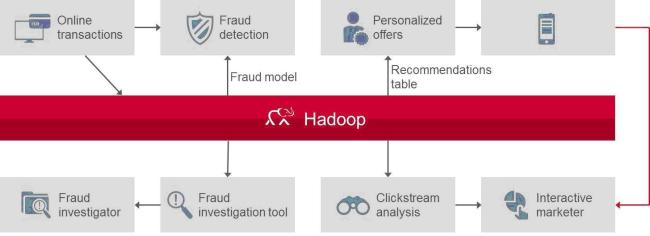

Financial services: Banks and other financial firms constantly face the threat of fraud. As with advanced persistent threat protection, detecting fraud requires real-time analysis of anomalous activities that deviate from known patterns, both the patterns of individual customers and those derived from modeling and simulations.
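One simple way to picture "deviation from a known pattern" is a z-score test against a customer's own transaction history. The sketch below is only an illustration under that assumption. The function name, the sample amounts, and the threshold of three standard deviations are hypothetical, and real fraud models are considerably richer.

```python
from statistics import mean, stdev


def is_anomalous(history, amount, z_threshold=3.0):
    """Flag `amount` if it lies more than `z_threshold` sample standard
    deviations from the mean of the customer's past amounts."""
    if len(history) < 2:
        return False  # too little history to establish a pattern
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return amount != mu  # every past amount identical; any change stands out
    return abs(amount - mu) / sigma > z_threshold


# Hypothetical past purchase amounts for one customer
history = [42.0, 38.5, 51.0, 45.25, 40.0]

print(is_anomalous(history, 47.0))   # close to the usual range
print(is_anomalous(history, 900.0))  # far outside the usual range
```

The per-customer history plays the role of the existing dataset, while each incoming transaction is scored as it arrives.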

Manufacturing: By identifying and correcting problems as early as possible in the manufacturing process, manufacturers can increase both product yield and reliability. Such an early-warning system requires an analytics engine that can correlate continuous measurements and other disparate data in real time and apply predictive modeling techniques to correct problems, reduce rework and scrap expenses, optimize manufacturing processes, and improve asset utilization and worker productivity.

Transportation: This application calculates the optimal routes by considering all pertinent conditions (including current vehicle locations, traffic, and weather) and analyzing all available options. Optimizing routes and other aspects of logistics reduces personnel costs, fuel costs, and vehicle wear and tear, and improves customer satisfaction by ensuring more on-time deliveries.

Utilities: By identifying which equipment or appliances are being used at what times, electric utilities can make recommendations to customers about how to improve efficiency and/or avoid use during periods of peak consumption (when rates are also at their peak and demand response events are activated to avoid rolling brownouts or blackouts). Understanding usage patterns at a granular level also helps utilities be smarter about how they purchase and trade electricity.

Figure 3. Multiple operational analyses can run in the same Hadoop cluster concurrently, as shown here for an online retailer.

Laying the Operational Analytics Foundation

Applications for operational analytics normally require both a real-time data stream and an existing dataset. One cost-effective way to accommodate this is to create a data hub as part of the Hadoop cluster. Existing datasets from data warehouses and other systems can be migrated or replicated to the cluster, and ETL processes can be used to integrate additional data that might be required for the particular application.
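The pairing of a real-time stream with an existing dataset can be sketched as a lookup that enriches each incoming event with reference data. The minimal Python example below assumes an in-memory dictionary standing in for the data hub and invented field names (`customer_id`, `segment`, `page`). It illustrates the pattern only and is not a Hadoop API.

```python
def enrich(events, customers):
    """Yield each streamed event merged with the matching customer's segment.

    `events` stands in for the real-time stream and `customers` for the
    existing dataset replicated into the data hub (both hypothetical).
    """
    for event in events:
        profile = customers.get(event["customer_id"], {})
        yield {**event, "segment": profile.get("segment", "unknown")}


# Existing dataset, e.g. replicated from a data warehouse
customers = {
    "c1": {"segment": "loyal"},
    "c2": {"segment": "new"},
}

# Incoming events, e.g. clickstream records
stream = [
    {"customer_id": "c1", "page": "/cart"},
    {"customer_id": "c9", "page": "/home"},  # no matching customer record
]

enriched = list(enrich(stream, customers))
for record in enriched:
    print(record)
```

Writing `enrich` as a generator means events are processed one at a time as they arrive, which mirrors how a streaming job would consume them.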

The Hadoop data hub optimizes storage and ETL processing from an existing enterprise data warehouse and can optionally unite or integrate existing data silos. Hadoop is particularly adept at performing the complex transformations that might be needed to integrate multiple and disparate datasets. Hadoop clusters also scale affordably to accelerate performance, handle growth, and/or support new applications, and can be used for exporting archives and snapshots to satisfy separate needs for data retention and offline analyses.

Creating a data hub in a small cluster is a great way to explore Hadoop's potential with one or more pilot operational applications. A variety of resources, including training and professional services, are available to help achieve better results faster.

Jack Norris, chief marketing officer at MapR Technologies, has over 20 years of enterprise software marketing experience. Jack's broad experience includes launching and establishing analytic, virtualization, and storage companies and leading marketing and business development for an early-stage cloud storage software provider. Jack has also held senior executive roles with EMC, Rainfinity, Brio Technology, SQRIBE, and Bain and Company. Jack earned an MBA from UCLA Anderson and a BA in economics with honors and distinction from Stanford University.