How (and Why) Hadoop is Changing the Data Warehousing Paradigm

Hadoop will not replace relational databases or traditional data warehouse platforms, but its superior price/performance ratio can help organizations lower costs while maintaining their existing applications and reporting infrastructure. How should your enterprise get started?

By Jack Norris, Chief Marketing Officer, MapR Technologies

The emergence of new data sources and the need now to analyze virtually everything, including unstructured data and live event streams, has led many organizations to a startling conclusion: a single enterprise data warehousing platform can no longer handle the growing breadth and depth of analytical workloads. Being purpose-built for big data analytics, Hadoop is now becoming a strategic addition to the data warehousing environment, where it is able to fulfill several roles.

Why Hadoop (and Why Now)

Organizations across all industries are confronting the same challenge: data is arriving faster than existing data warehousing platforms are able to absorb and analyze it. The migration to online channels, for example, is driving unprecedented volumes of transaction and clickstream data, which are, in turn, driving up the cost of data warehouses, ETL processing, and analytics.

Compounding the challenge is that much of this new data is unstructured. Many businesses, for example, now want to analyze more complex high-value data types (such as clickstream and social media data, as well as un-modeled, multi-structured data) to gain new insights. The problem is, these new data types do not fit the existing massively parallel processing model that was designed for structured data in most data warehouses.

The cost to scale traditional data warehousing technologies is high and eventually becomes prohibitive. Even if the cost could be justified, the performance would be insufficient to accommodate today's growing volume, velocity, and variety of data. Something more scalable and cost-effective is needed, and Hadoop satisfies both of these needs.

Hadoop is a complete, open-source ecosystem for capturing, organizing, storing, searching, sharing, analyzing, visualizing, and otherwise processing disparate data sources (structured, semi-structured, and unstructured) in a cluster of commodity computers. This architecture gives Hadoop clusters incremental and virtually unlimited scalability -- from a few to a few thousand servers, each offering local storage and computation.

Hadoop's ability to store and analyze large data sets in parallel on a large cluster of computers yields exceptional performance, while the use of commodity hardware results in a remarkably low cost. In fact, Hadoop clusters often cost 50 to 100 times less on a per-terabyte basis than today's typical data warehouse.

With such an impressive price/performance ratio, it should come as no surprise that Hadoop is changing the data warehousing paradigm.

Hadoop's Role in the New Data Warehousing Paradigm

Hadoop's role in data warehousing is evolving rapidly. Initially, Hadoop was used as a transitory platform for extract, transform, and load (ETL) processing. In this role, Hadoop is used to offload processing and transformations performed in the data warehouse. This replaces an ELT (extract, load, and transform) process that required loading data into the data warehouse as a means to perform complex and large-scale transformations. With Hadoop, data is extracted and loaded into the Hadoop cluster where it can then be transformed, potentially in near-real time, with the results loaded into the data warehouse for further analysis.

In all fairness, ELT processes began as a way of taking advantage of the parallel query processing available in the data warehouse platform. Offloading transformation processing to Hadoop frees up considerable capacity in the data warehouse, thereby postponing or avoiding an expensive expansion or upgrade to accommodate the relentless data deluge.

Hadoop has a role to play in the "front end" of performing transformation processing as well as in the "back end" of offloading data from a data warehouse. With virtually unlimited scalability at a per-terabyte cost that is more than 50 times less than traditional data warehouses, Hadoop is quite well-suited for data archiving. Because Hadoop can perform analytics on the archived data, it is necessary to move only the specific result sets to the data warehouse (and not the full, large set of raw data) for further analysis.

Appfluent, a data usage analytics provider calls this the "Active Archive" -- an oxymoron that accurately reflects the value-added potential of using Hadoop in today's data warehousing environment. They have found that for many companies, about 85 percent of their tables go unused, and that in the active tables, up to 50 percent of the columns go unused. The combination of eliminating "dead data" at the ETL stage and relocating "dormant data" to a low-cost Hadoop Active Archive can be considerable, resulting in truly extraordinary savings.

Although able to provide superior price/performance ratio in both the front and back ends of a data warehouse, Hadoop's best role may well be as an end in and of itself. This is particularly true given how much Hadoop has evolved since its early days of batch-oriented analysis of Web content for search engines.

Consider the inclusion of HBase, for example, in the Hadoop ecosystem. HBase is a non-relational, NoSQL database that sits atop the Hadoop Distributed File System (HDFS). HBase applications have several advantages in certain distributions, including the ability to achieve high performance and consistently low latency for database operations.

Of course, Hadoop's original MapReduce framework -- purpose-built for large-scale parallel processing -- is also eminently suitable for data analytics in a data warehouse. In fact, MapReduce is fully capable of everything from complex analyses of structured data to exploratory analyses of un-modeled, multi-structured data.

An exploratory analysis, for example, could derive structure from unstructured data, enabling the data to be loaded into HBase, Hive, or the existing data warehouse for further analysis. Such "pre-processing" is so effective and cost-effective that a growing number of ETL processes are being re-written as MapReduce jobs. These efforts are often assisted by Hive's ability to convert ETL-generated SQL transformations into MapReduce jobs.

Although these MapReduce conversions work well, performance can be improved by re-writing the intermediate shuffle phase that occurs after the Map and before the Reduce functions. Optimizing the shuffle benefits the sorting, aggregation, hashing, pattern-matching and other processes that are integral to ETL/ELT.

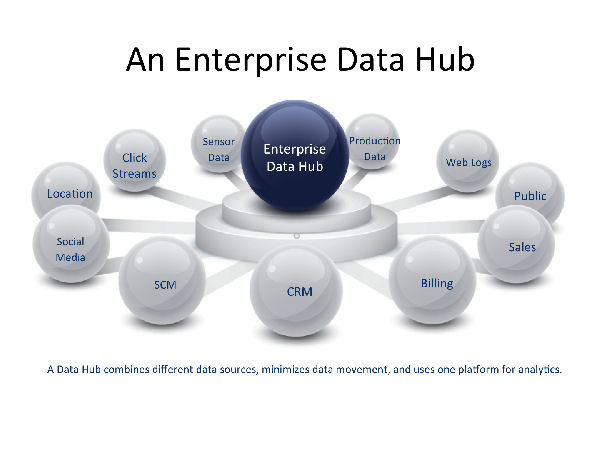

Because it is quite common with MapReduce to have the output of one job become the input for another, Hadoop effectively makes ETL integral to, and seamless with, data analytics and archival processing. It is this beginning-to-end role in data warehousing that has given impetus to what is Hadoop's ultimate role as an enterprise data management hub in a multi-platform data analytics environment. Indeed, it is almost as if Hadoop is destined to fulfill this role based on its versatility, scalability, compatibility, and affordability.

Although Hadoop appears perfectly suited for use as an enterprise data management hub, there is (as always!) a caveat: some Hadoop distributions and/or configurations lack "enterprise-class" capabilities. As a hub, the Hadoop cluster must offer mission-critical high availability and robust data protection. The former can be achieved by eliminating any single points of failure, the latter by supporting both snapshots for point-in-time data recovery and remote mirroring for disaster recovery.

Conclusion

The data deluge -- with its three equally-challenging dimensions of variety, volume, and velocity -- has made it impossible for any single platform to meet all of an organization's data warehousing needs. Hadoop will not replace relational databases or traditional data warehouse platforms, but its superior price/performance ratio will give organizations an option to lower costs while maintaining their existing applications and reporting infrastructure.

So get started with Hadoop at the front end with ETL, at the back end with an Active Archive, or get started in-between by supplementing existing technologies with Hadoop's parallel processing prowess for both structured and unstructured data -- depending on your greatest need. For those still reluctant to make the investment at this time, consider getting started in in the cloud, where Hadoop is now available as an "on-demand" service.

However your organization gets started, be prepared to become a believer in the new multi-platform data warehousing paradigm, in general, and in Hadoop as a potential and powerful enterprise data management hub.

Jack Norris is the chief marketing officer of MapR Technologies and leads the company's worldwide marketing efforts. Jack has over 20 years of enterprise software marketing and product management experience in defining and delivering analytics, storage, and information delivery products. Jack has also held senior executive roles with EMC, Rainfinity, Brio Technology, SQRIBE, and Bain and Company. Jack earned an MBA from UCLA Anderson and a BA in economics with honors and distinction from Stanford University. You can contact the author at [email protected].