View online: tdwi.org/flashpoint

November 6, 2014

ANNOUNCEMENTS

New: 3 Interesting Results from the Big Data Maturity Assessment

CONTENTS

Your Data Analytics Project Team: 5 Perspectives You Need to Deliver Value

The Modern Data Warehouse—How Big Data Impacts Analytics Architecture

2014 TDWI BI Benchmark Report: Staffing, Development, and Usage

Mistake: Forgetting about Self Service

See what's current in TDWI Education, Events, Webinars, and Marketplace

Your Data Analytics Project Team: 5 Perspectives You Need to Deliver Value

Tony Rathburn

The Modeling Agency

Topics:

Program Management

As analytics becomes increasingly important to organizations, the role of the data scientist has emerged, an oversimplified job title if ever there was one. No individual possesses the complete set of skills needed for even one significant project, let alone for the broad set of analytics opportunities within an enterprise.

Organizations that are successful in adopting analytics-based decision-making processes instead create data analytics project teams that bring together the skill sets necessary to complete projects. These teams represent five distinct perspectives on data analytics: project sponsor, domain, end user, IT, and quantitative.

The Project Sponsor Perspective

The role of project sponsor on a project team is to ensure that the project is defined and developed to deliver value to the organization by enhancing the decision-making process under analysis. In a modern organization, corporate-level strategy encompasses a complex set of often-conflicting objectives and performance metrics.

Data analytics is one of many ways to enhance business performance. An effective project sponsor focuses on identifying opportunities where data analytics has the potential to deliver both business value and a significant return on investment.

The Domain Perspective

Domain expertise is highly specialized and nuanced. The true domain experts in an organization have often immersed themselves in the specific decision process under review for many years.

The data utilized to develop our mathematical formulas provides a complex set of examples for our algorithms to learn from. It is critical that our domain experts participate in the development of our training data sets by capturing the true business nature of the relationships being evaluated.

The specification of these behaviors, with all of their complexities and nuances, is what our algorithms learn from. If they are not represented appropriately, our algorithms develop sophisticated solutions to the wrong problems and fail to deliver business value.

The End-User Perspective

The implementation environment evolves rapidly. The end-user perspective is often significantly different from that of the project sponsor and domain expert.

In modern organizations, the tactical implementation issues of a decision-making process are often as critical as the underlying logic captured within the process. This perspective is further complicated by the need for agile evolution of the implementation process to meet the demands of a rapidly changing external environment. Our analysis must actively incorporate the current implementation perspective and seamlessly integrate into day-to-day operations.

The IT Perspective

Modern IT infrastructures are hugely complex environments and are becoming more so.

The strategic and tactical issues facing IT departments are staggering. As our need to capture, organize, cleanse, transform, and deliver an exponentially growing amount of data becomes increasingly critical, the organization’s IT infrastructure becomes increasingly complex and highly specialized.

The set of skills required to develop and maintain these environments is diverse and requires the development of a team of highly qualified staff.

Our project teams must include the IT perspective both as a source of the data that feeds our analysis and as the environment that our completed projects will function within.

The Quantitative Perspective

Predictive modeling doesn’t give us the “right” answer.

Every time we run a different algorithm against a set of data, we get a different formula. This is because each algorithm optimizes performance on a specific analytic performance metric.
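The point can be seen in a toy sketch that is not from the article. The same five data points are fitted twice, once by minimizing squared error and once by minimizing absolute error. These two textbook criteria stand in for "analytic performance metrics." The data and function names are illustrative assumptions.

```python
from itertools import combinations
from typing import List, Tuple


def least_squares(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Fit y = a + b*x by minimizing the sum of squared errors (closed form)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    b = sxy / sxx
    return my - b * mx, b


def least_absolute(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Fit y = a + b*x by minimizing the sum of absolute errors.

    For a two-parameter line, some optimal fit passes through at least two
    data points, so checking every pair with distinct x values finds one.
    """
    best = None
    for (x1, y1), (x2, y2) in combinations(zip(xs, ys), 2):
        if x1 == x2:
            continue
        b = (y2 - y1) / (x2 - x1)
        a = y1 - b * x1
        err = sum(abs(y - (a + b * x)) for x, y in zip(xs, ys))
        if best is None or err < best[0]:
            best = (err, a, b)
    return best[1], best[2]


# Hypothetical data: four points on y = x plus one unusually large value.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [1.0, 2.0, 3.0, 4.0, 20.0]

a, b = least_squares(xs, ys)
print(f"squared error:  y = {a:.2f} + {b:.2f}x")   # y = -6.00 + 4.00x
a, b = least_absolute(xs, ys)
print(f"absolute error: y = {a:.2f} + {b:.2f}x")   # y = 0.00 + 1.00x
```

The same data produces two different formulas. The squared-error fit is pulled toward the single large value, while the absolute-error fit largely ignores it. Neither formula is "right" in the abstract. The better choice depends on which errors are costly to the business.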

Until software companies (a) recognize the inconsistency of the human behaviors that affect an organization's business performance metrics, (b) allow those performance metrics to be specified in the user interface, and (c) engineer software environments that train, test, and validate decision-making processes capturing both the formula and the strategy for its use, we will continue to develop increasingly complex solutions that are, at best, theoretically correct but fail to deliver value to the organization.

Our project teams must include a quantitative specialist with experience in delivering business value in real-world environments.

Wrap Up

Organizations that successfully complete significant analytics projects adopt a team approach. These teams are generally temporary, assembled for the completion of specific projects, and disbanded upon project completion.

As organizations mature in their analytics capabilities, the organizational dynamics involved in forming these project teams, and the roles of those who actively contribute the necessary skills, become increasingly sophisticated.

Tony Rathburn is a senior consultant and training director at The Modeling Agency, LLC. He is a frequent presenter at TDWI conferences and will be a speaker at TDWI Orlando, December 7–12, 2014.

The Modern Data Warehouse—How Big Data Impacts Analytics Architecture

Karen Lopez and Joseph D’Antoni

The advent of big data technologies—and associated hype—can leave data warehouse professionals and business users doubtful but hopeful about leveraging new sources and types of data. This confusion can impact a project’s ability to meet expectations. It can also polarize teams into “which one will we use” thinking.

Good architectures address the cost, benefits, and risks of every design decision. Good architectures draw upon existing skills and tools where they make sense and add new ones where needed. We architects always use the right tool for the job.

In this article, we describe the parts of the Hadoop framework that are most relevant to the data warehouse architect and developer. We sort through the reasons an organization should consider big data solutions such as Hadoop and why it’s not a battle of which (classic data warehouse or big data) is best. Both can—and should—exist together in the modern data architecture.

Learn more: Read this article by downloading the Business Intelligence Journal, Vol. 19, No. 3

Highlights of key findings from TDWI's wide variety of research

2014 TDWI BI Benchmark Report: Staffing, Development, and Usage

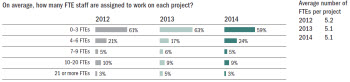

Small, nimble project teams describe the prevailing approach for a majority of organizations, according to the 2014 BI Benchmark Survey. Eighty-three percent of BI organizations have six or fewer FTEs deployed per project, up slightly from 80 percent in 2013. Meanwhile, the average number of FTEs per project is virtually unchanged from recent years, at 5.1. Organizations are clearly benefiting from the diversity of skills that many BI professionals possess, which enables relatively small team sizes. Just 12 percent of organizations average 10 or more FTEs on a given project.

Read the full report: Download the 2014 TDWI BI Benchmark Report: Organizational and Performance Metrics for Business Intelligence Teams

FlashPoint Rx prescribes a "Mistake to Avoid" for business intelligence and data warehousing professionals.

Mistake: Forgetting about Self Service

Mark Giesbrecht

One area that business users and project teams may neglect to discuss during the assessment phase is self service. Business users may not know to ask, and the project team is rarely looking for additional scope. Either way, neither party is thinking about life after the project. The role of BI practitioners is to ensure that self service is a requirement of every new solution.

Self service usually involves the creation of a semantic layer, such as a cube, that abstracts the underlying data mart into a structure that is easily understood by business users. These “power users” are then taught to use the query-writing tool that accompanies the semantic layer to create their own reports. Ideally, the self-service application is deployed at an enterprise level so the learning curve for new BI solutions is on the data, not the tool.

Fully realized, a self-service capability has the significant advantage of reducing IT’s post-project role to infrequent data modeling and transformation rule changes, leaving the more frequent query and report changes to the power users, many of whom service a second tier of casual users.

A classic misstep that short-circuits self-service capabilities is when a project team incorporates business logic into the reports. Although it initially takes less effort than embedding the logic in the data mart, the logic must be replicated to be reused, driving up complexity and TCO.
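The alternative can be shown in a toy Python sketch. In a real BI stack, the semantic layer would be a cube or a similar tool, not Python code. Here, a business rule is defined once in a shared layer, and each report reuses that definition instead of copying it. The names (`MEASURES`, `net_revenue`, `revenue_by_region`) and the net-revenue formula are hypothetical illustrations, not taken from the article.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List

Row = Dict[str, Any]  # one record from a hypothetical data mart

# Hypothetical semantic layer: each business rule is defined exactly once.
# The net-revenue formula below is an assumed example for illustration.
MEASURES: Dict[str, Callable[[Row], float]] = {
    "net_revenue": lambda r: r["gross_sales"] - r["returns"] - r["discounts"],
}


def measure(rows: List[Row], name: str) -> float:
    """Sum a named measure over rows using the shared definition."""
    rule = MEASURES[name]
    return sum(rule(r) for r in rows)


def revenue_by_region(rows: List[Row]) -> Dict[str, float]:
    """Report 1: groups rows by region and reuses the shared measure."""
    groups: Dict[str, List[Row]] = defaultdict(list)
    for r in rows:
        groups[r["region"]].append(r)
    return {region: measure(group, "net_revenue") for region, group in groups.items()}


def company_total(rows: List[Row]) -> float:
    """Report 2: same measure, same definition, no copied logic."""
    return measure(rows, "net_revenue")


sales = [
    {"region": "East", "gross_sales": 100.0, "returns": 10.0, "discounts": 5.0},
    {"region": "West", "gross_sales": 200.0, "returns": 20.0, "discounts": 0.0},
    {"region": "East", "gross_sales": 50.0, "returns": 0.0, "discounts": 5.0},
]

print(revenue_by_region(sales))  # {'East': 130.0, 'West': 180.0}
print(company_total(sales))      # 310.0
```

Changing the net-revenue rule means editing one entry in `MEASURES`, and both reports pick up the change. In the misstep described above, each report would carry its own copy of the subtraction, and every copy would need to be found and updated.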

Read the full issue: Download Ten Mistakes to Avoid When Building a Sustainable Agile BI Practice (Q3 2014)