View online: tdwi.org/flashpoint

December 6, 2012

ANNOUNCEMENTS

NEW TDWI E-Book: Self-Service BI

CONTENTS

For Big Data, Does Size Really Matter?

2012 Industry Review from the Trenches

Soft Skills for Professional Success

Barriers to Adoption of Customer Analytics

Mistake: Failing to Carefully Design a Process for Evaluation and Implementation

See what's current in TDWI Education, Events, Webinars, and Marketplace

For Big Data, Does Size Really Matter?

David Loshin

Knowledge Integrity, Inc.

Topics:

Big Data Analytics, Business Analytics

There is a lot of talk today about big data and, correspondingly, big data analytics, with a significant amount of editorial space dedicated to both. Traditionally, the realm of advanced predictive analytics has been limited to organizations willing to invest in the infrastructure and skills necessary to vet, test, and then “productionalize” the analytics program. However, recent market trends, such as the introduction of usable open source tools for developing and deploying algorithms and applications on scalable commodity platforms, have lowered the historical barriers to entry, allowing small and midsize businesses to experiment with big data technologies.

What does “big” really mean in this context? Of course, if you’re Google or Yahoo, you are probably running large-scale indexing and analysis algorithms on billions (if not more) of Web pages and other types of documents, and that would certainly qualify as “big” by anyone’s definition. However, a visit to “PoweredBy,” a growing list of sites and applications powered by Hadoop, shows some interesting results, especially considering the reported sizes of some of the applications. Among the users of this open source framework, some examples reflect less-than-massive deployments:

- “4 nodes cluster (32 cores, 1 TB)”

- “5 machine cluster (8 cores/machine, 5 TB/machine storage)”

- “3 machine cluster (4 cores, 1 TB/machine)”

None of these examples reflects a reliance on the massive data volumes ascribed to big data. Further review of this self-identified sample of Hadoop users shows that the applications are not always predictive modeling tools or recommendation engines; many are more mundane, such as analyzing Web logs and site usage, generating reportable statistics, or simply archiving large data sets. How big does data have to be to qualify as “big data”?

You might consider that the size of the data is relevant only in relation to available resources; that is, big data technology closes the resource gap for applications expected to consume data volumes that exceed an organization’s existing capacity. In other words, “big” data is data that is larger than what you can already handle, and big data tools (such as Hadoop, Hive, or HDFS) allow your organization to exploit scalability and data distribution by employing resources that are already available or readily accessible.

Again, a review of the applications on the “PoweredBy” list reveals that a number of them effectively use utility computing through cloud-based services such as Amazon’s EC2. This utility model is especially appealing when your demand for storage and computational power rises and falls with the data sets at hand and does not justify a continuous, significant capital investment in hardware.

However, data sets that are large to one organization may be puny to others, and lumping all applications under a single umbrella might do a disservice to the technology. For example, suggesting that a predictive analytics application must absorb and process 12 TB of tweets to do customer sentiment analysis is bound to overwhelm smaller organizations that are unlikely to be the subject of those messages. Yet that should not preclude a smaller organization from using specialized analytical appliances for developing and testing predictive models.

Tools such as Hadoop, NoSQL databases, graph database systems, columnar data layouts, and in-memory processing enable smaller organizations to try things they were unable to do before. The value of big data technology lies in encouraging a more liberal approach to new technologies as a source of competitive advantage: recognizing that they sometimes work well and sometimes do not, allowing some to fail, and allowing others to succeed and be moved into production.

Perhaps instead of asking about size, we should focus on whether an organization can leverage these technologies cost-effectively to create greater value. As with any innovative technique, big data needs to be incorporated into a long-term corporate information strategy. This strategy allows for controlled experimentation with newer technologies, frames success criteria for piloted activities, and streamlines the transition of successful techniques into production. Framing the evaluation of big data analytics within a strategic plan can help rationalize the evaluation and adoption of new technology in a controlled and governed way.

David Loshin, president of Knowledge Integrity, Inc., is a recognized thought leader, TDWI instructor, and expert consultant in the areas of data management and business intelligence. David has written numerous books and papers on data management. His most recent is Business Intelligence: The Savvy Manager's Guide, second edition.

2012 Industry Review from the Trenches

Steve Dine and David Crolene

Datasource Consulting

Topics:

Business Analytics, Business Intelligence, Data Management, Program Management

Each year, we reflect upon the business intelligence (BI) and data integration (DI) industry and provide a review of the noteworthy trends that we encounter in the trenches. Our review emanates from five sources: our customers, industry conferences, articles, social media, and BI software vendors. This year has proved to be an interesting one on many fronts. Here are our observations for 2012 and our expectations about 2013.

1. BI programs matured

As we reported in 2011, larger numbers of existing BI programs are continuing to mature beyond managed reports, ad hoc queries, dashboards, and OLAP. Companies are increasingly looking to derive more value from their data via technologies and capabilities such as:

- Text and social analytics

- Advanced data visualization

- Predictive and descriptive analytics

- Geospatial analysis

- Collaboration

These capabilities all require significant computing power, and consequently we have seen a corresponding rise in technologies such as analytic databases and analytic applications. We see this trend continuing into 2013 and beyond.

2. Greater focus was placed on operational BI

Over the past few years, we have observed an increased focus on operational BI, a natural evolution as corporate BI programs mature. There is considerable value in using BI to support and enhance operations within a company, but companies that succeed at this realize that operational BI is a different class of workload. It generally requires lower data latency, higher data selectivity, and greater query concurrency than traditional analytic workloads. These factors often require a different architecture from the one designed for the data warehouse.

Furthermore, support of the system may need to be executed differently. If a load on a traditional data warehouse fails, it is often acceptable to address it within hours, not minutes. For operational BI, a 24/7 support model is more often required because load failures may immediately impact the bottom line.

We see the trend toward operational BI and lower latency analytics continuing as organizations broaden their focus from enterprise data warehousing to enterprise data management.

3. BI wanted to be agile

We've always recognized the high cost of, and long lead times for, implementing BI, but customers have finally said “enough,” and BI teams have to listen. New software-as-a-service (SaaS) BI offerings and departmental solutions enable businesses to move forward without IT, putting even more pressure on BI programs to deliver results faster. Businesses are looking for new ways to implement BI and are finding that many agile practices (smaller, focused iterations; daily scrum meetings; embedded business representatives; prototyping; and integrated testing) help accelerate BI projects and bolster communication between business users and IT. Certain technologies are also helping drive this shift. Data virtualization, for example, allows a “prototype, then build” capability and doesn’t require physicalizing all the data required for analysis. However, agile was created for software development, not BI, and early adopters are learning that there are many differences. For example, the tools for automating software code testing are far more numerous and mature than those for testing ETL mappings and data warehouses.

We expect to see BI practitioners continue to refine which agile principles are effective with BI and which ones don't translate as well. We also expect to see a rise in enabling technologies, such as desktop analytic software, data virtualization, and automated testing/data validation.
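To make “automated testing/data validation” concrete, here is a minimal sketch of a source-to-target reconciliation check, one common form of ETL validation. It assumes that the source extract and the loaded target are available as lists of rows, and that comparing row counts and the total of one numeric column (here a hypothetical `amount` field) is a meaningful test for the load in question. Real validation suites typically check much more than this.

```python
from dataclasses import dataclass


@dataclass
class TableProfile:
    """Summary statistics used to reconcile a source extract with its loaded target."""
    row_count: int
    amount_total: float


def profile(rows, amount_key="amount"):
    """Compute a simple profile: row count and the sum of one numeric column."""
    return TableProfile(
        row_count=len(rows),
        amount_total=round(sum(r[amount_key] for r in rows), 2),
    )


def reconcile(source_rows, target_rows, amount_key="amount"):
    """Return a list of human-readable discrepancies (empty if the load reconciles)."""
    src = profile(source_rows, amount_key)
    tgt = profile(target_rows, amount_key)
    issues = []
    if src.row_count != tgt.row_count:
        issues.append(f"row count mismatch: source={src.row_count}, target={tgt.row_count}")
    if src.amount_total != tgt.amount_total:
        issues.append(
            f"{amount_key} total mismatch: source={src.amount_total}, target={tgt.amount_total}"
        )
    return issues


if __name__ == "__main__":
    # Hypothetical sample data: one row is dropped during the load.
    source = [{"id": 1, "amount": 10.0}, {"id": 2, "amount": 5.5}]
    target = [{"id": 1, "amount": 10.0}]
    for issue in reconcile(source, target):
        print(issue)
```

A check like this can run after every load in an automated build, giving agile BI teams some of the fast feedback that unit tests give software developers.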

4. Momentum shifted to “in-memory”

With the rise of 64-bit architectures and the ever-decreasing cost of memory, we have seen momentum shift from in-memory applications to the database (namely, data warehouse appliances). The past several years have seen the rapid ascent of in-memory analytic technologies. Tools such as Tableau, IBM TM1, Oracle TimesTen, Spotfire, and QlikView have offered the promise of near-instantaneous analytic response.

However, as memory size continually increases and the cost of memory decreases, databases such as SAP HANA, Oracle Exalytics, Netezza, Kognitio, and Teradata have been able to optimize their platforms to maintain more data in memory. This has resulted in significantly faster database operations for data residing in memory while allowing larger data sets to still reside on disk. This hybrid capability allows administrators to tune their environments to their specific analytic workloads.

Although we see the trend toward in-memory databases increasing, we also recognize that data volumes are growing faster than the cost of memory is falling. We don’t foresee all enterprise data being stored in memory anytime soon; therefore, we expect a greater focus on temperature-based storage management solutions in 2013.
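Temperature-based storage management generally means classifying data by how often it is accessed and placing it on a matching tier. Frequently used (“hot”) data goes in memory, occasionally used (“warm”) data goes on fast disk, and rarely used (“cold”) data goes on cheaper storage. The sketch below illustrates that idea under stated assumptions: the partition names, access rates, thresholds, and memory capacity are all hypothetical, and commercial products apply their own (often automatic) policies.

```python
from enum import Enum


class Tier(Enum):
    MEMORY = "memory"     # hot: frequently accessed, kept in RAM
    FAST_DISK = "fast"    # warm: occasionally accessed
    ARCHIVE = "archive"   # cold: rarely accessed, cheapest storage


# Hypothetical thresholds: accesses per day needed to qualify for each tier.
HOT_THRESHOLD = 100
WARM_THRESHOLD = 10


def classify(accesses_per_day: float) -> Tier:
    """Map an access rate to a storage tier using the thresholds above."""
    if accesses_per_day >= HOT_THRESHOLD:
        return Tier.MEMORY
    if accesses_per_day >= WARM_THRESHOLD:
        return Tier.FAST_DISK
    return Tier.ARCHIVE


def plan_placement(access_stats: dict[str, float], memory_slots: int) -> dict[str, Tier]:
    """Assign each partition a tier, demoting the least-accessed hot partitions
    to fast disk when more partitions qualify as hot than memory can hold."""
    placement = {name: classify(rate) for name, rate in access_stats.items()}
    hot = sorted(
        (name for name, tier in placement.items() if tier is Tier.MEMORY),
        key=lambda name: access_stats[name],
        reverse=True,
    )
    for name in hot[memory_slots:]:
        placement[name] = Tier.FAST_DISK
    return placement


if __name__ == "__main__":
    # Hypothetical partitions and daily access rates.
    stats = {"sales_2012_q4": 450, "sales_2012_q3": 120, "sales_2011": 15, "sales_2008": 0.5}
    for partition, tier in plan_placement(stats, memory_slots=1).items():
        print(f"{partition}: {tier.value}")
```

The memory limit is what makes this a trade-off. As data volumes outgrow affordable memory, only the hottest data earns a place there, and everything else is served from slower, cheaper tiers.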

5. Failed BI projects remained a challenge

Although the industry has learned and documented the reasons projects fail, it hasn't done much to stem the tide of failed projects. From our perspective, the overarching reason is that implementing successful BI projects is difficult, requiring a balance of strong business involvement, thorough data analysis, scalable systems and data architectures, comprehensive program and data governance, high-quality data, established standards and processes, excellent communication, and BI-focused project management.

We don't expect this trend to change unless project teams:

- Institute and enforce enterprise data management practices.

- Ensure high levels of business involvement for BI projects.

- Institute measurable, value-driven metrics for each BI project.

- Change their mindset from offshoring BI projects to “smart-sourcing” them. Rather than treating outsourcing as an all-or-nothing proposition, teams should selectively outsource the components that lend themselves to it (e.g., simple staging ETL, simple well-defined reports, operational support).

6. BI continued moving to the cloud

Cloud BI has continued to evolve and expand over the past several years, but more slowly than expected. A recent Saugatuck Technology survey concludes that only about 13 percent of enterprises worldwide are harnessing cloud-based BI solutions. However, vendors are developing technology to address traditional deficiencies and hindrances such as data security, a lack of meaningful ETL capabilities, and performance challenges (of both hardware and the network). They are also addressing concerns that “infrastructure-as-a-service” may be too complex for many BI programs to consider.

To address these concerns, we have observed the emergence of an increasing number of vendors in the SaaS space. Solutions such as MicroStrategy Cloud, Microsoft Azure, Informatica Cloud, Pervasive Cloud Integration, and GoodData have begun to address these difficulties. These products show great promise, and over the coming years we expect them to continue to mature and validate the technical and fiscal viability of the space.

A note of caution: a key challenge that still looms is the ability to integrate these solutions effectively and efficiently with existing security infrastructure (such as LDAP and Active Directory). Until that challenge is met, an additional security layer will be required to support BI in the cloud.

7. Interest in big data/Hadoop grew

Everyone has heard a lot about big data and Hadoop. This is understandable because data volumes continue to grow and technologies must innovate to support the increasing volumes in a cost-effective way. Furthermore, these technologies have been very well marketed; vendors have invested significantly in creating buzz.

However, like most emerging technologies, the capabilities are still not well understood by many BI organizations. The technologies do certain things very well but are typically not a wholesale replacement platform for a traditional data warehouse. Over the coming years, we expect companies to better understand how these technologies fit and leverage them accordingly.

Final Thoughts

This past year was certainly a year of change in the industry. We realize that a broad range of technologies, trends, and capabilities emerged in 2012. Our industry review is based on what we observed in the trenches, which doesn’t always align with what industry analysts and vendors choose to promote. Rather, our observations tend to be more practical and focus on trends and technologies that are currently making a difference on the ground. We are curious to know if you agree. Please contact us and let us know what you think at [email protected].

About Datasource Consulting

Datasource Consulting is a trusted business intelligence and data integration implementation partner and strategic advisor to Fortune 500 and midsize companies alike. We deliver innovative, end-to-end BI and data integration solutions with a focus on scalability, maintainability, and business value.

We are experts in data integration, data architecture, data modeling, data visualization, mobile analytics, analytic and operational reporting, and BI project management. We are also considered thought leaders for BI in the cloud, big data, and agile BI. Empowered with a proven BI methodology that embraces agile and lean principles, Datasource knows how to make your BI projects and programs successful.

To learn more, please visit us at datasourceconsulting.com.

Steve Dine is the managing partner at Datasource Consulting, LLC. He has extensive hands-on experience delivering and managing successful, highly scalable, and maintainable data integration and business intelligence solutions. Steve is a faculty member at TDWI and a judge for the TDWI Best Practices Awards. Follow Steve on Twitter: @steve_dine.

David Crolene is a partner at Datasource Consulting, LLC. With 15+ years of experience in business intelligence and data integration, David has worked for two leading BI vendors, managed data warehouses for an electronics giant, and consulted across a range of industries and technologies.

Soft Skills for Professional Success

Hugh J. Watson

Business intelligence managers and professionals must be technically competent. Although the skills vary, every BI position has at least some technical requirements, ranging from data modeling and SQL to business process management, Java, systems analysis and design, and Web-based application development.

It may be possible to be successful based solely on superior technical skills, but the more likely case is that you also need to master some soft skills, especially if you want to progress up the organizational ladder.

Below is a list of essential soft skills. Let’s consider each of them and how they might apply to you.

- People skills

- Find mentors

- Networking

- Build a brand

- Long-term career perspective

- Value nontechnical courses

- Understand data gathering and analysis

- Presentation skills

- Separate personal and professional lives

- Work/life balance

- Prioritizing and executing

Read the full article and more: Download Business Intelligence Journal, Vol. 17, No. 3

Highlight of key findings from TDWI's wide variety of research

Barriers to Adoption of Customer Analytics

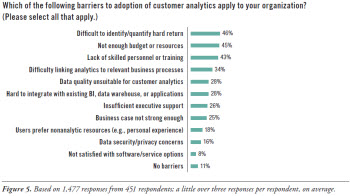

What factors are giving organizations the most difficulty in adopting and implementing customer analytics technologies and methods? TDWI Research found that difficulty in identifying and quantifying hard returns (46%) is the obstacle confronted by the largest share of respondents (see Figure 5; respondents could select multiple answers). This is followed closely by not having enough budget or resources (45%) and lack of skilled personnel or training (43%). This third factor is particularly troublesome when organizations need data scientists to develop models and algorithms for customer analytics. “Data scientists are a scarcity,” said one senior marketing director.

Read the full report: Download Customer Analytics in the Age of Social Media

FlashPoint Rx prescribes a "Mistake to Avoid" for business intelligence and data warehousing professionals.

Mistake: Failing to Carefully Design a Process for Evaluation and Implementation

John O’Brien

Evaluating emerging technologies should be an ongoing process for the BI team or IT. This is not a process that should be reinvented each time an opportunity is identified. More important, this process should be continuously improved from the lessons learned with each evaluation and implementation of an emerging technology.

Establishing a structured approach and process for evaluating and implementing an emerging technology ensures that evaluations and decisions are made consistently within a continuously improving process. A generalized approach will include resources to monitor for appropriate technologies and trends; trusted research firms and organizations offering independent reviews; a standard value and cost model designed for multi-year total valuation; key milestones in the evaluation process for setting expectations and regulating speed; and a well-defined set of evaluation criteria.
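One way to make a “standard value and cost model designed for multi-year total valuation” concrete is a discounted net-value calculation. The sketch below assumes a simple net present value (NPV) formulation. The discount rate and the yearly figures are hypothetical, and an organization’s finance team may prescribe a different model.

```python
def net_present_value(yearly_value, yearly_cost, discount_rate):
    """Discounted sum of (value - cost) for each year.

    Year 0 (the initial investment year) is not discounted; year n is divided
    by (1 + discount_rate) ** n.
    """
    if len(yearly_value) != len(yearly_cost):
        raise ValueError("value and cost series must cover the same years")
    return sum(
        (value - cost) / (1 + discount_rate) ** year
        for year, (value, cost) in enumerate(zip(yearly_value, yearly_cost))
    )


if __name__ == "__main__":
    # Hypothetical four-year figures for one candidate technology.
    value = [0, 150_000, 250_000, 250_000]    # estimated business value per year
    cost = [200_000, 50_000, 50_000, 50_000]  # licenses, hardware, staff per year
    npv = net_present_value(value, cost, discount_rate=0.08)
    print(f"Four-year NPV at 8%: {npv:,.0f}")
```

Running every candidate through the same function keeps evaluations comparable from one emerging technology to the next, which is the purpose of a standard model.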

Be cautious of industry publications, news, and blogs because many are vendor sponsored and may be misleading and not completely objective. However, not all sponsored materials are a bad thing; those that focus on early customer success stories and case studies are typically the most interesting and relatable.

Read the full issue: Download Ten Mistakes to Avoid When Adopting Emerging Technologies in BI (Q3 2012)