View online: tdwi.org/flashpoint

October 4, 2012

ANNOUNCEMENTS

NEW TDWI Checklist Report:

Operational Analytics: Balancing Business Benefits and Technology Costs

NEW TDWI Checklist Report:

Using Location Information for Geospatial Analytics

NEW TDWI E-Book:

Big Data Analytics

CONTENTS

What’s Really New about Big Data and Big Analytics?

Collaborative Business Intelligence: Socializing Team-Based Decision Making

Mistake: Failing to Articulate Value to the Business Sponsors

See what's current in TDWI Education, Events, Webinars, and Marketplace

What’s Really New about Big Data and Big Analytics?

Colin White and Claudia Imhoff

BI Research // Intelligent Solutions, Inc.

Topic:

Big Data Analytics

The current industry obsession with big data and big analytics suggests that these are radical new approaches for managing and analyzing large amounts of data. Well, not really! Organizations have always struggled with efficiently and cost-effectively handling data workloads that push the boundaries of existing hardware and software technologies. Today’s big data and big analytics solutions do, however, enable us to deploy applications that were not previously possible because the required information was not available, the costs were prohibitive, or the technology couldn’t support the extreme workloads involved.

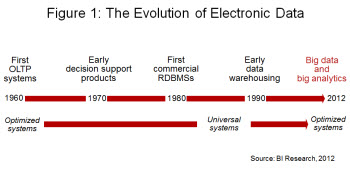

The Evolution of Extreme Workloads

The struggle to support extreme workloads can be traced back to the early 1960s (see Figure 1). In these early days of high-volume processing, performance issues were solved with custom-built systems that were optimized to support the extreme workloads involved. One of the first online transaction processing (OLTP) systems, for example, was the SABRE airline reservation system. This system was custom built by American Airlines, in conjunction with IBM, to automate flight booking, which had hitherto been a manual process. When introduced in the early 1960s, SABRE cost some $40 million (the equivalent of about $400 million today) to develop and install, and handled roughly 83,000 reservations each day. Today, SABRE operates 24 hours a day and handles in excess of 60,000 transactions per second during peak periods, using more than 8,000 servers.

The introduction of retail point-of-sale terminals and bank automated teller machines during the ’60s and ’70s continued to challenge IT in supporting OLTP workloads. As with SABRE, custom-built and optimized systems often had to be built to manage these workloads and provide the required performance.

During the 1980s, transaction processing and database management technologies improved dramatically, and by the time relational database systems matured toward the end of the decade, the need to build custom systems decreased. Organizations were able to use relational database technology to support most OLTP workloads. This helped reduce both development and maintenance costs as well as improve time to value for IT investments.

The early ’90s saw the introduction of data warehousing, which enabled organizations to move beyond basic operational reporting by providing detailed analytics about business performance. Relational database technology had improved to support both transaction and analytical processing, albeit in separate systems. In 1992, Walmart was one of the first companies to deploy a terabyte data warehouse environment. Today, the Walmart data warehouse stores several petabytes of data, and multi-terabyte data warehouses are now commonplace.

As the new century approached, Internet growth continued to add to the data mountain. More recently, increasing use of sensors and sensor networks has increased data volumes to unprecedented levels. This data growth involves not only traditional structured data, but also increasing amounts of multi-structured data from a variety of new internal and external data sources.

Today, the ability of organizations to integrate, manage, and analyze growing data volumes is a major issue, and once again IT has resorted to using customized and optimized solutions to support extreme workloads. The difference this time around is that vendors provide a number of different technologies and tools that reduce the amount of custom coding required. This is what big data and big analytics are about--enabling the implementation of analytic applications that are difficult to support using traditional “one-size-fits-all” solutions.

An important distinction this time around is that these new optimized solutions don’t replace the systems we have today; they extend them. These solutions not only enable companies to improve the information content of existing decision-making applications, but also offer the possibility of using this information to identify potential new business opportunities.

The Next Generation of Innovation

We can now support today’s extreme workloads by removing many of the technology limitations of the past. At the same time, we can now perform big data analytics more cost effectively and on entire sets of data as well as new data sources. These innovations have given new life to our BI environments by broadening their analytical capabilities with minimal expense and disruption. Now all enterprises can perform highly complex and sophisticated analytics to become the much-desired fact-based organization.

Colin White is the founder of BI Research and DataBase Associates. As an analyst, educator, and writer, he is well known for his in-depth knowledge of data management, information integration, and business intelligence technologies.

Claudia Imhoff, Ph.D., is the president of Intelligent Solutions and founder of the Boulder BI Brain Trust. She is a popular speaker and internationally recognized expert on business intelligence and its technologies and architectures.

Collaborative Business Intelligence: Socializing Team-Based Decision Making

Barry Devlin

For many of us, making decisions is a challenge; for others, it can be torture. Despite nearly half a century of work in decision support and business intelligence (BI), many businesses’ decisions look vaguely dysfunctional.

If we examine how most organizations really make important and innovative decisions, we see that most are made by teams (permanent or transitory) of people rather than by individuals. It’s high time we designed an effective approach to true decision-making support--what we might call innovative team-based decision making.

This article presents a new model that maps the path from the information cues that signal change is required, through the team interactions and implementation, and on to measurable and repeatable innovation. It is the first comprehensive framework in which team-based decision making can be understood, designed into software solutions, and implemented in highly innovative and forward-looking organizations.

Read the full article and more: Download Business Intelligence Journal, Vol. 17, No. 3

Highlights of key findings from TDWI's wide variety of research

BI Benchmark Report: Maturity

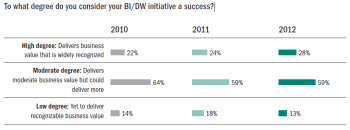

The last three years show a steady rise in the share of survey respondents who characterize their BI/DW environments as delivering a “high degree” of business value; that share reached 28 percent of organizations, a record high for this survey series. Although it’s good to see increases in the incidence of high value from BI systems, this data point also highlights room for continued improvement, which is achievable with the growing body of industry best practices, shareable over the Web, and today’s more advanced technologies for data integration, reporting, analytics, and other disciplines. At the other end, just 13 percent feel their BI systems deliver a “low degree” of business value.

Read the full report: Download 2012 TDWI BI Benchmark Report: Organizational and Performance Metrics for Business Intelligence Teams

FlashPoint Rx prescribes a "Mistake to Avoid" for business intelligence and data warehousing professionals.

Mistake: Failing to Articulate Value to the Business Sponsors

John O’Brien

Identifying and evaluating emerging BI technologies should remain the role of the BI programs that guide your company’s BI strategy and goals. The BI program must be able to clearly articulate the technology’s value to business sponsors, both for adoption and for cost justification. That means demonstrating a clear and accountable value proposition and showing that assumptions and risks are recognized and accounted for. Adopting emerging technologies can consume key resources for extended periods of time, distract other development teams, and involve user training.

Even for non-business delivery technologies such as cloud computing, virtualization, or multi-tiered storage architectures, emerging technologies offer significant value in BI delivery efficiency, agility, and lower total cost of ownership. However, introducing these technologies will also require new policies and procedures for how the BI delivery process will incorporate them. For example, multi-tiered storage is cost effective but requires the creation and definition of data-aging policies by the business for every data element managed across the tiers, with modifications to service-level agreements for performance of data access related to each data storage tier.
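To make the multi-tiered storage example concrete, a data-aging policy can be thought of as a small set of rules that map a data element's age to a storage tier and an access-time target. The sketch below is purely illustrative: the tier names, age thresholds, and service-level figures are hypothetical values chosen for the example, not recommendations, and the `TierRule` and `assign_tier` names are assumptions introduced here.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass(frozen=True)
class TierRule:
    """One rule in a data-aging policy (all values are hypothetical)."""
    tier: str                      # storage tier name
    max_age_days: Optional[int]    # upper age bound; None means no limit
    access_sla_seconds: float      # agreed target for data access time


# Hypothetical policy for a single data element, ordered youngest to oldest.
SALES_DETAIL_POLICY: List[TierRule] = [
    TierRule(tier="hot", max_age_days=90, access_sla_seconds=1.0),
    TierRule(tier="warm", max_age_days=730, access_sla_seconds=30.0),
    TierRule(tier="cold", max_age_days=None, access_sla_seconds=3600.0),
]


def assign_tier(last_used: date, today: date, policy: List[TierRule]) -> TierRule:
    """Return the first rule whose age bound covers the data's age."""
    age_days = (today - last_used).days
    for rule in policy:
        if rule.max_age_days is None or age_days <= rule.max_age_days:
            return rule
    raise ValueError("policy needs a final rule with no age limit")


if __name__ == "__main__":
    today = date(2012, 10, 4)
    for last_used in (date(2012, 9, 1), date(2012, 1, 1), date(2009, 1, 1)):
        rule = assign_tier(last_used, today, SALES_DETAIL_POLICY)
        print(last_used, rule.tier, rule.access_sla_seconds)
```

Keeping the access-time target in the same rule as the age threshold reflects the point above: each tier's aging policy and its service-level agreement have to be agreed with the business together.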

Emerging technologies fall into several broad categories: enabling new business capabilities, reducing costs or making operations more efficient, or radically changing how you do business. Diligence in articulating the technology’s value helps dispel the “technology for technology’s sake” concern that the business often has.

Read the full issue: Download Ten Mistakes to Avoid When Adopting Emerging Technologies in BI (Q3 2012)