View online: tdwi.org/flashpoint

August 7, 2014

ANNOUNCEMENTS

NEW TDWI Checklist Report

Applying Analytics with Big Data for Customer Intelligence: Seven Steps to Success

CONTENTS

From Business Intelligence to Visual Analytics: Craft a Winning Data Strategy

Prescriptive Analytics: Move from Predicting the Future to Making It Happen

BI Experts’ Perspective: Business Intelligence as a Career Choice

Problems and Opportunities for Data Warehouse Architectures

Mistake: Forgetting that People and Process Are a Big Part of Big Data Analytics

See what's current in TDWI Education, Events, Webinars, and Marketplace

From Business Intelligence to Visual Analytics: Craft a Winning Data Strategy

Stephen McDaniel and Eileen McDaniel

Strategic Analytics Consultants

Topics:

Data Management

Until recently, visual analytics was considered a niche area. Those days are quickly passing; almost every major analytics and BI vendor is either launching or developing a product focused on visual analytics.

As a data professional, you’ll face challenges in integrating these products into your existing BI infrastructure. How can you successfully implement new visual analytics tools and keep your business customers engaged and happy? Step in front of the inevitable progression to visual analytics by crafting a winning data strategy.

We suggest starting in these three areas:

1. Learn the basics of the visual analytics tools used by the business analysts in your organization. Follow the process of how a real-world project is executed. Solving a typical business problem will give you a chance to experience firsthand what users are doing. You will be surprised at how the tools change your view of the data warehouse and “proper” data structures.

We’ve had many data professionals attend our analytics workshops. Even those with years of experience in the field tell us that managing code and databases is a completely different way of thinking about data compared to analyzing an issue, which has drastically different constraints and goals. Investigating a real problem that the business is facing should help you to see many possible ways that your data stores can be adjusted to enable successful analysis.

2. Find an ally in each of your key business areas, preferably an expert analyst who can offer a viewpoint from “the other side.” Leverage these analysts for invaluable knowledge to design better data structures in the form of tables, graphs, and system maps in your data systems. This is far more effective than decoding the whole process by yourself. When building data warehouses and downstream analytic data stores, we’ve discovered that expert analysts are often excited and motivated to collaborate on improving the efficiency and value of the data sources in their analyses.

3. Commit to the reality that self-service data management with desktop spreadsheets and databases among business users is not going away. Instead, it will only continue to accelerate over the next few years. Part of this reality is driven by the fact that the appropriate data structure is often dependent on the analysis problem at hand. Another reason driving this growth is that more data streams are flowing into organizations, often at a rate that is overwhelming for analysts and data teams alike.

In our experience, when we help business users improve their data management skills, they are less likely to make mistakes or inaccurate assumptions about the data. They also better comprehend and appreciate the hard work involved in maintaining central systems.

Seize the opportunity to be more successful in your career as a data professional by understanding and incorporating the new landscape of BI and visual analytics into your data warehouse and collaborating closely with business users to establish a strong environment for analytics. Ultimately, data warehouses are about making better decisions in a timely manner, and these suggestions can help you further the utility of your data warehouse.

Stephen McDaniel is an independent data scientist and author of several books on analytic software. Eileen McDaniel, Ph.D., is author of The Accidental Analyst and director of analytic communications at Freakalytics. Both work with clients on strategic analytic projects, teach courses on analytics, are on the faculty at INFORMS, and can be reached at www.freakalytics.com.

Prescriptive Analytics: Move from Predicting the Future to Making It Happen

Troy Hiltbrand

Idaho National Laboratory

Topics:

Business Analytics

Across the BI/DW industry, predictive analytics is garnering attention from companies looking to predict what will happen next so they can proactively respond. The focus of predictive analytics is identifying the most probable outcomes based on a pattern of historical events and behaviors. The next logical step in business analytics is to determine which drivers are associated with these predictions and tweak them to achieve optimal outcomes for the business. This is where prescriptive analytics comes into play. Although it is limited in usage to early-adopter enterprises, prescriptive analytics has the potential to alter the way business is done.

Prescriptive analytics is commonly referred to as optimization. It focuses on problems where the inputs, representing real-world resources, can be configured or applied in multiple ways, resulting in different target outcomes. The output is governed by a set of constraints that define the boundaries associated with the problem. Prescriptive analytics relates to determining which configuration of resources either minimizes or maximizes the targeted outcome.

An example of prescriptive analytics is a production optimization problem. Production companies have access to a finite set of resources, including labor, capital, and raw material. Real-world constraints limit how these inputs can be combined, including the number of working hours that labor resources can work by law or policy, production floor capacity, and demand from customers. The company can apply these resources to the production process in many ways, but in the end, the target is to match demand with supply in a way that maximizes profit.

When the number of inputs is small or the constraints are simple, determining all possible combinations of resources to find an optimal solution is manageable. As more problems become driven by big data, the number of inputs to these problems grows and the number of possible solutions multiplies exponentially. It quickly reaches a state beyond an individual’s ability to manually cycle through all possible combinations to determine the optimal resource allocation, even with access to significant computing capabilities. Prescriptive analytics can help an enterprise find an optimal solution in the quickest manner possible.

Prescriptive analytics uses two major types of optimization algorithms. The first is an exact algorithm, which is guaranteed to find the definitive optimal solution. In its simplest form, it calculates all possible solutions and chooses the optimal one. Even though it provides 100 percent assurance of optimality, it is expensive in computing time, which can grow exponentially with the size of the problem, and it therefore has significant scalability limitations.
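As a rough illustration of the exhaustive form of an exact algorithm, the following Python sketch enumerates every production plan for a toy version of the production problem described earlier. All figures (two products, their profits, labor hours, floor capacity, and demand) are invented for illustration and do not come from the article.

```python
from itertools import product

# Hypothetical figures, invented for illustration only.
PROFIT = {"A": 30, "B": 50}       # profit per unit
LABOR_HOURS = {"A": 1, "B": 2}    # labor hours needed per unit
MAX_LABOR_HOURS = 40              # labor limit set by law or policy
FLOOR_CAPACITY = 30               # total units the production floor can handle
DEMAND = {"A": 25, "B": 15}       # units customers are willing to buy


def is_feasible(a, b):
    """Check the labor and floor-capacity constraints for a plan."""
    labor = LABOR_HOURS["A"] * a + LABOR_HOURS["B"] * b
    return labor <= MAX_LABOR_HOURS and a + b <= FLOOR_CAPACITY


def profit(a, b):
    return PROFIT["A"] * a + PROFIT["B"] * b


def exhaustive_search():
    """Evaluate every plan within demand and keep the most profitable feasible one."""
    best_plan, best_profit = None, float("-inf")
    for a, b in product(range(DEMAND["A"] + 1), range(DEMAND["B"] + 1)):
        if is_feasible(a, b) and profit(a, b) > best_profit:
            best_plan, best_profit = (a, b), profit(a, b)
    return best_plan, best_profit
```

With these assumed figures the search checks 26 × 16 = 416 candidate plans and returns the plan (20, 10) with a profit of 1100. Each additional product multiplies the number of candidates, which is the scalability limit described above.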

The second algorithm type is an approximation algorithm, which finds a solution that is provably close to the optimal one without evaluating every possible solution. Approximation algorithms typically run in polynomial time, so they are much faster than exhaustive exact methods, but they lose the assurance of identifying the absolute optimal solution.
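One well-known example of this trade-off, offered here as an illustration rather than something the article itself describes, is the greedy approximation for the 0/1 knapsack problem: choosing which items to fund within a fixed budget. The sketch below assumes items are given as hypothetical (cost, value) pairs with positive costs. It returns the better of the greedy selection by value per unit of cost and the single most valuable item, which is guaranteed to be worth at least half of the optimal total.

```python
def greedy_knapsack(items, capacity):
    """Greedy 1/2-approximation for 0/1 knapsack.

    items: list of (cost, value) pairs with positive cost (illustrative data).
    Returns (chosen_items, total_value).
    """
    # Items that cannot fit on their own can never be part of a solution.
    fitting = [item for item in items if item[0] <= capacity]

    # Take items in order of value per unit of cost while they still fit.
    chosen, remaining = [], capacity
    for cost, value in sorted(fitting, key=lambda it: it[1] / it[0], reverse=True):
        if cost <= remaining:
            chosen.append((cost, value))
            remaining -= cost
    greedy_value = sum(value for _, value in chosen)

    # Comparing against the best single item is what yields the 1/2 guarantee.
    best_single = max(fitting, key=lambda it: it[1], default=None)
    if best_single is not None and best_single[1] > greedy_value:
        return [best_single], best_single[1]
    return chosen, greedy_value


# Hypothetical data: the optimum here is 220 (the second and third items).
result = greedy_knapsack([(10, 60), (20, 100), (30, 120)], 50)
# result == ([(10, 60), (20, 100)], 160)
```

The greedy answer of 160 is not optimal, but it is found after one sort and one pass over the items, and it stays within the guaranteed factor of two.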

At times, there are no known exact or approximation algorithms that solve the problem. For these, a class of algorithms known as heuristics exists. Heuristics are a set of techniques that can be applied to identify a “good-enough” solution. These heuristic approaches are ideal for many situations because they can be more easily described to business stakeholders and applied to a wide range of problems. Unfortunately, unlike the exact or approximation algorithms, heuristics have a much lower level of assurance that the solution has absolute optimality. Their target is a good solution, not necessarily the best solution possible. Examples of heuristics include genetic algorithms, simulated annealing algorithms, and tabu search algorithms.

Genetic algorithms attempt to mimic biological systems when solving optimization problems. They take sets of solutions and iteratively pair them to generate new, stronger solutions that inherit attributes of the parent solutions. The solutions that end up stronger and better than the previous generation are kept; weaker solutions are discarded. Genetic algorithms are a form of “survival of the fittest,” where the solutions become stronger with each successive generation.
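A minimal sketch of one common variant of this idea follows. It assumes a hypothetical project-selection problem (the costs, values, and budget are invented), encodes each solution as a tuple of 0/1 choices, keeps the stronger half of each generation, and breeds replacements by single-point crossover with occasional mutation. The parameter values are arbitrary choices for illustration, not recommendations.

```python
import random

# Hypothetical candidate projects as (cost, value); figures are invented.
PROJECTS = [(12, 24), (7, 13), (11, 23), (8, 15), (9, 16), (6, 11), (5, 9), (14, 28)]
BUDGET = 35


def fitness(plan):
    """Total value of the selected projects, or 0 if the plan exceeds the budget."""
    cost = sum(c for (c, _), bit in zip(PROJECTS, plan) if bit)
    value = sum(v for (_, v), bit in zip(PROJECTS, plan) if bit)
    return value if cost <= BUDGET else 0


def crossover(mother, father, rng):
    """The child inherits the start of one parent and the rest of the other."""
    cut = rng.randrange(1, len(mother))
    return mother[:cut] + father[cut:]


def mutate(plan, rng, rate=0.05):
    """Flip each choice with a small probability to keep the population varied."""
    return tuple(bit ^ 1 if rng.random() < rate else bit for bit in plan)


def genetic_search(generations=60, population_size=30, seed=0):
    rng = random.Random(seed)
    population = [tuple(rng.randint(0, 1) for _ in PROJECTS)
                  for _ in range(population_size)]
    best_per_generation = []
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        best_per_generation.append(fitness(population[0]))
        survivors = population[: population_size // 2]  # weaker half is discarded
        children = []
        while len(survivors) + len(children) < population_size:
            mother, father = rng.sample(survivors, 2)
            children.append(mutate(crossover(mother, father, rng), rng))
        population = survivors + children
    best = max(population, key=fitness)
    return best, fitness(best), best_per_generation
```

Because the survivors are carried over unchanged, the best fitness recorded for each generation never decreases, which mirrors the “stronger with each successive generation” behavior described above. Nothing in the procedure guarantees that the final plan is the optimal one.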

Simulated annealing algorithms take their process from that of annealing metal, in which the metal is heated and then slowly cooled to make it stronger. The algorithms start with a random solution and jump around to neighboring solutions. At first, the jumps are large and the algorithm often accepts a worse solution to avoid getting stuck; both the size of the jumps and the willingness to accept worse solutions shrink as the solutions approach an optimal level.
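The sketch below shows simulated annealing in a common textbook form, applied to a hypothetical two-product production plan (all figures are invented). In this form the step size stays fixed, and what shrinks over time is the probability of accepting a worse plan, controlled by a "temperature" that is multiplied by a cooling factor at every step. The starting temperature, cooling factor, and step count are arbitrary illustrative choices.

```python
import math
import random


def profit(plan):
    """Profit of a hypothetical two-product plan; infeasible plans score -inf."""
    a, b = plan
    feasible = (0 <= a <= 25 and 0 <= b <= 15   # demand limits
                and a + 2 * b <= 40             # labor hours
                and a + b <= 30)                # floor capacity
    return 30 * a + 50 * b if feasible else float("-inf")


def random_neighbor(plan, rng):
    """Shift one unit of production up, down, or between the two products."""
    a, b = plan
    da, db = rng.choice([(1, 0), (-1, 0), (0, 1), (0, -1), (1, -1), (-1, 1)])
    return (a + da, b + db)


def simulated_annealing(start=(0, 0), temperature=200.0, cooling=0.995,
                        steps=5000, seed=0):
    rng = random.Random(seed)
    current = best = start
    for _ in range(steps):
        candidate = random_neighbor(current, rng)
        delta = profit(candidate) - profit(current)
        # Always accept improvements; accept a worse plan with a probability
        # that shrinks as the temperature falls. Infeasible candidates have
        # delta == -inf, so their acceptance probability is exactly 0.
        if delta >= 0 or rng.random() < math.exp(delta / temperature):
            current = candidate
        if profit(current) > profit(best):
            best = current
        temperature *= cooling
    return best, profit(best)
```

Early on, the high temperature lets the search wander through worse plans; late in the run it behaves almost like a pure improvement search. The result is a good feasible plan, with no guarantee that it is the best one.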

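Tabu search algorithms build on “local” search algorithms. A local search looks at neighboring solutions and moves to one if there is incremental improvement over the current solution. This continues until the algorithm reaches a point where the current solution is better than any of the neighboring solutions. Tabu search adds a short-term memory, the tabu list, of recently visited solutions that may not be revisited, which lets the search move past such a point instead of stopping there.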
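A minimal sketch of tabu search in its usual textbook form follows, again on a hypothetical two-product production plan with invented figures. At each step it moves to the best feasible neighbor that is not on the tabu list, even when that neighbor is worse than the current plan, and it remembers the best plan seen so far. The neighborhood, tabu list length, and iteration count are illustrative assumptions.

```python
from collections import deque


def profit(plan):
    """Profit of a hypothetical two-product plan; infeasible plans score -inf."""
    a, b = plan
    feasible = (0 <= a <= 25 and 0 <= b <= 15   # demand limits
                and a + 2 * b <= 40             # labor hours
                and a + b <= 30)                # floor capacity
    return 30 * a + 50 * b if feasible else float("-inf")


# Neighboring plans differ by one unit of one product, or by swapping one
# unit of one product for one unit of the other.
MOVES = [(1, 0), (-1, 0), (0, 1), (0, -1), (1, -1), (-1, 1)]


def tabu_search(start=(0, 0), iterations=200, tabu_size=10):
    current = best = start
    tabu = deque([start], maxlen=tabu_size)  # oldest entries drop off automatically
    for _ in range(iterations):
        neighbors = [(current[0] + da, current[1] + db) for da, db in MOVES]
        candidates = [p for p in neighbors
                      if p not in tabu and profit(p) > float("-inf")]
        if not candidates:
            break
        # Move to the best allowed neighbor, even if it is worse than the
        # current plan; the tabu list prevents stepping straight back.
        current = max(candidates, key=profit)
        tabu.append(current)
        if profit(current) > profit(best):
            best = current
    return best, profit(best)
```

With these assumed figures, a steepest-ascent search that only accepts improvements stalls at the plan (10, 15) with a profit of 1050, because every neighboring plan is worse. The tabu version accepts a temporary drop, works its way along the labor constraint, and returns (20, 10) with a profit of 1100.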

As more problems are defined in terms of choices, constraints, and outputs, and the optimization process is automated, computers can start to identify and implement solutions without the need for human intervention. This allows machines to perform complex work that was once reserved only for humans. This moves an enterprise from seeing the future to identifying ways to shape it, all through the use of prescriptive analytics.

Although prescriptive analytics is seen less often than predictive analytics in business decision making, it will be seen more in the coming years. Enterprises with limited resources are recognizing the value of implementing prescriptive analytics and are delving into the associated complexity to evolve their business analytics programs from seeing the future to influencing it. It will become a larger, more important part of business analytics programs across the industry.

Troy Hiltbrand is the deputy CIO at Idaho National Laboratory and adjunct faculty at Idaho State University.

BI Experts’ Perspective: Business Intelligence as a Career Choice

Dave Schrader, Ron Swift, and Coy Yonce

Susan Stephenson is the BI director for a national auto parts company. She has 10 years of BI experience, with the last three as director. She’s been asked to speak at a local college’s fall management information systems (MIS) banquet. The 100 or so students attending have been through courses in programming languages, databases, systems analysis and design, project management, and architecture, but have learned little about BI.

Our BI Experts have some definite ideas about what Susan should tell these future BI professionals regarding what BI is, why it’s important, and how to prepare for and start a BI career.

Learn more: Read this article by downloading the Business Intelligence Journal, Vol. 19, No. 2

Highlight of key findings from TDWI's wide variety of research

Problems and Opportunities for Data Warehouse Architectures

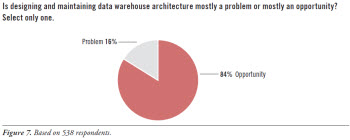

Establishing and sustaining data warehouse architecture takes time, specialized skills, team collaboration, and organizational support. With these requirements in mind, this report’s survey asked: “Is designing and maintaining data warehouse architecture mostly a problem or mostly an opportunity?” (See Figure 7.)

The vast majority consider DW architecture an opportunity (84%). Conventional wisdom today says that architecture gives a data warehouse and dependent programs for reporting and analytics greater data quality, usability, maintainability, and alignment with business goals.

A small minority consider DW architecture a problem (16%). Data warehouse architecture faces a number of technical and organizational challenges, as noted in the next section of this report, but few organizations find that the challenges outweigh the benefits of architecture.

Read the full report: Download Evolving Data Warehouse Architectures in the Age of Big Data (Q2 2014)

FlashPoint Rx prescribes a "Mistake to Avoid" for business intelligence and data warehousing professionals.

Mistake: Forgetting that People and Process Are a Big Part of Big Data Analytics

Fern Halper

Big data projects often fail not because of the technology but because of people, politics, and processes around the technology. Organizational issues and culture are a big part of big data analytics. Building out analytics can take time and requires building trust among all parties involved. Cultural issues can be pervasive in such efforts.

Clearly, strong analytics leadership is an important part of this equation. With leadership that supports analytics and uses analytics to drive decisions, it is easier to build an analytics culture. Companies that don’t have analytics leadership often try to grow efforts organically, which usually requires running more pilots to prove the value of analytics. It may also take more hand-holding.

Read the full issue: Download Ten Mistakes to Avoid in Big Data Analytics Projects (Q2 2014)