View online: tdwi.org/flashpoint

January 10, 2013

ANNOUNCEMENTS

NEW TDWI Best Practices Report:

Achieving Greater Agility with Business Intelligence

CONTENTS

How to Put Your BI Environment on a Solid Foundation

Best Practices for Turning Big Data into Big Insights

Filling the Role of the Data Scientist for Customer Analytics

Mistake: Paying Only Lip Service to Data Stewardship

See what's current in TDWI Education, Events, Webinars, and Marketplace

How to Put Your BI Environment on a Solid Foundation

Danette McGilvray

Granite Falls Consulting, Inc.

Topics:

Business Intelligence, Data Management

5:12 a.m., April 18, 1906. A great earthquake struck the city of San Francisco, California. The imposing City Hall “collapsed in a shower of bricks, stone and steel,” according to an article in the San Francisco Chronicle. “It was the largest municipal building west of Chicago. ... The City Hall was supposed to be earthquake proof, but it collapsed in seconds after the great quake struck. It had been open for less than 10 years.”

National Archives (111-SC-95102)

2:00 p.m., January 10, 2013. Your company, anywhere in the world. Another meeting collapsed in a shower of defensive words going back and forth across the conference table.

“There is no way that our sales were that low!”

“My report shows that sales are up!”

“Your report has to be wrong!”

“My numbers are right!”

Your new BI reporting tool was supposed to be error-proof, yet arguments over the numbers and distrust of the information derail meeting after meeting. The reporting tool has been in production for less than a year, and the data warehouse for 10 years. The real tragedy is the lack of action to move the business forward: more time is spent arguing about the numbers than acting on the information.

After the 1906 earthquake, all that was left of the old City Hall was a “grandiose dome 300 feet high held up by the skeletal remains of a building. ... [R]umors circulated that contractors who built City Hall had cut corners ... [b]ut a report by architects after the disaster found the construction to have been solid; it was the design that failed. City Hall, they said, had been built ‘without any of the principles of the steel frame construction having been used.’”

Is your business intelligence environment built on a well-designed and solid structure that you can depend on? Does it manage data in such a way that you trust the information in your reports? Or is it just a pretty front end barely held up by a skeletal foundation of faulty design and resulting poor-quality information?

Distrust of the information in your reports is a symptom of a poorly designed and poorly managed BI environment. One approach to correcting the problem is to look at your information from a data quality perspective using the principle of “information life cycle thinking.” I created the acronym POSMAD as a reminder of the six fundamental, high-level phases in the information life cycle: plan, obtain, store and share, maintain, apply, and dispose. Information life cycle thinking means that you trace your BI data (the facts that interest you) throughout the POSMAD life cycle and analyze the associated processes, technology, people, and organizations that impact the data.

Sketch out what you know about the life cycle. You can begin anywhere, but a good starting point for analytics is to look at the most critical reports where users disagree about the information. Trace the information back to the actual data in the warehouse, through any ETL processing, and to the source systems feeding the warehouse. Evaluate the associated processes, people, organizations, and technology.
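To make the tracing step concrete, here is a minimal sketch in Python. It assumes you can pull the same figure (for example, total sales for one period) at each layer the text names: the report, the warehouse, the ETL output, and the source system. The layer names and values below are hypothetical illustrations, and the `divergent_hops` helper is not part of POSMAD itself. The sketch walks the chain from the report back toward the source and lists every hop where the figure changes, which is where root cause analysis would start.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class LayerValue:
    layer: str    # hypothetical layer name, e.g. "report", "warehouse", "source"
    value: float  # the same metric as measured at this layer


def divergent_hops(chain: list[LayerValue], tolerance: float = 0.01) -> list[tuple[str, str]]:
    """Given layers ordered from the report back to the source, return every
    (upstream, downstream) pair of adjacent layers whose values differ by more
    than `tolerance` (absolute). An empty list means the figure is consistent
    across the whole chain."""
    hops = []
    for downstream, upstream in zip(chain, chain[1:]):
        if abs(downstream.value - upstream.value) > tolerance:
            hops.append((upstream.layer, downstream.layer))
    return hops


# Hypothetical example: the figure changes between the ETL output and the warehouse.
example_chain = [
    LayerValue("report", 1_250_000.0),
    LayerValue("warehouse", 1_250_000.0),
    LayerValue("etl_output", 1_310_000.0),
    LayerValue("source", 1_310_000.0),
]

if __name__ == "__main__":
    for upstream, downstream in divergent_hops(example_chain):
        print(f"Value changes between {upstream} and {downstream}")
```

Matching values at every layer do not prove the number is right (the source itself may be wrong), so a check like this only narrows where to look; the processes, people, organizations, and technology around each hop still need to be evaluated.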

The beauty of this approach is that it brings together what you know about your information in a way you have not done before. You will see things in a new light and discover connections, gaps, and redundancies that were previously hidden. From there, perform root cause analysis. Expect to find several root causes, such as technology bugs, poor processes, misaligned organizational structures, undefined roles and responsibilities, and a lack of training. Some will be easy to fix; others will require more effort. Better still, build “life cycle thinking” into the initial development of your data warehouse and the implementation of your BI tool.

You might say, “Danette, this sounds pretty basic.” It is, but we need the basics to move forward with confidence. Foundational principles are often overlooked as we hurry to install the latest and greatest tool. We must have the tools, of course; I do appreciate the technology. I don’t want to go back to carbon paper and slide rules. (Does anyone remember those?) We must also make a conscious effort to manage the quality of our information as deliberately as we manage our people, processes, and technology. Notice I did not say to reduce efforts in the other areas. Give equal focus to data and information.

2:00 p.m., March 12, 2013. Your company, anywhere in the world. Another meeting generates excitement as new ideas flow back and forth across the conference table.

“Given this latest information, we can adjust our strategy immediately!”

“Here are the operational changes we can make to support that!”

High-quality information and trust in the reports will create an environment where the focus is on informed decisions and forward action for the business.

A solid way to start includes information life cycle thinking using POSMAD; understanding associated data, processes, technology, people, and organizations; and addressing root causes. So get going! Otherwise, your business intelligence is just a fancy dome with no foundation and a disaster waiting to happen.

Danette McGilvray is president and principal of Granite Falls Consulting, Inc., a firm that helps organizations increase their success and enhance the value of their data assets. She is the author of Executing Data Quality Projects: Ten Steps to Quality Data and Trusted Information (Morgan Kaufmann, 2008).

References

McGilvray, Danette [2008]. Executing Data Quality Projects: Ten Steps to Quality Data and Trusted Information, Morgan Kaufmann, pp. 23–30.

Nolte, Carl [2012]. “Workers discover ruins of City Hall,” San Francisco Chronicle, September 25, A1.

Best Practices for Turning Big Data into Big Insights

Jorge A. Lopez

Big data is surfacing from a variety of sources: transaction growth, increasing volumes of machine-generated information, social media input, and organic business growth. What does an enterprise need to do to maximize the benefits of this big data?

In this article, we examine several best practices that can help big data make a difference. We discuss the role that extract, transform, and load (ETL) plays in transforming big data into useful data. We also discuss how it can help address the scalability and ease-of-use challenges of Hadoop environments.

Read the full article and more: Download Business Intelligence Journal, Vol. 17, No. 4

Highlights of key findings from TDWI's wide variety of research

Filling the Role of the Data Scientist for Customer Analytics

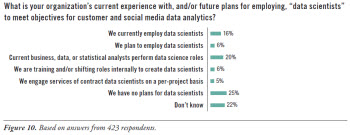

Many organizations are trying to determine whether they need to hire “data scientists” to perform advanced customer analytics. Industry analysts and experts offer differing definitions of this person and role, but most organizations know one thing: there are not enough of them. A 2011 McKinsey Global Institute study warned of the shortage of data scientists, noting that “the U.S. alone could face a shortage of 140,000 to 190,000 people with deep analytical skills as well as 1.5 million managers and analysts with the know-how to use the analysis of big data to make effective decisions.”

Our research found that 16% of organizations surveyed currently employ data scientists, and 6% plan to do so (see Figure 10). The results confirm what TDWI Research heard anecdotally through interviews for this report: organizations are taking a variety of paths to expand their data science capabilities. About one-fifth (20%) are giving data scientist roles to current business, data, or statistical analysts, and another 6% are training and shifting personnel to become data scientists. One-quarter of those surveyed have no plans for data scientists. Cross-tabulations of our research data indicate that organizations with no plans for data scientists are less likely to be using Hadoop and MapReduce; they also face a lack of budget or resources as the chief barrier to adopting customer analytics.

Read the full report: Download Customer Analytics in the Age of Social Media

FlashPoint Rx prescribes a "Mistake to Avoid" for business intelligence and data warehousing professionals.

Mistake: Paying Only Lip Service to Data Stewardship

Dave Wells

We often designate people as data stewards without recognizing that they already have full-time jobs, that they may not know what is expected of a data steward, and that effective data stewardship requires particular knowledge and skills. For data stewards to succeed, we must acknowledge the importance of the role by allocating time, selecting the right people, defining expectations, and equipping stewards with the requisite knowledge and skills.

Data stewardship isn’t always a full-time job, but the work of research, facilitation, communication, and consensus building is time-consuming. When an individual is designated as a data steward, be clear about the percentage of time that is allocated for stewardship work. Be sure the time allocation is realistic in two respects: (1) that it is sufficient to meet the expectations for the steward, and (2) that other responsibilities are shifted or removed to make time available.

Some people are better suited to stewardship than others. Although data knowledge is important, human skills may be even more valuable. Stewardship can’t succeed without engaging and involving people. Enterprise data has many stakeholders, and their priorities, preferences, and needs often conflict. Successful stewards rely first on people skills (communication, facilitation, and consensus building) to achieve their data management goals.

Be clear about expectations and responsibilities. Is the data steward responsible for data definition, metadata management, quality, security and access, or regulatory compliance? Which responsibilities are in scope? How are they reflected as employee performance criteria and other incentives?

Build knowledge and skill through training, conferences and networking, and internal data steward communities of practice. Cover the entire range of skills, from people to process and technology, to develop well-rounded and effective data stewards.

Read the full issue: Download Ten Mistakes to Avoid In Data Resource Management (Q4 2012)