View online: tdwi.org/flashpoint

August 4, 2011

ANNOUNCEMENTS

New Checklist Report: Using Data Quality to Start and Sustain Data Governance

CONTENTS

Requirements Interviews: Beyond the Questions

Big Data and the Data Supply Chain

Benefits of Operational Data Integration

Mistake: Thinking You Can Just Turn off an Old Platform

See what's current in TDWI Education, Research, Webinars, and Marketplace

Requirements Interviews: Beyond the Questions

Chris Adamson

Oakton Software LLC

Topic: Business Requirements

The interview is a popular mechanism for identifying business intelligence requirements. Unfortunately, these precious opportunities are often squandered. A successful interview requires more than a good list of questions. As you plan for and conduct business interviews, a few simple guidelines will maximize the value of these sessions.

1. Request Time the Right Way

The people you are going to interview are busy professionals, and you will be using their valuable time. You may also be discussing some of the more frustrating aspects of their jobs--areas in which there are severe information shortcomings. It is therefore important that the initial request for their time come from your project’s sponsor, not from you.

Ghostwrite an introduction from your sponsor. Most people do not read long messages, so keep it short. In two or three sentences, describe the project and its importance to the business. This is your “elevator pitch,” and every project should have one. The next paragraph should be a single sentence, stating that the recipient has been selected to be interviewed about business requirements, and introducing you as the interviewer. If any materials or preparation are required, a third section should introduce a short bulleted list.

2. Limit Those Present During the Interview

Because interviews are so important, there is often a strong desire to have as many team members present as possible. Unfortunately, this can have undesired effects on the tone and value of the interview. When facing a large group, most people adopt a more formal style of interaction. You will hear more of the “party line” and less about the important details. Limit the number of team members present to three.

For the team members who will attend, establish ground rules. One person should be designated as the lead interviewer. This person will do most of the talking. Others should be instructed to hold their questions or comments, no matter how difficult, until the lead interviewer invites them to speak. Without such guidelines, interviewers wind up at cross purposes, never seeing a particular topic to completion.

3. Check Your Ego at the Door

Requirements interviews offer rare access to fascinating people, many of whom may be senior executives. Naturally, you will want to make a good impression. However, the interview is not a good time to try to impress these people. If you attempt to exhibit business knowledge, your subject may make some assumptions about what you know, and filter their responses accordingly.

Tell the subject to assume you know very little, and act accordingly. Your opportunity to impress will come later, when you deliver effective business analytics. This is one of the most difficult lessons for novice analysts to learn.

4. Set Expectations

When you sit down to an interview, don’t just dive in. Explain the project in no more than one minute--again, this is your elevator pitch. Describe how the interview will unfold, invite the subject to be candid, and encourage them to point out when you say something that is incorrect (more on this in a moment).

Most important, you must place the interview in the context of an overall road map. Requirements projects do not end with the delivery of a new system. Make sure your subject understands how the requirements project will feed whatever comes next, such as a prioritization phase or an implementation project.

5. Listen and Repeat

During the interview, you need to do more than ask questions and write down answers. If you’ve prepared questions in advance, use them only to get the ball rolling. Allow the conversation to flow. You can always refer to your questions toward the end of the session to be sure you haven’t missed any.

Practice active listening. Pay attention to the subject, and repeat things back to them in your own words. You will find that this sets the subject at ease, and often leads to useful elaboration. Don’t be afraid to get things wrong. After all, you’ve already checked your ego at the door, haven’t you? Each time the subject corrects you, a minor victory has been scored; you have uncovered something important.

6. Leave the Door Open

You may leave each interview thinking you understand what has transpired, but inevitably, questions will arise. Some will come up the next day as you review and summarize your notes. Other questions will surface much later--after you have talked with others or even during a subsequent implementation project.

At the end of each interview, leave the door open for follow-ups. Sometimes a simple phone call or e-mail may be needed; in other cases, a second meeting may be necessary. Be ready to take these steps, and make sure the subject is open to them.

Chris Adamson provides strategy and design services through his company, Oakton Software LLC. For more on requirements interviews, see his latest book, Star Schema: The Complete Reference (McGraw-Hill, 2010).

Big Data and the Data Supply Chain

Jill Dyche and Evan Levy

Baseline Consulting

Topic: Big Data

The buzz around big data is getting louder. No doubt you’ve seen the statistics. In 2008, an IDC study reported that 1,200 exabytes of data would be generated by businesses that year. Vendor-heralded reports announcing that data doubles every year portend a veritable infoglut. The dual-threat promise of unstructured data expands the vision of data proliferation even further. Exabytes are ceding to zettabytes and, if you believe the hype, yottabytes aren’t far behind.

In the BI community, it’s tempting to believe that because data volumes are exploding, data usage increases apace. The more information business people consume, the more they want--but having more data doesn’t necessarily mean users are building new reports, never mind advancing their analytic capabilities.

Indeed, the vast majority of new data is simply “forklifted” from operational systems into the data warehouse or mart. Everyone wants new data ASAP--data model be damned! Wanting to be responsive, IT heaves the data onto the platform. Thus, many a corporate data warehouse has become nothing more than a capacious cul-de-sac where the data goes to die.

Watching our clients struggle with their BI data over the years, we came up with the concept of the “data supply chain.” The idea was born out of our realization that data isn’t static--that it has its own innate lifecycle.

Although lines of business often run autonomously, they are interdependent when it comes to information. For instance, the sales department relies on information from finance; distribution relies on information from order management; marketing needs information from R&D. You get the idea. Data is shared across an organization--or it should be.

We found that most of our clients were very good at standardizing their business processes (for instance, their merchandise return or quarterly close processes), but not very good at standardizing their data. Most lines of business end up consuming data created in other lines of business. The only way to integrate and connect these disparate business processes is through the data they hand off to one another. The output of one department’s business process is very likely the input to another department’s business process.

The reality is that data changes between these business processes as it flows through them. Consider Figure 1, below:

Disparate data flows across systems and business processes in different ways. The same data might look different depending on the business processes. Viewing data in terms of a data supply chain means putting data standards in place so that data can be shared in the context in which it’s consumed.

In most companies, though, no one owns this problem. Thus, each individual business process has to work overtime to prepare data to be used. In reality, the business processes that generate the data need to understand how other business processes consume that data. In a data supply chain, data is standardized at each stage to prepare it for the next process.

Many of our clients are living the daily drama of ERP implementations. It’s not uncommon for 30 percent or more of ERP development time to be devoted to identifying, analyzing, and converting data from other operational systems. If there are no data standards in place, developers have to find and figure out the incoming data and convert it into a usable format. XML isn’t the answer, because each application calls its data something different. Revenue? Booked revenue? Sales? In order to unveil data meanings, each operational system requires an archeological expedition!
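The naming problem above can be made concrete with a minimal sketch of a data standard expressed as a lookup table. Everything in it is hypothetical: the system names (`erp`, `crm`, `pos`), the canonical name `booked_revenue`, and the assumption that “Revenue,” “Booked revenue,” and “Sales” really do mean the same thing in these three systems. In practice, confirming that equivalence is exactly the archeology described here.

```python
# Hypothetical data standard: each source system's field name mapped to one
# agreed canonical name. System and field names are illustrative assumptions.
CANONICAL_FIELDS = {
    "erp": {"Revenue": "booked_revenue"},
    "crm": {"Booked revenue": "booked_revenue"},
    "pos": {"Sales": "booked_revenue"},
}


def standardize(system, record):
    """Rename a record's fields to their canonical names.

    Raises KeyError for any field that has no agreed standard, so that
    undocumented data is surfaced instead of silently passed downstream.
    """
    mapping = CANONICAL_FIELDS[system]
    standardized = {}
    for field, value in record.items():
        if field not in mapping:
            raise KeyError(f"no data standard for {system!r} field {field!r}")
        standardized[mapping[field]] = value
    return standardized


print(standardize("pos", {"Sales": 1200}))
```

The point of the sketch is where the knowledge lives: in one shared, governed table rather than in each developer's head or each conversion script.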

It’s the same, of course, in the data warehouse world, where ETL is the most time-consuming phase in BI development and the data in the data warehouse is often only as valid as the ETL developer’s understanding of its operational source. Adding more ETL programmers to find and interpret source system data simply exacerbates the problem. Not to put too fine a point on it, but a large team of ETL developers doesn’t guarantee robust data documentation or better metadata.

You probably think that this article is an appeal for a solid master data management (MDM) strategy, and it is. More important, though, it’s a hearty reminder that data is an enterprise asset, with emphasis on the word “enterprise.” As such, data needs to be governed using a solid set of policies that guarantee its definition, standardization, and reuse. This includes the fundamentals of data management. It’s not the new MDM frontier; it’s the precursor to effective MDM--and effective data stewardship.

With a nod to outdated folklore about data stewardship, data may actually require different stewardship at different points in the supply chain. That’s right: the definitions and rules around customer data in the sales department will likely be very different from the definitions and rules in marketing. Depending on the breadth and reach of the data, this could be overwhelming for a single customer data steward. You may need a whole team of customer data stewards. More likely, you may choose to have a customer data steward dedicated to sales and another one dedicated to marketing, and so on.

The fact that most of the growing data volumes will be, as The Economist reported in 2010, “created by machines and used by other machines” shouldn’t deter us from data governance. On the contrary, the sooner we start, the better, because it’s only going to get worse.

Jill Dyche and Evan Levy are cofounders of Baseline Consulting and co-authors of Customer Data Integration: Reaching a Single Version of the Truth (Wiley). Jill and Evan will be teaching classes on MDM, business-IT alignment, and data governance at the TDWI World Conference in San Diego, August 7–12.

Highlights of key findings from TDWI's wide variety of research

Benefits of Operational Data Integration

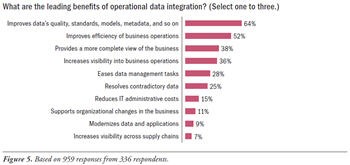

When asked whether OpDI projects yield significant benefits, a whopping 81% of survey respondents answered “yes.” Given that benefits clearly exist in users’ minds, it now behooves us to determine what those benefits are and which seem to be the most prominent. And that’s why the survey asked: “What are the leading benefits of operational data integration?” (See Figure 5.) OpDI’s benefits fall into three categories.

Improvements to data are the leading benefits of OpDI. More than any other answer to the question about OpDI benefits, 64% of survey respondents chose “improves data’s quality, standards, models, metadata, and so on.” Related benefits concern how OpDI resolves contradictory data (25%, a common goal of data sync) and modernizes data and applications (9%, as seen in most system migrations).

OpDI yields business benefits, too. High percentages of survey respondents feel that OpDI improves the efficiency of business operations (52%), provides a more complete view of the business (38%), and increases visibility into business operations (36%). OpDI is less beneficial in other areas of the business where it supports organizational changes in the business (11%) and increases visibility across supply chains (7%).

The management of data is also a beneficiary of OpDI. In this regard, OpDI eases data management tasks (28%) and reduces IT administrative costs (15%).

Source: Operational Data Integration: A New Frontier for Data Management (TDWI Best Practices Report, Q2 2009).

FlashPoint Rx prescribes a "Mistake to Avoid" for business intelligence and data warehousing professionals.

Mistake: Thinking You Can Just Turn off an Old Platform

By Philip Russom

Believe it or not, an old application or database will most likely remain in use after its new replacement comes online. This happens when an early phase of the rollout delivers the new system to a limited end-user population, and a different user population continues to use the old one until they are migrated. When the hand-off period is long, running both systems becomes a multiyear task, so plan accordingly.

Don’t be in a hurry to unplug the legacy platform. It might be needed for rollback and for the last groups of end users to be rolled over. Some platforms are subject to lease, amortization, or depreciation schedules, so check these before setting a date for platform retirement.

Expect that redundant instances of platforms will be required. For example, to do accurate but noninvasive testing, you may need to recreate source or target environments within your test environment. Old and new platforms commonly run concurrently during all phases of the project. With two redundant systems in production simultaneously, synchronizing data between them is a required, two-way data operation.
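As a rough illustration of what a two-way data operation involves, here is a minimal sketch that reconciles two in-memory stores. It rests on assumptions that are not in the source: each record carries an update timestamp, the newest version wins, and ties favor the old platform. Real synchronization also has to handle deletes, schema differences, and conflicting edits, none of which is covered here.

```python
def sync_two_way(old, new):
    """Reconcile two stores of the form {key: (updated_at, value)}.

    After the call, both stores hold the most recently updated version of
    every key found in either one. "Newest wins" is an assumed policy.
    """
    # The union builds a separate set, so both dicts can be updated in the loop.
    for key in old.keys() | new.keys():
        from_old = old.get(key)
        from_new = new.get(key)
        if from_old is None or (from_new is not None and from_new[0] > from_old[0]):
            winner = from_new
        else:
            winner = from_old
        old[key] = winner
        new[key] = winner


# Hypothetical customer records on the legacy and replacement platforms.
legacy = {"cust-1": (1, "Acme"), "cust-2": (5, "Globex")}
replacement = {"cust-1": (2, "Acme Corp"), "cust-3": (1, "Initech")}
sync_two_way(legacy, replacement)
print(legacy == replacement)
```

Changes flow in both directions: the newer `cust-1` record moves back to the legacy platform, while `cust-2` moves forward to the replacement.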

Keep the project’s momentum rolling after initial deployment. After all, you still have to transfer the new platform to its new owners and administrators, synchronize data between the old and new platforms, and monitor the new platform to measure its system performance and user adoption.

Source: Ten Mistakes to Avoid When Migrating Databases (Q4 2008).