July 1, 2010

ANNOUNCEMENTS

Submissions for the next Business Intelligence Journal are due August 16.

New Checklist Reports: Top Ten Best Practices for Data Integration and Product Data Quality.

CONTENTS

Moving Master Data Management into the Cloud

Is Your BI Program Playing at Full Strength?

Version and Upgrade Issues with BW and NetWeaver BI

See what's current in TDWI Education, Research, Webinars, and Marketplace

Moving Master Data Management into the Cloud

Dan Power

Hub Designs

Topics:

Master Data Management, Cloud Computing

Whether you call it software-as-a-service or cloud computing, deploying enterprise applications via the Internet continues to gain momentum. In fact, pioneers such as Amazon, Google, Rackspace, Salesforce.com, and NetSuite have experienced rapid growth in demand, despite global economic uncertainty.

Although we’re still in the early days of cloud computing, its benefits are compelling. Dave Powers, Eli Lilly's associate information consultant for discovery IT, recently said, “We were … able to launch a 64-machine cluster computer working on bioinformatics sequence information, complete the work, and shut it down in 20 minutes. It cost $6.40. To do that internally--to go from nothing to getting a 64-machine cluster installed and qualified--is a 12-week process.”

Master data management (MDM) is also moving to the cloud. MDM is a set of disciplines, processes, and technologies for ensuring the accuracy, completeness, timeliness, and consistency of multiple domains of enterprise data across applications, systems, and databases, and across multiple business processes, functional areas, organizations, geographies, and channels. Note the key words: “multiple,” “across,” and “enterprise.” MDM spans multiple domains of master data and reaches across the many silos that exist in today’s enterprises, and cloud computing helps organizations integrate master data across multiple data centers in different geographies or from different acquisitions.

When I talk to people about moving MDM hubs from corporate data centers to cloud computing environments, security and compliance are the most frequently raised issues.

Ironically, corporate data centers may actually be less secure than cloud computing environments. Over the last few years, there have been thousands of well-publicized breaches at “household name” organizations. The Privacy Rights Clearinghouse has compiled an extensive list of known data breaches, along with the number of records exposed in each incident. There have also been attacks on, and breaches by, cloud computing providers such as Google, but there are far fewer of these incidents. That being said, there’s both a perception issue and a real need for improved security by cloud providers, particularly as security threats continue to grow and evolve.

When it comes to compliance, moving enterprise applications into the cloud doesn’t exempt a company from the laws and regulations that would apply if it provided the same service inside its own firewall. Depending on the industry involved, evaluating potential cloud providers against that industry’s compliance requirements can be a nontrivial effort.

MDM vendors--Oracle, IBM, SAP, Informatica/Siperian, Initiate (an IBM company), D&B Purisma, and smaller providers--are evolving to the cloud. Oracle’s Fusion MDM hub will offer a cloud deployment capability when it ships late this year. IBM and Initiate are likely working on future versions of their products that will operate smoothly in the cloud. Informatica, having acquired Siperian, has also made major investments in cloud computing, and D&B Purisma offers a software-as-a-service version of its customer MDM hub product.

Security, legal, and technical issues still need to be resolved by the cloud computing providers, software vendors, systems integrators, and their enterprise customers. This will involve firewalls, encryption, backup solutions, disaster recovery, service-level agreements, and so on, but technology and legal teams are good at solving these kinds of problems.

Meanwhile, the benefits are too large to ignore. Economically, it makes more sense to share complex infrastructure and pay only for what you actually use. From a time-to-value perspective, cloud computing allows you to skip hardware procurement and capital expenditure and instead just order from a “menu.”

Maintenance and updates are a constant headache for most IT shops. Thankfully, most cloud providers continuously update their software, adding new features as they become available. As for scalability, cloud systems are built to handle sharp increases in workload. Furthermore, cloud solutions are designed to work with a simple Web browser, so users can access them from their desktops, laptops, or smartphones.

The MDM market will probably trail the rest of the enterprise applications market a bit, but the appetite for building large, multimillion-dollar applications inside the firewall is cooling. CIOs look at the economics of buying, maintaining, and upgrading the applications and their accompanying servers, and end up saying, “On the whole, I think I’d rather rent!”

Dan Power is the founder and president of Hub Designs, a management and technology consulting firm specializing in developing and delivering high-value master data management (MDM) and data governance strategies.

Changed Data: Without a Trace

Patty Haines

Chimney Rock Information Solutions

Topics:

Data Warehousing, Change Data Capture

Imagine this scenario: A data warehouse (DW) team implements a successful data warehouse that works well and provides instant, accurate results to the business community’s queries. Business users are excited about the easy access to large amounts of clean data. After a short time, however, the unthinkable occurs: data in the DW does not match data in the operational source systems. What had become an important company asset now has its validity questioned.

The cause: changes were applied to the source data structure, but the changes were not tracked. They were made through a manual process outside the standard operational system--that is, outside the process the data warehouse depends on so that its change data capture (CDC) process can identify what changed and load those changes into the data warehouse tables correctly. Because these changes to source records were never identified, they were never moved into the data warehouse, so the data in the warehouse no longer accurately reflected the data in the source operational system.

Numerous types of manual updates may be used by the source system support staff, such as overwriting data values, adding new records, or deleting records in the source data structures. If the CDC process is a file comparison, these manual changes will be identified and processed correctly. However, if CDC is using a last update date within the source data structure to determine what changed in the source data, these manual changes may be missed by this process.
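To make the difference between the two CDC approaches concrete, here is a minimal Python sketch. It assumes a hypothetical source layout with a business key, one data column, and a last update date; the names SourceRow, cdc_by_comparison, and cdc_by_last_update are illustrative only and do not come from any product. A manual fix that overwrites a value, and a manual insert that carries a stale date, are both caught by comparing snapshots but missed by the last-update-date filter.

```python
from dataclasses import dataclass
from datetime import datetime


# Hypothetical source record: business key, one data column, and the
# last update date that timestamp-based CDC relies on.
@dataclass(frozen=True)
class SourceRow:
    key: int
    value: str
    last_update: datetime


def cdc_by_comparison(previous, current):
    """File-comparison CDC: diff two full snapshots keyed by business key."""
    prev = {r.key: r for r in previous}
    curr = {r.key: r for r in current}
    inserts = sorted(k for k in curr if k not in prev)
    deletes = sorted(k for k in prev if k not in curr)
    updates = sorted(k for k in curr if k in prev and curr[k].value != prev[k].value)
    return inserts, updates, deletes


def cdc_by_last_update(current, last_run):
    """Timestamp CDC: pick up only rows whose last update date is after the previous run."""
    return sorted(r.key for r in current if r.last_update > last_run)


last_run = datetime(2010, 6, 30)
previous = [
    SourceRow(1, "Denver", datetime(2010, 6, 1)),
    SourceRow(2, "Boulder", datetime(2010, 6, 1)),
]
# A manual fix overwrote row 1's value without touching last_update,
# and a manual insert added row 3 with a stale last update date.
current = [
    SourceRow(1, "Golden", datetime(2010, 6, 1)),
    SourceRow(2, "Boulder", datetime(2010, 6, 1)),
    SourceRow(3, "Aurora", datetime(2010, 6, 1)),
]
print(cdc_by_comparison(previous, current))   # ([3], [1], [])
print(cdc_by_last_update(current, last_run))  # []
```

Comparison typically costs more because it reads full snapshots on every run, which is why many teams rely on the last update date instead; the sketch simply shows what that shortcut gives up when changes bypass the standard system.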

Physical deletes. If a row is unexpectedly deleted from the source structure and the deletion is not recognized and processed by CDC, the DW will still hold a row of data that is considered active, while the source system will have no record that this data ever existed. The two systems are now out of sync, and the business community will receive conflicting results. When the data warehouse's CDC process uses the last update date to determine what changed in the source structure, a deleted row leaves no record to extract data from, so the original record will remain active for the life of the DW. To match the source system, this record should be tagged in the DW as “stopped.”
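One way to catch physical deletes, sketched below in Python under stated assumptions, is to compare the DW's active keys against a complete list of keys extracted from the source and tag any missing ones as “stopped.” The WarehouseRow layout, its status and end_date columns, and the stop_deleted_rows helper are hypothetical; the sketch assumes the source can supply a full key extract on each run, since a last-update-date filter alone cannot see a row that no longer exists.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


# Hypothetical DW row: business key, one data column, and status tracking.
@dataclass
class WarehouseRow:
    key: int
    value: str
    status: str = "active"           # "active" or "stopped"
    end_date: Optional[date] = None  # set when the row is stopped


def stop_deleted_rows(warehouse, source_keys, load_date):
    """Tag active DW rows as stopped when their key no longer exists in the source.

    Assumes source_keys is a complete set of current business keys
    (a key-only extract from the source system).
    """
    stopped = []
    for row in warehouse:
        if row.status == "active" and row.key not in source_keys:
            row.status = "stopped"
            row.end_date = load_date
            stopped.append(row.key)
    return stopped


warehouse = [WarehouseRow(1, "Golden"), WarehouseRow(2, "Boulder")]
# Row 2 was physically deleted in the source by a manual process.
source_keys = {1}
print(stop_deleted_rows(warehouse, source_keys, date(2010, 7, 1)))  # [2]
print([(r.key, r.status) for r in warehouse])  # [(1, 'active'), (2, 'stopped')]
```

Stopping the row, rather than deleting it from the DW, keeps its history available while making clear it is no longer active in the source.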

Additional rows. A new row of data may be inserted into the source structure. Without the appropriate last update date required by CDC, this insert may not be identified and moved into the warehouse, so the DW tables will not reflect what has occurred in the source data.

Changed data values. The value within a data column may be updated in the source structure. Again, without the appropriate last update date required by CDC to identify that a data value change occurred, the DW tables will not reflect what has occurred in the source data.

Any type of change to data from the source systems that has not been identified and handled by the CDC processes will negatively impact the quality of data in the DW tables. The best way to address these scenarios is for the DW team to work with the source system support staff up front, while the data warehouse and associated processes are being designed. This helps ensure that all scenarios are identified, processed, and tested during development.

Once they understand the negative impact these manual processes have on identifying and loading changed data into the data warehouse, the support staff may be able to change or reduce them. They may be able to implement a process that updates the last update date and includes a standard “manual change” user ID tag in the source structure. The CDC process would then have a trigger it can use to identify data changed by a manual process. Instead of physically deleting a record, the support staff may be able to insert a row of data as a soft delete, with an indication on the record that it is no longer active in the source system.
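The remediations above can be sketched in Python as a timestamp-based extract that also reads two extra source columns: an updated_by user ID, where manual fixes carry a standard tag (here the hypothetical value MANUAL_CHG), and an active indicator used for soft deletes. All column names, the tag value, and the "upsert"/"stop" action labels are assumptions for illustration. Because the manual process now sets the last update date, the ordinary CDC filter sees these rows, and the soft-delete indicator tells the load to stop the DW row instead of updating it.

```python
from dataclasses import dataclass
from datetime import datetime

MANUAL_CHANGE_USER = "MANUAL_CHG"  # hypothetical standard user ID tag for manual fixes


# Hypothetical source row after the remediation: manual processes now set
# last_update and updated_by, and mark deletions with active=False.
@dataclass(frozen=True)
class SourceRow:
    key: int
    value: str
    last_update: datetime
    updated_by: str
    active: bool = True  # soft-delete indicator instead of a physical delete


def extract_changes(rows, last_run):
    """Timestamp CDC that also classifies each changed row for the DW load.

    Returns (key, action, is_manual_change) tuples.
    """
    changes = []
    for r in rows:
        if r.last_update <= last_run:
            continue  # unchanged since the previous run
        action = "stop" if not r.active else "upsert"  # soft delete vs. new/changed row
        changes.append((r.key, action, r.updated_by == MANUAL_CHANGE_USER))
    return changes


last_run = datetime(2010, 6, 30)
rows = [
    SourceRow(1, "Golden", datetime(2010, 7, 1, 9), MANUAL_CHANGE_USER),
    SourceRow(2, "Boulder", datetime(2010, 7, 1, 10), MANUAL_CHANGE_USER, active=False),
    SourceRow(3, "Aurora", datetime(2010, 6, 1), "APP"),
]
print(extract_changes(rows, last_run))  # [(1, 'upsert', True), (2, 'stop', True)]
```

Keeping the manual-change flag in the output lets the DW team report on how often manual processes touch the source, which can feed the conversation with the support staff.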

During the development project, it is important to understand these special processes that occur outside the standard operational system. These need to be addressed early in the development cycle by the support staff and DW development team working together on a complete solution--one that helps keep the operational source system working correctly and allows the data warehouse to accurately reflect this data.

Patty Haines is president of Chimney Rock Information Solutions, a company specializing in data warehousing and master data management. She can be reached at 303.697.7740.

Is Your BI Program Playing at Full Strength?

Mark Peco

InQvis

Topics:

Business Intelligence

In organized sports, coaches assign players to positions with clearly defined roles and responsibilities. The assignments are based on the commonly understood rules of a sport. Typically, a coach recognizes the full roster of positions to be filled. However, a new coach who isn’t familiar with all of the rules might make an error and leave some positions open. For example, a U.S. football coach who is coaching a Canadian football game for the first time might not be aware that there are 12 positions to be filled rather than the 11 familiar from the American game. This might result in his team being shorthanded--and playing at a disadvantage.

Consider business intelligence a challenging game to be won. How many components must be included for success?

BI enables an organization to think, plan, reason, comprehend, and intelligently decide how to define and achieve its creative and productive goals. This definition is based on the premise that business value is not usually created directly from information; it is created by activities and executing work. Products are designed and sold. Customers are acquired and served. Revenue is collected and managed. The combination of information with work activities is used to create and maximize value. If the BI program manager and the organization’s leadership team want to generate value, then the scope of BI may need to be readjusted. Value will be generated if the right work is done well. This simple phrase implies that the right work is related to achieving the firm’s strategic and tactical objectives, and that doing it well implies operational excellence and process efficiency. Using this phrase helps an organization understand how value is generated.

To play at full strength, consider implementing components from each of the following categories when defining the scope of your BI program.

Components for Value Generation

Value-generating components include defined business objectives, critical decision-making processes, core work processes, and human capital development processes. Each of these components interacts with and influences the others. Business value will be generated when motivated and skilled people consistently and reliably make decisions about what core work needs to get done, understand how to get the work done, and execute activities to get the work done. This perspective shows how these four components interact with each other in an aligned manner to generate value.

Components for Monitoring and Learning

The monitoring and learning components of a BI program include information, measurement, analytics, and technology categories. The information components provide answers to our business and operational questions. Measurement components quantify the position of business capability, activity, or performance level. The analytics components help generate insights, which describe something previously unknown by the organization. Technology components provide the automation capabilities for the other monitoring and learning components to operate. The monitoring and learning components are responsible for informing the business value–generating components whether they are doing the right work well or whether adjustments are required.

Components for Leadership and Control

The leadership and control components include stakeholders and governance. The stakeholder components define which people have a stake in the success of a BI program. Stakeholders include two categories of people in the organization: investors and customers. Investors commit funds, people, and time to a BI program. They expect a tangible return on their investment. Customers are actively involved in consuming information for making decisions, executing their work, planning their strategy, and other operational activities. They have needs and demands that are related to the quality of the information being delivered. The governance components of the BI program define the accountability structures and relationships that allow the value-generating components and the monitoring and learning components to work together cohesively.

Integrating the Components

Don’t be caught shorthanded. Business intelligence programs are complex and challenging enough without the disadvantages of scope and accountability definitions that are too narrow. Recognize that the real game being played by BI should not be information delivery; the game worth playing is business value generation. Make sure you have the complete set of components under your influence and mandate to ensure your organization can win at playing the right game. You need components from all three areas to play at full strength and win the game of intelligent value generation.

Mark Peco, CBIP, is a consultant and educator with degrees in engineering from the University of Waterloo. He is a partner with InQvis, a consulting firm based in Toronto, and is also a faculty member of TDWI.

Highlights of key findings from TDWI's wide variety of research

Version and Upgrade Issues with BW and NetWeaver BI

There are good reasons why SAP customers should upgrade to NetWeaver BI version 7, the most recent release, which is sometimes called 2004s:

- NetWeaver BI 7 is more open to non-SAP systems than prior releases of BW. This is very important to SAP users who want to make the BW component of NetWeaver BI their primary data warehouse, which inevitably means integrating data from non-SAP sources into BW.

- BEx is better in version 7. SAP users interviewed by TDWI pointed to the greater usability and interactivity of the BEx and BEx Web user interface as one of the reasons they upgraded.

- NetWeaver provides an infrastructure for both application and data integration. This provides more options for integration, as well as master data management, both inside and outside the SAP environment.

- BI Accelerator requires the latest release. The SAP NetWeaver BI Accelerator requires NetWeaver 7.0.

- Its service-oriented architecture (SOA) enables new application options. One of the users TDWI interviewed explained that he upgraded to 7 so he could use services to build composite applications that provide alternative user interfaces for SAP applications with low usability, as well as improve interoperability between application modules. Another user said he’d build composite dashboards for BI with NetWeaver 7.

The bad news is that a number of SAP users whom TDWI interviewed complained about the difficulties of upgrading from version 3.5 or earlier of BW to version 7.0. Admittedly, the difficulties are largely due to users’ own choices, such as extensive customizations or falling multiple releases behind. The situation is exacerbated because the users in question lack internal resources for upgrades. For users in this situation, upgrades are unlikely anytime soon, which prevents them from reaping the benefits of the new functionality in version 7 of NetWeaver BI.

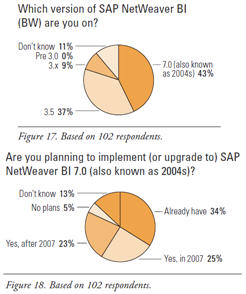

The good news is that many NetWeaver BI users are already on version 7 (43% in Figure 17 and 34% in Figure 18). Only 37% are one release behind on version 3.5, with 9% on other 3.x releases. No one answering the survey is on a pre-3.0 release. Adoption of version 7 should pick up soon, since many survey respondents plan to upgrade this year (25% in Figure 18) or next (23%).

Source: Business Intelligence Solutions for SAP (TDWI Best Practices Report, Q4 2007).

FlashPoint Rx prescribes a "Mistake to Avoid" for business intelligence and data warehousing professionals.

Mistake: Narrow Focus

By Arkady Maydanchik

Systematic data quality management efforts originated in the ’90s from parsing, matching, standardizing, and deduplicating customer data. Over the years, great strides have been made in this area. Modern tools and solutions allow businesses to achieve high rates of success. This progress makes many organizations feel good about their efforts to manage customer data quality. A good number of organizations have implemented solutions by now, and it is fair to say that the level of quality in corporate customer data is at its highest ever.

Unfortunately, the same cannot be said about the rest of the data universe. Data quality has continually deteriorated in the areas of human resources, finance, product orders and sales, loans and accounts, patients and students, and myriad other categories. Yet these types of data are far more plentiful and certainly no less important than customer names and addresses.

The main reason we fail to adequately manage quality in these categories is that this data’s structure is far more complex and does not allow for a “one size fits all” solution. More effort and expertise are required, and data quality tools offer less help. Until organizations require data quality management programs to focus equally on all of their data, we cannot expect significant progress.

Source: Ten Mistakes to Avoid In Data Quality Management (Q4 2007).