By Philip Russom, TDWI Research Director

[NOTE -- My new TDWI report about High-Performance Data Warehousing (HiPer DW) is finished and will be published in October. The report’s Webinar will broadcast on October 9, 2012. In the meantime, I’ll leak a few of the report’s findings in this blog series. Search Twitter for #HiPerDW to find other leaks. Enjoy!]

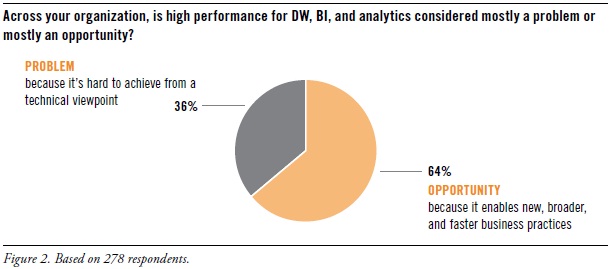

In recent years, TDWI has seen many user organizations adopt new vendor platforms and user best practices, which helped overcome some of the performance issues that dogged them for years, especially data volume scalability and real-time data movement for operational BI. With that progress in mind, a TDWI survey asked: “Across your organization, is high performance for DW, BI, and analytics considered mostly a problem or mostly an opportunity?” (See Figure 2, shown above.)

Nearly two thirds (64%) consider high performance an opportunity. This positive assessment isn’t surprising, given the success of real-time practices like operational BI. Similarly, many user organizations have turned the corner on big data, no longer struggling to merely manage it, but instead leveraging its valuable information through exploratory or predictive analytics, to discover new facts about customers, markets, partners, costs, and operations.

Only about a third (36%) consider high performance a problem. Unfortunately, some organizations still struggle to meet user expectations and service level agreements for queries, cubes, reports, and analytic workloads. Data volume alone is a showstopper for some organizations. Common performance bottlenecks center on loading large data volumes into a data warehouse, running reports that involve complex table joins, and presenting time-sensitive data to business managers.

BENEFITS OF HIGH-PERFORMANCE DATA WAREHOUSING

Analytic methods are the primary beneficiaries of high performance. Advanced analytics (mining, statistics, complex SQL; 62%) and big data for analytics (40%) top the list of practices most likely to benefit from high performance, with basic analysis (OLAP and its variants; 26%) not too far down the list. High performance is critical for analytic methods because they demand hefty system resources, they are evolving toward real-time response, and they are a rising priority for business users.

Real-time BI practices are also key beneficiaries of HiPer DW. High performance can assist a number of real-time practices, including operational business intelligence (37%), dashboards and performance management (34%), operational analytics (30%), and automated decisions for real-time processes (25%). Don’t forget: the incremental movement toward real-time operation is the most influential trend in BI today, in that it affects every layer of the BI/DW/DI and analytics technology stack, plus user practices.

System performance can contribute to business processes that rely on data or BI/DW/DI infrastructure. These include business decisions and strategies (33%), customer experience and service (21%), business performance and execution (19%), and data-driven corporate objectives (14%).

Enterprise business intelligence (EBI) needs all the performance help it can get. By definition, EBI involves thousands of users (most of them concurrent) and tens of thousands of reports (most refreshed on a 24-hour cycle). Given its size and complexity, EBI can be a performance problem. Yet, survey respondents don’t seem that concerned about EBI, with few respondents selecting EBI issues, such as standard reports (15%), supporting thousands of concurrent users (15%), and refreshing thousands of reports (12%).

Want more? Register for my HiPer DW Webinar, coming up Oct. 9 at noon ET.

Read other blogs in this series:

The Four Dimensions of HiPer DW

Defining HiPer DW

High Performance: The Secret of Success and Survival

Posted by Philip Russom, Ph.D. on September 21, 2012

By Philip Russom, TDWI Research Director

[NOTE -- My new TDWI report about High-Performance Data Warehousing (HiPer DW) is finished and will be published in October. The report’s Webinar will broadcast on October 9, 2012. In the meantime, I’ll leak a few of the report’s findings in this blog series. Search Twitter for #HiPerDW to find other leaks. Enjoy!]

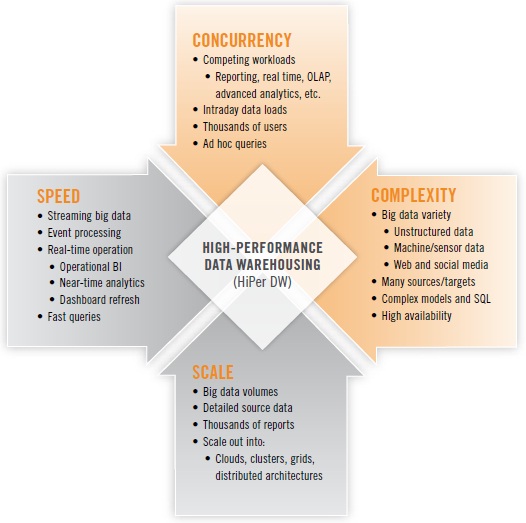

FIGURE 1.

High-performance data warehousing (HiPer DW) is primarily about achieving speed and scale, while also coping with increasing complexity and concurrency. These are the four dimensions that define HiPer DW. Each dimension can be a goal unto itself; yet, the four are related. For example, scaling up may require speed, and complexity and concurrency tend to inhibit speed and scale. The four dimensions of HiPer DW are summarized in Figure 1 above.

Here follow a few examples of each:

SPEED. The now-common practice of operational BI usually involves fetching and presenting operational data (typically from ERP and CRM applications) in real time or close to it. Just as operational BI has pushed many organizations closer and closer to real-time operation, the emerging practice of operational analytics will do the same for a variety of analytic methods. Many analytic methods are based on SQL, making the speed of query response more urgent than ever. Other analytic methods are even more challenging for performance, due to iterative analytic operations for variable selection and reduction, binning, and neural net construction. Out on the leading edge, events and some forms of big data stream from Web servers, transactional systems, media feeds, robotics, and sensors; an increasing number of user organizations are now capturing and analyzing these streams, then making decisions or taking actions within minutes or hours.

SCALE. Upon hearing the term “scalability,” most of us immediately think of the burgeoning data volumes we’ve been experiencing since the 1990s. Data volumes have recently spiked in the phenomenon known as “big data,” which forces organizations to manage tens of terabytes – sometimes hundreds of terabytes, even petabytes – of detailed source data of varying types. But it’s not just data volumes and the databases that manage them. Scalability is also required of BI platforms that now support thousands of users, along with their thousands of reports that must be refreshed. Nor is it just a matter of scaling up; all kinds of platforms must scale out into ever larger grids, clusters, clouds, and other distributed architectures.

COMPLEXITY. Complexity has increased steadily with the addition of more data sources and targets, not to mention more tables, dimensions, and hierarchies within DWs. Today, complexity is accelerating, as more user organizations embrace the diversity of big data, with its unstructured data, semi-structured data, and machine data. As data’s diversity increases, so does the complexity of its management and processing. Some organizations are ensuring high performance for some workloads (especially real-time and advanced analytics) by deploying standalone systems for them; one of the trade-offs is that the resulting distributed DW architecture has complexity that makes it difficult to optimize the performance of processes that run across multiple platforms.

CONCURRENCY. As we scale up to more analytic applications and more BI users, an increasing number of them are concurrent—that is, using the BI/DW/DI and analytics technology stack simultaneously. In a similar trend, the average EDW now supports more database workloads – more often running concurrently – than ever before, driven up by the growth of real-time operation, event processing, advanced analytics, and multi-structured data.

Want more? Register for my HiPer DW Webinar, coming up Oct. 9 at noon ET.

Read other blogs in this series:

Defining HiPer DW

High Performance: The Secret of Success and Survival

Posted on September 14, 2012

By Philip Russom, TDWI Research Director

[NOTE -- My new TDWI report about High-Performance Data Warehousing (HiPer DW) is finished and will be published in October. The report’s Webinar will broadcast on October 9, 2012. In the meantime, I’ll leak a few of the report’s findings in this blog series. Search Twitter for #HiPerDW to find other leaks. Enjoy!]

Data used to be just data. Now there’s big data, real-time data, multi-structured data, analytic data, and machine data. Likewise, user communities have swollen into thousands of concurrent users, reports, dashboards, scorecards, and analyses. The rising popularity of advanced analytics has driven up the number of power users, with their titanic ad hoc queries and analytic workloads. And there are still brave new worlds to explore, such as social media and sensor data.

The aggressive growth of data and attendant disciplines has piled additional stresses on the performance of systems for business intelligence (BI), data warehousing (DW), data integration (DI), and analytics. The stress, in turn, threatens new business practices that need these systems to handle bigger and faster workloads. Just think about modern practices that depend on real-time data, such as operational BI, streaming analytics, just-in-time inventory, facility monitoring, price optimization, fraud detection, and mobile asset management. Many of the latest practices apply business analytics to big data, which is a performance double whammy of heavy analytic workloads and extreme scalability.

The good news for BI, DW, DI, and analytic practices is that solutions for high performance are available today. These solutions involve a mix of vendor tools or platforms and user designs or optimizations. For example, the vendor community has recently delivered new types of database management systems, analytic tools, platforms, and tool features that greatly assist performance. And users continue to develop their skills for high-performance architectures and designs, plus tactical tweaking and tuning. This report [to be published in October 2012] refers to this eclectic mix of vendor products and user practices as high-performance data warehousing (HiPer DW).

In most user organizations, a DW and similar databases bear much of the burden of performance; yet, the quest for speed and scale also applies to every layer of the complex BI/DW/DI and analytics technology stack, as well as processes that unfold across multiple layers. Hence, in this report, the term high-performance data warehousing (HiPer DW) encompasses performance characteristics, issues, and enablers across the entire technology stack and associated practices.

HiPer DW Solutions Combine Vendor Functionality with User Optimizations

Performance goals are challenging to achieve. Luckily, many of today’s challenges are addressed by technical advancements in vendor tools and platforms.

For example, there are now multiple high-performance platform architectures available for data warehouses, including massively parallel processing (MPP), grids, clusters, server virtualization, clouds, and SaaS. For real-time data, databases and data integration tools are now much better at handling streaming big data, service buses, SOA, Web services, data federation, virtualization, and event processing. 64-bit computing has fueled an explosion of in-memory databases and in-memory analytic processing in user solutions; flash memory and solid-state drives will soon fuel even more innovative practices. Other performance enhancements have recently come from multi-core CPUs, appliances, columnar storage, high-availability features, Hadoop, MapReduce, and in-database analytics. Later sections of this report will discuss in detail how these and other innovations assist with high performance.

Vendor tools and platforms are indispensable, but HiPer DW still requires a fair amount of optimization by technical users. The best optimizations are those that are designed into the BI and analytic deliverables that users produce, such as queries, reports, data models, analytic models, interfaces, and jobs for extract, transform, and load (ETL). As we’ll see later in this report, successful user organizations have predetermined standards, style sheets, architectures, and designs that foster high performance and other desirable characteristics. Vendor tools and user standards together solve a lot of performance problems up front, but there’s still a need for the tactical tweaking and tuning of user-built BI deliverables and analytic applications. Hence, team members with skills in SQL tuning and model tweaking remain very valuable.

Want more? Register for my HiPer DW Webinar, coming up Oct. 9 at noon ET:

http://bit.ly/HiPerDWwebinar

Posted by Philip Russom, Ph.D. on September 7, 2012

Business decision cycles are turning faster, and to keep up, executives and managers are in constant need of new data and new types of reporting and analysis. Dynamic organizations are demanding greater agility from their business intelligence (BI) systems. TDWI Research is currently examining how well organizations are able to adjust their BI and data warehouse (DW) development, deployment, and management to enable greater agility.

How is your organization doing in addressing user demands for more agile BI/DW? What are your toughest challenges? We would very much like to include your opinions and insights in the TDWI Research survey, which is live right now. Thank you to everyone who has already participated in the survey. As part of my research for what will ultimately be a TDWI Best Practices Report, I am also conducting interviews with professionals to understand their experiences with agile development methods for BI/DW and with deploying self-service BI, data virtualization, and other technologies that are helping organizations become more agile. If you are interested, please drop me a line at [email protected].

With survey data coming in, it’s hard not to take a peek at what we have so far. Respondents say that the business factors having the most disruptive impact, requiring greater business and IT agility, are increased competition (74%, with 20% calling it “very disruptive”), economic or global instability (68%), shorter decision cycles (65%), and technology modernization (62%). Changes in customer behavior rank fifth, at 60%. The largest percentage of respondents (45%) say that their organizations are “average” at adjusting to change and taking advantage of emerging opportunities, with 10% saying that their organization is “excellent,” 31% saying “good,” and 14% saying “poor.”

Other questions in the survey will provide data for deeper insight into where challenges are most acute in terms of BI/DW development processes and technologies. One of the biggest issues regarding agility is, of course, the adoption of agile software development methods. Ralph Hughes, chief systems architect for Ceregenics, and I will be speaking on this topic on September 20 at the upcoming TDWI World Conference in Boston. If you would like to hear a preview of what we will be talking about, including the ongoing research effort into use of agile methods, listen to our recent Webinar.

Achieving greater agility through better methods and technology is a hot area of interest in the TDWI community. Let us know your views on this important topic, both by taking the research survey and by getting in touch.

Posted by David Stodder on August 27, 2012

Personal, self-service analytics and discovery is one of the most important trends not only in business intelligence (BI) but in user applications generally. Expensive, monster systems that have big footprints and are not flexible to meet dynamic business needs are increasingly viewed by users as legacy. Rather than work with monolithic, one-size-fits-all applications that are dominated by IT management and development, users today want freedom and agility. They do not want to wait weeks or months for changes; they want to tailor reporting, analysis, and data sharing to their immediate and often changing needs.

I recently wrote a TDWI Checklist Report on this topic. The report offers seven steps toward personal, self-service BI and analytics success, from taking new approaches to gathering user requirements to implementing in-memory computing, visualization, and enterprise integration. I hope you find this Checklist Report useful in your BI and analytics technology evaluations and deployments.

An important conclusion in the report is that, perhaps ironically, IT data management is absolutely critical to the success of personal, self-service analytics and discovery. Nowhere is this truer than with enterprise data integration. Business users often require a mix of different types of data, including structured, detailed data, aggregate or dimensional data, and semi-structured or unstructured content. In addition, given that it is doubtful that users will give up their spreadsheets any time soon, systems must be able to import and export data and analysis artifacts to and from spreadsheets. Assembling and orchestrating access to such diverse data sources must not be left up to nontechnical users.

Thus, even as users celebrate the trend toward personal, self-service analytics and discovery, its success hinges on IT’s data management prowess to ensure data quality, enterprise integration, security, availability, and ultimately, business agility with information.

Posted by David Stodder on June 28, 2012

At the recently concluded TDWI Solution Summit on big data analytics in San Diego, a discussion topic that percolated throughout the conference was the increasing role of the marketing function and chief marketing officers (CMOs) in IT purchases. During a question-and-answer period, an attendee asked sponsor panel speakers for comment about a January 2012 projection by Gartner research vice president Laura McLellan that by 2017, CMOs will spend more on IT than CIOs. Though impressed by the projection, the panelists did not seem surprised by this trend.

Analytics adoption is driving major changes in marketing functions, which in most organizations are empowered with the responsibility for identifying, attracting, satisfying, and keeping customers. Marketing functions are becoming increasingly quantitative; they are replacing “gut feel” with data-driven decision making. Data drives the pursuit of efficiency and the achievement of measurable results. Marketing functions are key supporters of “data science,” which is the use of scientific methods on data to develop hypotheses and models and apply iterative, test-and-learn strategies to marketing campaigns and related initiatives.

In the new TDWI Best Practices Report I wrote, “Customer Analytics in the Age of Social Media” (to be published in early July), our survey found that in the majority of organizations (59%), IT and data management functions are still the owners of the budget for customer analytics technologies and services. TDWI did, however, discover a growing budget role played by marketing and advertising functions. Nearly two out of five (38%) respondents said that this function has responsibility for the customer analytics budget in their organizations. Executive management (39%) is also a significant player in budgetary decisions. (Note: “Big data analytics for better customer intelligence” is the theme of the next TDWI BI Executive Summit in San Diego.)

Whether located in IT or under the aegis of the corporate marketing function, specialists in customer analytics must often consult with globally distributed, departmental marketing teams as well as other business units to understand key business challenges and opportunities that should be considered in the development of models, algorithms, queries, and data files for analysis. In other words, customer analytics professionals must be able to live in both technology and business worlds and work with diverse teams from not only marketing, but also finance and operations, to develop accurate, consistent, and common metrics for evaluating results. The ability to move across functions is important for delivering holistic, or enterprise, benefits from customer analytics that go beyond marketing.

Customer analytics and the budget for analytic processes are often in the middle of tensions between IT and marketing. In interviews for this report, TDWI found that the growth in analytics implementation by marketing functions is putting stress on relations with IT over control of the data and who develops and runs analytic routines. The iterative, discovery-oriented qualities of predictive modeling and variable development don’t fit well with IT’s standard approach to gathering all user requirements at once and owning the development of a solution. “IT would ask us to identify the fields we wanted,” a marketing data analyst interviewed for the report said, “but we had to say, ‘Gee, we won’t know until we can look at what’s available and start playing with it.’”

Analytics is thus rising as a sensitive – and competitive – issue as marketing functions gain a larger share of organizations’ technology budgets. It is imperative, therefore, that CMOs and CIOs communicate effectively about shared customer analytics objectives to avoid letting internal budgetary battles become an obstacle to business success. Functioning in a complementary and collaborative fashion, marketing and IT functions can achieve more together than either could accomplish alone.

Posted by David Stodder on June 18, 2012