View online: tdwi.org/flashpoint

January 16, 2014

ANNOUNCEMENTS

NEW TDWI E-Book:

From Enterprise to Personal Search: Flipping the Search Landscape

NEW TDWI E-Book:

Top Considerations for Effective Visualizations

NEW TDWI Best Practices Report:

Predictive Analytics for Business Advantage

NEW TDWI Checklist Report:

Seven Use Cases for Geospatial Analytics

NEW TDWI Big Data Maturity Model and Assessment Tool

Take the Assessment Now

CONTENTS

Going Agile with Data Warehouse Automation

Mainframes: The (Other) Elephant in the Big Data Room

In-Memory Computing for Visual Analysis and Discovery

Thinking of Your Data Strategy as a Plan Rather than a Process

See what's current in TDWI Education, Events, Webinars, and Marketplace

Going Agile with Data Warehouse Automation

Dave Wells

BI Consultant, Mentor, and Teacher

Topics:

Data Analysis and Design, Data Warehousing

The common and long-standing problems with data warehouses are that they take too long to build, they cost too much to build, and they’re too hard to change after they are deployed. Being slow, expensive, and inflexible is the antithesis of agile, yet these barriers are difficult to overcome with conventional development methods. Fortunately, there is an alternative. Data warehouse automation, a relatively mature but underutilized technology, is an effective way to remove all three barriers to agile data warehousing.

What Is Data Warehouse Automation?

Data warehouse automation uses technology to increase efficiency and improve effectiveness in data warehousing processes. It is more than simply automating ETL development or even the entire development process. It encompasses the entire data warehousing life cycle, from planning and analysis through design and development, and on into operations, maintenance, and change management.

Adoption of data warehouse automation changes the way we think about building data warehouses. The widely accepted best practice of extensive up-front analysis, design, and modeling can be left behind as the mindset changes from “Get it right the first time” to “Develop fast and develop frequently,” an approach that is aligned with today’s agile development practices.

Automation in data warehousing has many of the same benefits as in manufacturing:

- Increased productivity and speed of production

- Reduction of manual effort

- Improved quality and consistency

- Better controls and process optimization opportunities

The manufacturing parallel holds true when building a data warehouse; we can think of it as an information factory. However, data warehousing is more complex than product manufacturing. Manufactured products are typically delivered to a consumer and the job is done. Data warehouses must be sustained through a long life cycle where changes in source data, business requirements, and underlying technologies are ongoing concerns. Automation helps implement the right changes in the right ways, as quickly as they are needed.

There are, of course, popular arguments in favor of handcrafting. In consumer goods, for example, advocates of handmade objects use terms such as unique, personal, and human touch. These are all valid reasons to buy a handmade scarf, but are they qualities that are needed in a data warehouse?

Business Benefits

Data warehouse automation is not just a “technology thing” to help developers and IT professionals who build and operate data warehouses. It has real and substantial business benefits, including:

- Quality and effectiveness: Automation enables warehousing teams to build solutions that best meet real business requirements. It is especially difficult to get complete and correct requirements when requirements gathering is confined to an early phase of a linear development process. With data warehouse automation, the business can make changes much later in the development process, and changes can occur more frequently with less disruption, waste, and rework.

- Business agility: The ability to change fast and frequently extends beyond the warehouse development process. Changes that occur in business requirements can be met with a quick response. Responding to change in real time and without the delay of lengthy projects is the essence of business agility.

- Speed: Speed is the critical factor that enables both business agility and agile development. The ability to generate (and regenerate) data warehouse structures and processes quickly when change occurs is a fundamental automation capability.

- Cost savings: Ultimately, building better, building faster, and changing quickly when needed bring substantial cost savings to data warehouse development, operations, maintenance, and evolution.
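To make "generate (and regenerate)" concrete, here is a minimal Python sketch of metadata-driven generation, assuming a hypothetical in-house metadata model: the `Column` and `Table` classes, the `generate_ddl` function, and the `dim_customer` table are illustrative names, not any vendor's API. The table definition lives in metadata and the SQL is rendered from it, so a requirement change becomes a metadata edit followed by regeneration.

```python
from dataclasses import dataclass, field


# Hypothetical metadata model; real automation tools keep far richer repositories.
@dataclass
class Column:
    name: str
    sql_type: str
    nullable: bool = True


@dataclass
class Table:
    name: str
    columns: list[Column] = field(default_factory=list)
    primary_key: list[str] = field(default_factory=list)


def generate_ddl(table: Table) -> str:
    """Render a CREATE TABLE statement purely from the table's metadata."""
    lines = []
    for col in table.columns:
        null_clause = "" if col.nullable else " NOT NULL"
        lines.append(f"    {col.name} {col.sql_type}{null_clause}")
    if table.primary_key:
        lines.append(f"    PRIMARY KEY ({', '.join(table.primary_key)})")
    body = ",\n".join(lines)
    return f"CREATE TABLE {table.name} (\n{body}\n);"


customer_dim = Table(
    name="dim_customer",
    columns=[
        Column("customer_key", "INTEGER", nullable=False),
        Column("customer_name", "VARCHAR(100)"),
    ],
    primary_key=["customer_key"],
)
print(generate_ddl(customer_dim))

# A new business requirement is handled as a metadata edit plus regeneration,
# not as a hand edit to the SQL.
customer_dim.columns.append(Column("loyalty_tier", "VARCHAR(20)"))
print(generate_ddl(customer_dim))
```

This sketch only regenerates the full table definition; an automation product would also have to produce the migration for a table that is already deployed and regenerate the load processes that depend on it.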

Technical Benefits

The benefits of automation aren’t solely for business. IT organizations also derive real value from data warehouse automation in several ways:

- Agile projects: Agile and automation work well together

- Time savings: Developers can deliver more in less time

- Adaptability: It is much easier to make changes when requirements change

- Consistency: Building in standards and conventions makes them easy to follow

- Better documentation: You can generate comprehensive and consistent documentation that stays in sync with implementations

- Impact analysis: Metadata-based capabilities enable complete identification of the upstream and downstream impacts of planned changes

- Simplified testing: Most automation tools support both incremental testing during development and validation testing as part of operations

- Maintainability: Ease of maintenance is achieved through improved consistency, better documentation, simplified testing, and automating design and implementation of changes
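The impact-analysis capability can be illustrated with a minimal Python sketch, assuming lineage metadata is stored as simple "source feeds target" pairs. The object names, the `LINEAGE` list, and the `build_graph` and `reachable` helpers are hypothetical; commercial tools keep much richer lineage in their own repositories. Identifying every upstream and downstream object is a graph traversal, done here as a breadth-first search in each direction.

```python
from collections import deque

# Hypothetical lineage metadata: each pair means "source feeds target".
LINEAGE = [
    ("crm.customers", "stg_customer"),
    ("stg_customer", "dim_customer"),
    ("erp.orders", "stg_orders"),
    ("stg_orders", "fact_sales"),
    ("dim_customer", "fact_sales"),
    ("fact_sales", "rpt_monthly_revenue"),
]


def build_graph(edges):
    """Index lineage edges in both directions."""
    downstream, upstream = {}, {}
    for src, tgt in edges:
        downstream.setdefault(src, set()).add(tgt)
        upstream.setdefault(tgt, set()).add(src)
    return downstream, upstream


def reachable(start, adjacency):
    """Breadth-first search returning every object reachable from start (excluding start)."""
    seen, queue = set(), deque([start])
    while queue:
        node = queue.popleft()
        for nxt in adjacency.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    seen.discard(start)  # in case a cycle leads back to the starting object
    return seen


downstream, upstream = build_graph(LINEAGE)
change = "stg_customer"
print("Upstream:  ", sorted(reachable(change, upstream)))
print("Downstream:", sorted(reachable(change, downstream)))
```

For a planned change to `stg_customer`, the sketch reports `crm.customers` upstream and `dim_customer`, `fact_sales`, and `rpt_monthly_revenue` downstream. Tracking visited objects keeps the traversal from looping if the lineage ever contains a cycle.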

BIReady, Kalido, timeXtender, and WhereScape are among the vendors offering data warehouse automation products. The business case is strong, the value proposition is sound, and automation will help make agile data warehousing a reality.

Dave Wells is actively involved in information management, business management, and the intersection of the two. With more than 30 years of experience in information management and more than 10 years in business management, he brings a wealth of pragmatic knowledge. Dave is teaching a full-day class about data warehouse automation on February 24, 2014, at TDWI’s World Conference in Las Vegas.

Mainframes: The (Other) Elephant in the Big Data Room

Jorge A. Lopez

With up to 80 percent of data originating on your mainframe, you can’t ignore big data trends. In this article, we recommend steps to get started using Hadoop to leverage your mainframe data.

At first sight, mainframes and Hadoop might seem the most unlikely duo. One appeared in the late 1950s, even before the PC, while the other has yet to reach its teenage years but is already bragging about managing big data. Much has been said and written about the death of the mainframe, but the truth is that some of the largest organizations (think of the world’s top telcos, retailers, insurers, and healthcare and financial organizations) still rely on mainframes for mission-critical applications.

When talking to these organizations, it’s not unusual to hear that up to 80 percent of their corporate data originates on the mainframe. That is some serious big data, and organizations cannot afford to neglect it! That’s why they are making the mainframe a core piece of their big data strategies.

How can such organizations get started with Hadoop? What are some practical Hadoop use cases for mainframe users?

Read the full article and more: Download Business Intelligence Journal, Vol. 18, No. 4

Highlights of key findings from TDWI's wide variety of research

In-Memory Computing for Visual Analysis and Discovery

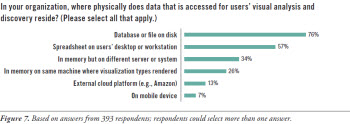

Although in-memory computing for analytics is a hot industry topic, TDWI Research finds that, overwhelmingly, the data users access for visual analysis and discovery physically resides in a database or file on disk (76%; see Figure 7). The second most prevalent source is spreadsheets on desktops or workstations (57%). In-memory computing nonetheless attracts a healthy amount of interest: one-third of respondents are implementing in-memory computing on servers or systems separate from those where visualizations are rendered, and 26% are doing so on the same machine.

Adoption of 64-bit operating systems has made it easier for developers and users of BI and analytics systems to exploit very large memory and bring powerful functions closer to the data. With in-memory computing, the traditional I/O bottleneck, in which queries must read information from tables stored only on disk, becomes less of a factor. Users can perform, on their own, types of analysis that would be too slow on disk-dependent systems or too limited in scope because not enough data is available. In-memory computing could therefore be an advantage for complex, highly interactive analytics, or in circumstances where querying live data directly would hurt the performance of operational data sources.

Read the full report: Download Data Visualization and Discovery for Better Business Decisions (Q3 2013)

FlashPoint Rx prescribes a "Mistake to Avoid" for business intelligence and data warehousing professionals.

Mistake: Thinking of Your Data Strategy as a Plan Rather than a Process

Mark Madsen

Your data strategy is not static. You don’t create a plan once and execute it. The nature of strategy is planning your way through ambiguity and positioning for the best long-term outcomes. Strategy is a process of adapting, not the definition of an end state that will be reached.

Business conditions change: there are mergers, acquisitions, divestitures, exits from unprofitable markets, and introductions of products into new markets. The conditions change constantly, and with them, the goals and strategies of your organization.

Strategies evolve as conditions change. The conditions can be internal or external: the processes and constraints of the business, the available data, the staff and skills, or the shifting technology market. For example, a data strategy from a decade ago would have assumed that all data would be managed inside the organization; today it must assume that data will be managed across multiple locations, both inside and outside the direct physical control of IT. Creating a data strategy therefore implies an ongoing process of revision and update that keeps it aligned with the strategy of the organization as a whole.

Read the full issue: Download Ten Mistakes to Avoid When Creating Your Data Strategy (Q4 2013)