View online: tdwi.org/flashpoint

View online: tdwi.org/flashpoint

September 12, 2013

ANNOUNCEMENTS

NEW TDWI Checklist Report:

Operational Intelligence: Real-Time Business Analytics from Big Data

CONTENTS

Reallocation of Data Resources for Predictive Analytics Projects

Insights on Hiring for BI and Analytics

Mistake: Cherry-Picking Agile Practices

See what's

current in TDWI Education, Events, Webinars,

and Marketplace

Reallocation of Data Resources for Predictive Analytics Projects

Thomas A. Rathburn

The Modeling Agency

Topics:

Business Analytics

Introduction

Traditional statistical projects often utilize a train/test design. This approach is appropriate because most projects are completed to test a hypothesis about a specific formulation of a solution using a single model.

Predictive analytics projects generally involve the development of many models in the search for a solution that significantly outperforms the current decision-making process. In a business environment, these projects are likely to involve some aspect of human behavior.

Human behavior does not possess the underlying structure of physical systems, and is further complicated by a high level of inconsistency. The combination of these factors means you must add a significant level of validation to your project development effort to ensure the models will perform in a live decision-making environment.

The Train/Test/Validate Project Design

Mutually exclusive data sets are developed for each phase of the project design:

- Training Data Set: Training data is used for the development of challenger models that will compete to replace the current champion model. This will fulfill the decision-making role for the process under analysis.

- Testing Data Set: All developed models are run against a single set of test data. This allows you to evaluate the relative performance of each of the competing models based on your business performance metrics.

- Validation Data Sets: A single model is selected, based on test performance, and advances into validation. Validation studies are completed to allow the analyst to develop a reliable estimate of performance and variance expectations.

How Much Data Is Enough?

The number of records to be included in each data set varies depending on the type of project being undertaken, and the nature of the decision process in the business environment. In today’s environments, we tend to use far more data than is required, or desirable, in the development of models. The traditional recommendation to use approximately half of the available data for training is no longer justified. Rather, the vast majority of your data should be reserved for validation.

- Training Data Set: For classification projects with a binary “outcome” attribute, the training data set typically requires approximately 5,000 records, uniformly distributed between the 1s and 0s. For forecasting projects, the training data should include a minimum of 25 to 50 records for each “condition” attribute in the model.

- Testing Data Set: The test data set should reflect the approximate number of examples that will be scored in a decision cycle in the business environment. More than one decision cycle may be utilized for testing. Results should be computed in a manner consistent with the live decision environment.

- Validation Data Sets: The structure of the validation data sets should be consistent with the decision cycle under which the model will perform in the live environment. It is recommended that 30 to 50 validation studies be completed. The central limit theorem tells us that this level of validation provides a statistically valid estimate of performance and variance expectations.

The Impact of Additional Records

The inclusion of additional records in training may be useful for forecasting projects, which are inherently based on developing estimates of a continuous valued “outcome” attribute. Additional data provides reference points for your interpolation efforts that are closer together in mathematical space. The use of additional records offers the potential for additional precision in your estimates.

Human behavior is inherently inconsistent. In both forecasting and classification projects, the inconsistency content of additional records may have a negative impact on the performance of your model that is more significant than any positive impact of the additional information contributed by those records. Maintaining approximately 5,000 records typically provides an adequate representation of a multidimensional solution space.

Why Validation Should Be Your Priority

In our current data-rich environment, we are rarely faced with the historical issues related to a lack of experience reflected in our data. The analyst generally has far more records available for developing models than is required to successfully complete a project. The threat of “fat data” is the high dimensionality often represented in our models. This threat too often results in models that do not generalize well into unseen experiences that will be encountered in a live decision environment. Our priority, in this environment, needs to shift away from concerns related to model development and focus on ensuring that the models we do develop will perform as expected in the live decision environment.

Thomas A. Rathburn senior consultant and training director for The Modeling Agency, has a strong track record of innovation and creativity, with more than two decades of experience in applying predictive analytics in business environments, assisting commercial and government clients internationally in the development and implementation of applied analytics solutions. He is a regular presenter of the data mining and predictive analytics tracks at TDWI World Conferences.

Insights on Hiring for BI and Analytics

Hugh J. Watson, Barbara H. Wixom, and Thilini Ariyachandra

Although there are many keys to success in business intelligence and business analytics, having the right talent is critical. Finding BI/BA talent, however, is becoming a serious problem as more organizations turn to the use of analytics. Our research has found that 89 percent of BI/BA employers anticipate that their organizations’ needs for BI/BA skills will increase over time. An oft-cited study by the McKinsey Global Institute predicts that by 2018 the U.S. will have a shortage of 140,000 to 190,000 people with deep analytical skills, and 1.5 million managers and analysts will be needed to analyze big data and make decisions (Manyika, et al, 2011).

There are several options for securing BI/BA talent. A common approach is to hire people from other companies. This may address short-term needs for some enterprises, but it is clearly not a sustainable solution for the marketplace. Another option is to find people from within your own organization and augment their skills through employee training and development programs. Power users with an analytic bent are good candidates for this approach. A third alternative is to hire recent college graduates.

In this article, we will share some thoughts and recent survey data to provide insights about hiring BI/BA talent from universities.

Read the full article and more: Download Business Intelligence Journal, Vol. 18, No. 2

Highlight of key findings from TDWI's wide variety of research

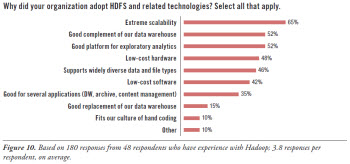

Reasons to Adopt Hadoop

To get a sense of why user organizations are deploying Hadoop, the survey asked a subset of respondents: Why did your organization adopt HDFS and related technologies? (See Figure 10.)

Real-world organizations adopt Hadoop for its extreme scalability. Sixty-five percent of respondents with Hadoop experience chose Hadoop for scalability, whereas only 19% of the total survey population viewed scalability as an important benefit (refer back to Figure 8). In other words, the more users learn about HDFS, the more they respect its unique ability to scale.

Users experienced with HDFS consider it a complement to their DW. Roughly half of respondents believe this (52% in Figure 10), whereas only 15% think HDFS could replace their DW.

Half of Hadoop users deployed it as a platform for exploratory analytics (52%). However, one-third (35%) feel that HDFS and related technologies are also good for several applications (such as DW, archive, and content management).

Almost half of Hadoop users surveyed chose it because it’s cost effective. Compared to other enterprise software, HDFS and its tools are low-cost software (42%), even when acquired from a vendor. They run on low-cost hardware (48%).

Read the full report: Download Integrating Hadoop into Business Intelligence and Data Warehousing (Q2 2013)

FlashPoint Rx prescribes a "Mistake to Avoid" for business intelligence and data warehousing professionals.

Mistake: Cherry-Picking Agile Practices

Ken Collier, Ph.D.

Scrum only has five prescribed practices and three roles. Nonetheless, it’s surprising how many agile BI teams cherry-pick the simpler practices or even redefine the practices to suit them. This mistake is so prevalent that it has become known as “Scrum … but,” as in, “We do Scrum, but having a daily Scrum meeting is onerous, so we just do one per week,” or “We do Scrum, but two-week sprints are too short, so our sprints are eight weeks long.”

Scrum must be augmented with sound technical practices to be an effective method. Techniques such as test automation and continuous integration are essential to effective agile BI, yet few agile BI teams undertake these disciplines.

Agile BI also calls for a shift in management and leadership discipline. These leadership behaviors are all agile management disciplines:

- Enabling teams to be self-organizing and self-managing

- Creating cross-functional, co-located teams

- Cultivating a culture of project transparency without penalties

- Rewarding early experimentation and failure

All of these, and other agile practices and disciplines, are important components of an effective agile culture. It is important to be rigorous when adopting agile BI. When only the easy practices are adopted and the harder ones are either abandoned or marginalized, the result is a confusing blend of conventional discipline and lightweight agile methodology. This mistake often results in (at best) mediocre success in applying agility to data warehousing and business intelligence.

Read the full issue: Download Ten Mistakes to Avoid In an Agile BI Transformation (Q2 2013)