View online: tdwi.org/flashpoint

November 3, 2011

ANNOUNCEMENTS

Submissions for the next Business Intelligence Journal are due December 2. Submission guidelines

CONTENTS

Making the Case for Data Inventory: Busywork or Critical Need?

Additional Technologies for OpDI

Mistake: Lack of a Requirements Management Process

See what's current in TDWI Education, Research, Webinars, and Marketplace

Making the Case for Data Inventory: Busywork or Critical Need?

Lisa Loftis

Baseline Consulting

Topic: Data Inventory

When thinking of mission-critical applications, data inventories rarely come to mind. In fact, in an informal poll of our clients, no one mentioned “data inventory” and “critical” in the same sentence. Until recently (and I hate to admit this), I probably would have agreed with the prevailing opinions of our clients. Not anymore.

As I researched data inventory methods and processes for my TDWI data inventory workshop, the findings really opened my eyes to both the need for, and benefits of, data inventories.

Attempting to understand what data we have and publishing that understanding in a way others can access is nothing new. For the history buffs, the ancient Assyrians, widely credited with both the first finance/accounting systems and early written word, marked their writings (the tablets) with labels so they could be easily retrieved from storage. They also denoted hollow clay envelopes used for commerce (bullae) with markings designed to indicate the contents stored within. Occurring some 2,800 years ago, these activities could be considered the first data inventory.

In more recent times, a plethora of legislation and compliance frameworks has required fairly extensive data inventory and classification. Consider the Control Objectives for Information and Related Technology (COBIT) framework. COBIT is the framework used by many companies to comply with Sarbanes-Oxley, and it has a number of data requirements, including:

Establish a classification scheme that applies throughout the enterprise, based on the criticality and sensitivity (e.g., public, confidential, top secret) of enterprise data. This scheme includes details about data ownership, definition of appropriate security levels and protection controls, and a brief description of data retention and destruction requirements, criticality, and sensitivity. It is used as the basis for applying controls such as access controls, archiving, or encryption.

Next, let’s look at the impending legislation in Europe, Solvency II, that has insurers scrambling (and spending millions in the process) to comply. Although the primary focus of this legislation is on ensuring adequate and accurate risk modeling, the underlying data used in those risk models is getting plenty of attention. The following is just one of many similar provisions included in the legislation:

Data is appropriate (i.e., relevant to the portfolio of risk). Data is complete when all material information is taken into account and reflected in the data set. It should have sufficient granularity to allow for the identification of trends and the full understanding of the behavior of the underlying risks. Data is accurate when it is free from material mistakes, errors, and omissions. The assessment of the accuracy criteria should include appropriate cross-checks and internal tests to the consistency of data (i.e., with other relevant information or with the same data in different points in time).

Both COBIT and Solvency II regulations demand many elements of a data inventory, including the ability to track data ownership, definitions, quality, access rights, sensitivity, and lineage. You can’t apply access controls or ensure the appropriateness, completeness, or accuracy of data if you don’t know where that data resides, who is accessing it, or how it is being changed as it moves through your organization.
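
To make those elements concrete, here is a minimal sketch of what a single inventory record might hold. The class, field, and function names are illustrative assumptions, not part of COBIT, Solvency II, or any particular tool; the sensitivity levels simply mirror the examples in the COBIT excerpt above.

```python
from dataclasses import dataclass, field
from enum import Enum


class Sensitivity(Enum):
    # Example levels borrowed from the COBIT excerpt; a real scheme may differ.
    PUBLIC = 1
    CONFIDENTIAL = 2
    TOP_SECRET = 3


@dataclass
class InventoryEntry:
    """One data element in a hypothetical data inventory."""
    name: str                      # what the element is called
    definition: str                # agreed business definition
    owner: str                     # accountable person or department
    location: str                  # where the data resides
    sensitivity: Sensitivity       # classification level
    access_roles: set = field(default_factory=set)   # roles allowed to read it
    lineage: list = field(default_factory=list)      # upstream sources, in order


def can_access(entry, role):
    """Return True if the given role is allowed to read this element."""
    return role in entry.access_roles


def missing_fields(entry):
    """List the attributes left blank, i.e., the gaps in this inventory record."""
    required = ("name", "definition", "owner", "location")
    gaps = [attr for attr in required if not getattr(entry, attr).strip()]
    if not entry.lineage:
        gaps.append("lineage")
    return gaps


# Hypothetical example record with an undocumented definition.
premium = InventoryEntry(
    name="policy_premium",
    definition="",
    owner="Actuarial",
    location="claims_dw.policy",
    sensitivity=Sensitivity.CONFIDENTIAL,
    access_roles={"actuary"},
    lineage=["policy_admin_system"],
)
print(missing_fields(premium))
print(can_access(premium, "marketing"))
```

Even a record this small shows why the inventory comes first: the access check and the gap report both depend on knowing where the data lives and who owns it.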

The latest trend, big data, is another problem that many of our clients are just starting to tackle, and it’s a challenge that highlights the need for a data inventory. According to The Economist, the information created by mankind is compounding at almost 60 percent annually. Alex Szalay, a professor at Johns Hopkins University, drives this point home: “How do we make sense of all these data? People should be worried about how we train the next generation, not just of scientists, but people in government and industry.” 1

On reflection, I think we should reconsider the importance of data inventory to our organizations. Building and properly maintaining a structured data inventory is a process we can use to bring our massive amounts of data back into a form we can use to best advantage. The inventory is a necessary component of managing data as a strategic asset to the organization, and it can be of tremendous use in both setting and carrying out our data governance policies.

Perhaps its biggest promise is that a structured and regular data inventory process can eliminate manual, custom, and repetitive work, including data fixes, definitions, and metadata generation. It can reduce individual or departmental data hunting and gathering while simultaneously lowering data carrying costs and driving improved compliance. What’s not to love about a data inventory done right?

1 “Data, Data, Everywhere,” The Economist, London, February 2010.

Lisa Loftis is a management consultant with Baseline Consulting. She will be teaching her workshop, “Conducting a Structured Data Inventory,” at the TDWI World Conference in Las Vegas in February 2012.

Highlight of key findings from TDWI's wide variety of research

Additional Technologies for OpDI

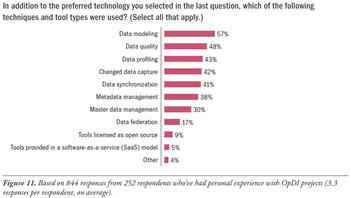

Besides asking about preferred technologies, this report’s survey also asked about additional technologies and tool types that data integration specialists are using for OpDI. (See Figure 11.) This question was directed solely at survey respondents who reported having personal experience with OpDI projects, so their responses represent real-world tool usage. Again, survey responses reveal a priority order of technologies and tool types, which we’ll discuss.

Source: Operational Data Integration: A New Frontier for Data Management (TDWI Best Practices Report, Q2 2009). Access the report here.

FlashPoint Rx prescribes a "Mistake to Avoid" for business intelligence and data warehousing professionals.

Mistake: Lack of a Requirements Management Process

By Dave Wells

The process of gathering requirements is fraught with problems unless it is supported by a requirements management process. The goal of requirements management is to ensure that the set of requirements collectively satisfies all of the needs for a system. Eliciting and individually specifying requirements is different from looking across the entire set of requirements to manage completeness, consistency, dependency, and change.

A comprehensive requirements management process addresses each of the following items:

- IDENTIFICATION Every requirement has a unique identifier in the form of a code, number, or short name.

- RECORDING Every requirement is consistently described using a standard or template, and each is linked to an index of all requirements.

- TRACING Each requirement is traceable to its source; the path from business to functional to technical requirements can be followed.

- CHANGE Every change to a requirement is recorded from the time the requirement is elicited to the time that it is implemented.

- CLASSIFICATION Each requirement is classified as business, functional, or technical.

- CONNECTION Each requirement points to any related documents, models, or other artifacts of the requirements-gathering process.

- VERIFICATION The state of each requirement is known as it relates to testing criteria such as clarity, ambiguity, necessity, and feasibility.

- PRIORITY It is sometimes necessary to prioritize requirements as a method of managing project time and scope.
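
As a rough illustration, the items above could be captured in a single requirement record. This is only a sketch under stated assumptions: the field names, the identifier format, and the `trace` helper are hypothetical and do not come from the source publication.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Requirement:
    req_id: str                        # IDENTIFICATION: unique code or short name
    description: str                   # RECORDING: text written to a standard template
    classification: str                # CLASSIFICATION: business, functional, or technical
    parent_id: Optional[str] = None    # TRACING: the requirement this one derives from
    artifacts: list = field(default_factory=list)   # CONNECTION: related documents, models
    verified: bool = False             # VERIFICATION: has it passed the testing criteria?
    priority: Optional[int] = None     # PRIORITY: optional rank for managing scope
    changes: list = field(default_factory=list)     # CHANGE: history of revisions

    def revise(self, new_description, when):
        """Record the old and new wording before applying a change."""
        self.changes.append((when, self.description, new_description))
        self.description = new_description


def trace(index, req_id):
    """Follow parent links from a requirement back to its original source."""
    path = []
    current = index.get(req_id)
    # Stop at the top of the chain, or if a link loops back on itself.
    while current is not None and current.req_id not in path:
        path.append(current.req_id)
        current = index.get(current.parent_id) if current.parent_id else None
    return path


# Hypothetical index of all requirements, keyed by identifier.
index = {
    r.req_id: r
    for r in [
        Requirement("BR-1", "Reduce customer churn", "business"),
        Requirement("FR-7", "Churn dashboard", "functional", parent_id="BR-1"),
        Requirement("TR-12", "Nightly churn data load", "technical", parent_id="FR-7"),
    ]
}
print(trace(index, "TR-12"))
```

Keeping every requirement in one index is what makes the cross-cutting checks possible: completeness, consistency, and dependency questions become queries over the whole set instead of reviews of individual documents.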

Source: Ten Mistakes to Avoid When Gathering BI Requirements (Q3 2008). Access the publication here.